Augmented reality (AR) and virtual reality (VR) are types of mixed reality (MR) technologies, with AR defined as technologies that mix the digital and physical and VR defined as technologies that replace reality with the virtual (Milgram & Kishino, 1994). Because AR/VR is not a single technology but a category defined by a set of criteria (e.g., virtual, real, 3D, interactive), AR/VR technologies can vary significantly in terms of devices used to access the technology, display types, degrees of immersiveness, input modalities, levels of interactivity, uses of avatars/other embodiments, and types of content (Liao, 2016).

Because AR/VR is a visual medium, many of its early applications have been considered for educational purposes (Bacca et al., 2014; Wu et al., 2013), with deployments of AR learning materials (Klopfer & Squire, 2008), AR educational games (Di Serio et al., 2013; Dunleavy et al., 2009), VR task simulators (Henderson & Feiner, 2009), and even entire courses delivered in VR environments (Han et al., 2023). AR/VR uses have been explored across varied learning contexts, including primary (Freitas & Campos, 2008; Hidayat et al., 2021), secondary (Martin-Gutierrez et al., 2015), postsecondary (Fonseca et al., 2014), and postgraduate specialties like medical training (Barsom et al., 2016; Kaplan et al., 2021).

Over the past decade, meta-analyses of AR/VR studies in education have shown small but demonstrable improvements in educational outcomes through the use of AR/VR technologies as compared to traditional methods (Chang et al., 2022; Garzón & Acevedo, 2019; Yu & Xu, 2022). With the increasing affordability of AR/VR devices, interest in this emerging area continues to grow. Recent articles have examined novel implementations of entire undergraduate courses in VR (Han et al., 2023). Although the first wave of research seems promising, some areas still need to be explored at programmatic and implementation levels to facilitate authentic learning. For example, while there are many studies about AR/VR educational applications in experimental settings, they often leave out details about how the applications were designed and created (Radianti et al., 2020). Another feature of AR/VR research in education is that it has tended to group a variety of technologies together under the umbrella of AR/VR (Chang et al., 2022) and to look at how the technology as a whole affects specific variables such as learning, engagement, or motivation (see, for example, Tai et al., 2022). Only a few recent studies have compared specific technological features within AR/VR devices and examined how they contribute to (or impede) certain learning objectives (Won et al., 2023). Many early implementations have also been assessed experimentally, without real-world implementation with groups of learners. Dey and colleagues (2018) found in their meta-analysis of hundreds of AR research articles over a decade that most studies were experimental user studies, with few comparisons/evaluations of creation processes and only a small number of field studies to assess implementation.

While these studies are important in demonstrating that AR/VR has potential in educational contexts, there is widespread recognition that the next phase of research will have to answer some important questions. The first is to examine the processes for creating these applications to understand and compare how decisions made in the design process can affect learning outcomes. Second, field studies that fully implement these technologies in real-world settings are critical to the generalizability of these findings across learning populations, and also to understanding the issues that arise and whether they can be overcome across educational institutions, faculty, and contexts. Many of the educational studies on both AR and VR have run into similar issues of implementation and scalability (Han et al., 2023; King et al., 2018).

This design case aims to address this gap by describing a programmatic effort undertaken at a large Research 1 institution in the state of Texas. The University began with a broad initiative to “Envision the Classroom of the Future” and formed an AR/VR task force dedicated to creating and implementing real-world AR/VR applications in the undergraduate curriculum. This article first describes a structured process for how the team operated, made decisions, and worked collaboratively across disciplinary areas. The team utilized pedagogical theories of backward design and educational implementation theories to center the designs around the learning outcomes and to make sure that the technology was serving those objectives. We spotlight the case of creating and implementing a VR learning activity for preservice elementary school teachers designing their own classrooms. From this case, we conclude with some recommendations for practice and reflections on ways in which a theoretically grounded process helped the team navigate the challenges of real-world design and implementation.

Introducing emerging technologies into the classroom is challenging, especially when the aim is to move beyond novelty and create implementations that facilitate authentic and meaningful learning (Davison & Lazaros, 2015; Herrington et al., 2006; Levin & Wadmany, 2008). Some common pitfalls are content-related, in which the technology-integrated solutions fail to address clear learning objectives. Another issue can be overutilization of the technology, where early adopters promote the use of a technology before its necessity or efficacy has been established. Even when a technology is well-designed and useful, instructor adoption and confidence in using it in their teaching can also be a challenge (Aldunate & Nussbaum, 2013). Instructor hesitation may be related to uncertainty about whether the tool will work in their classroom, concern about the additional time and effort needed to learn the tool and integrate it into existing lessons, or fear of losing credibility by using a tool that they have not mastered (Johnson et al., 2016).

Additionally, AR/VR adoption poses some unique challenges in the educational setting. The first is motion sickness, which can lower students’ willingness to participate (Chang et al., 2020). Second, there are resource challenges, as headsets for each student and ample time to teach students setup and navigation are needed (Han et al., 2023). Third, instructors may have difficulty seeing what students see in their headsets, which can limit the instructor’s ability to assist them in the VR environment or necessitate content management subscriptions (e.g., ManageXR, ArborXR). Lastly, there are logistical issues of headset charging and ancillary equipment needs such as cables and storage. Consideration also needs to be given to the selection of the AR/VR hardware, software, and content, of which there are many options. Much of the existing educational adoption has been with out-of-the-box solutions, with more work needed on applications “developed on a bespoke basis by University faculty, staff, and/or students to test hypotheses or undergo experiential learning” (Hutson & Olsen, 2021, p. 6).

Our task force acquired and tested several of the latest devices and platforms and provided faculty members with demonstrations and examples of a wide range of AR/VR technologies. Even though this particular implementation used VR, the literature on AR in education is still relevant because AR and VR implementations often confront similar challenges and barriers. Understanding some of the logistical reasons why AR was not ultimately chosen in this case could also be instructive for future practitioners.

Many institutions of higher education are working to re-envision education, prompted in part by changes brought on during the COVID-19 pandemic, and to support student success while meeting the needs of the current workforce (García-Morales et al., 2021; Mintz, 2024). The present project emerged in this environment. An interdisciplinary team was formed, composed of AR/VR research faculty in the College of Engineering, curriculum and instruction experts and clinical teaching faculty from the College of Education, computer science programmers from the College of Natural Sciences and Mathematics, and digital media producers from the College of Engineering Technology Division.

In reimagining the classroom for AR/VR integration, the team utilized two theoretical models that structured the process and guided decision-making: Understanding by Design (UbD; commonly referred to as “backward design”) and Substitution, Augmentation, Modification, Redefinition (SAMR). The backward design model begins with student learning objectives as the guiding principle, then develops the relevant evaluation criteria, and only then intentionally creates the technologies/lessons supporting those goals (Wiggins & McTighe, 2005). Backward design has been applied to many different aspects of education and is particularly useful for exploring how to best implement certain technologies and test them effectively (Jensen et al., 2017). Further, scaffolding tends to be embedded, so that multiple levels of learning can build upon one another over time toward mastery of specific knowledge and skills (Childre et al., 2009).

The SAMR model can guide the selection, incorporation, and evaluation of instructional technologies, offering a range of four implementation-level options (Bauder et al., 2020; Hamilton et al., 2016; Puentedura, 2013). The substitution and augmentation levels enhance the learning activity design without changing it fundamentally; at the modification and redefinition levels, the use of the technology becomes transformative as it changes what is possible through the learning activity. While the use of technology at the modification and redefinition levels facilitates larger changes in the learning activity design, identifying technology applications at all four levels affords a “robust repertoire” and offers potential for teacher growth (Kopcha et al., 2020, p. 743). In this section, we explain how these models informed the design process and then describe our five-step process for creating and implementing a VR-based activity on classroom design in an undergraduate teacher education course.

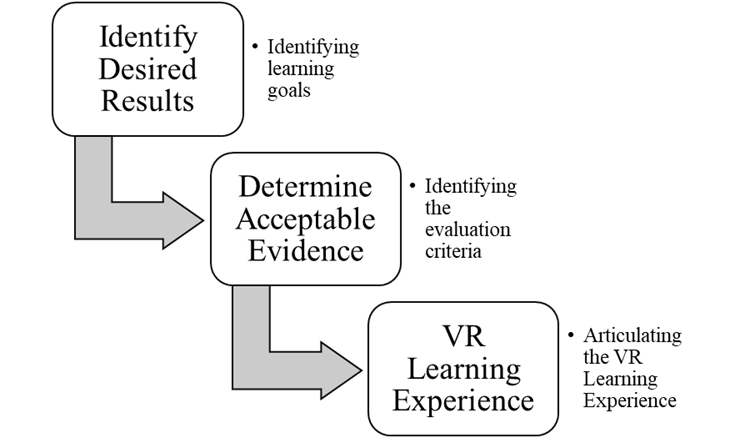

As illustrated in Figure 1, backward design was applied in the present project to keep the focus on student learning outcomes rather than the novelty of the AR/VR experience, so that the technology would be considered in support of the goals. The curriculum and instruction faculty identified an instructional problem of practice, key learning outcomes, and evaluation criteria from their educational technology course for preservice teachers. The team then explored AR/VR tools and software and built an initial design prototype using authentic assets, including materials from the course and local elementary classrooms. Thus, by beginning with the learning outcomes, the team kept the emphasis on the learning goals and how students could achieve those goals through AR/VR integration. As the team considered how the technology tools would be used to support student learning in this project, SAMR served as a useful framework for determining how the VR would be utilized in the learning experience.

Figure 1

Stages of Backward Design as Applied to VR Technology Integration

Note. Adapted from Stages of Backward Design (Wiggins & McTighe, 2005).

The team held exploration sessions that were open to faculty in the humanities, arts, social sciences, engineering, mathematics, and teacher education who were interested in re-imagining classroom experiences using AR/VR (Figure 2). The team explored with the faculty a broad range of existing AR/VR technologies, including mobile AR content creation tools such as Halo AR for creating augmented markers, VR collaboration spaces such as Spatial.io and Engage VR using the Meta Quest 2, heads-up displays of mobile AR objects with the NuEyes Pro3, gesture recognition VR systems through the Pico Neo 3 and Ultraleap, and AR projection systems through the Magic Leap One device. Faculty then brainstormed how particular features could be used to meet and transform learning objectives in their courses, focusing on lessons that students often had difficulty with, topics where visual instruction/modeling and tactile learning tend to be utilized, or concepts that are challenging with the current traditional instruction and would benefit from differentiated learning experiences. This approach facilitated faculty’s active learning about the AR/VR tools, building their agency to match the tools to potential evaluation criteria (Ma et al., 2022).

Figure 2

Faculty AR/VR Exploration Sessions

Based on the exploratory sessions, the team reviewed their notes and highlighted learning outcomes that appeared to be potential candidates for further development, including those that 1) had a clear visual component that could be supported with AR/VR, 2) addressed a core learning objective for the discipline to maximize impact, and 3) were tied to needs in the local community. One of the learning outcomes that emerged involved preservice teacher competency in designing classroom spaces that support productive, accessible, and safe learning environments, as articulated in the following Texas Education Agency Standard:

Texas Teacher Standard 4. Learning Environment.

B. Teachers organize their classrooms in a safe and accessible manner that maximizes learning. (i) Teachers arrange the physical environment to maximize student learning and to ensure that all students have access to resources. (ii) Teachers create a physical classroom set-up that is flexible and accommodates the different learning needs of students (Texas Administrative Code 149.1001, 2024).

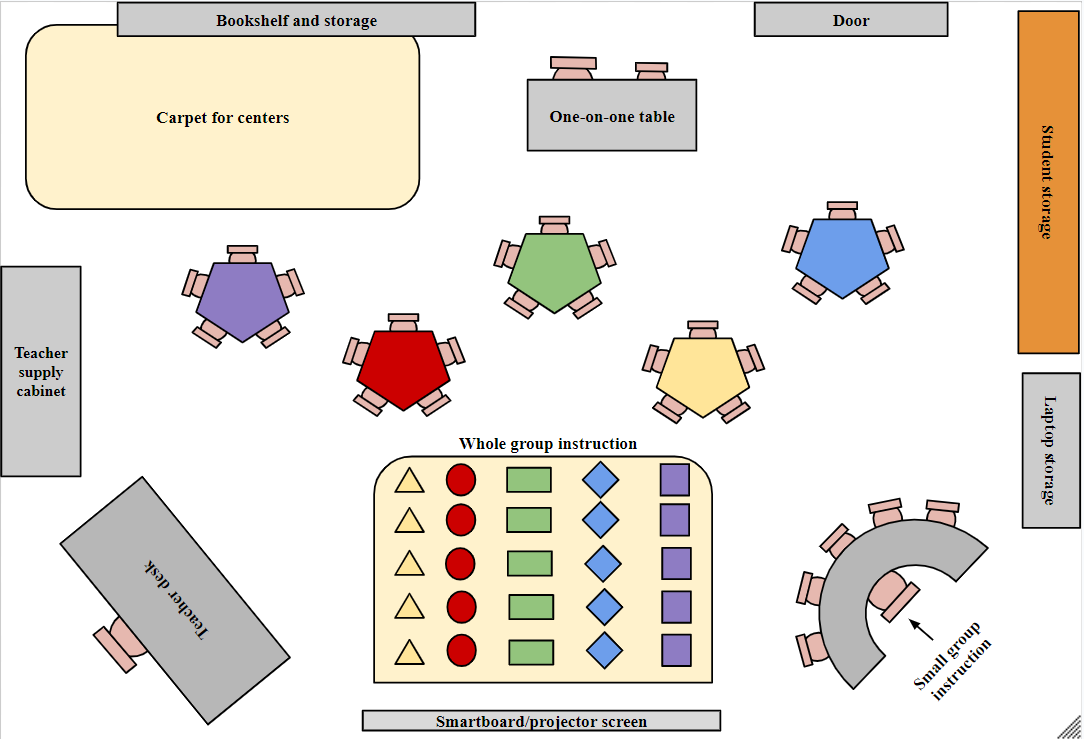

Students were taught and prepared for this competency in an educational technology course for preservice elementary grade teachers. The original assignment incorporated in-person site visits in which the preservice teachers visited local elementary schools and noted the furniture, décor, layouts, and instructional materials in practice. Upon returning from their site visits, preservice teachers wrote reflections about the spaces, identifying aspects that seemed effective and ideas for improvement. They then used Google Drawings to generate approximate classroom floorplans with individual and group learning spaces. They used color coding and shapes to indicate practical items like teachers’ desks, storage, technology, and instructional materials (see Figure 3). The preservice teachers were also prompted to consider the potential pathways for movement and flow of students and teachers.

Figure 3

Example Google Drawing Classroom Floorplan

With this assignment focus, the team considered current challenges in the assignment and ways in which AR/VR could make it more experiential and practical. With limited in-person site visit time, students had difficulty remembering aspects of the classrooms and translating their observations into their Google Drawings floorplans. The 2D exercise format was also limited in conveying logistical considerations of classroom design, resulting in common student mistakes in furniture placement, entry/exit, layout/flow, and power supply. The original version of the assignment had a sharing and reflecting component, but other students had a difficult time visualizing someone else’s design from a 2D blueprint alone, especially if they had not visited the same physical classroom site.

A 3D VR-based version of the exercise could afford simulated experiential exploration of student classroom designs in more immersive ways. The team discussed how the VR-based tools could support varied types of furniture, instructional materials, and décor, enabling students to select from a library of options (e.g., single square desks versus two-seat desks versus round group tables). Visualizing a wide range of possibilities could facilitate imagination beyond what the preservice teachers would see in their specific site visits while also providing opportunities for making classroom design decisions that they might be presented with when they enter the field. The team also considered how a VR screencast system could enable students to share their classroom design with the rest of the class and narrate/explain their design choices. In this way, they would have to explain their design decisions, which could support greater intentionality in their thinking about their plans. Such screen-sharing functionality could also enable the teacher education faculty to provide real-time feedback on the students’ designs.

As the team began prototyping tools, they tried to step into the role of the learners and course context to promote empathetic design and authentic learning. Adding the VR component to the 2D design assignment was a clear goal, but the team had to decide whether to create a classroom design tool that ran entirely in a VR headset or a computer program that could create 3D, VR-viewable classroom designs. The team decided to create a computer-based classroom floorplan design tool for three reasons. First, because students had already drawn their layouts in 2D on a computer, they could more easily convert those layouts in a 3D computer-based tool than reconstruct them in a point-of-view VR setting. Second, the task force felt that a computer-based system would be easier to learn than a VR design tool, so less time would need to be spent on training. Lastly, the logistics of providing everyone time in a VR headset were seen as a challenge, whereas the computer-based tool could allow students to design in class or out of class.

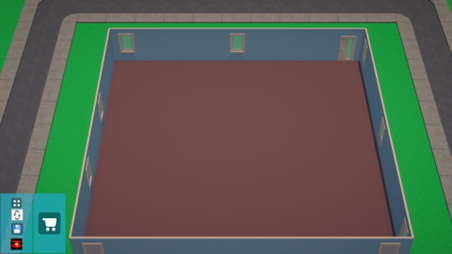

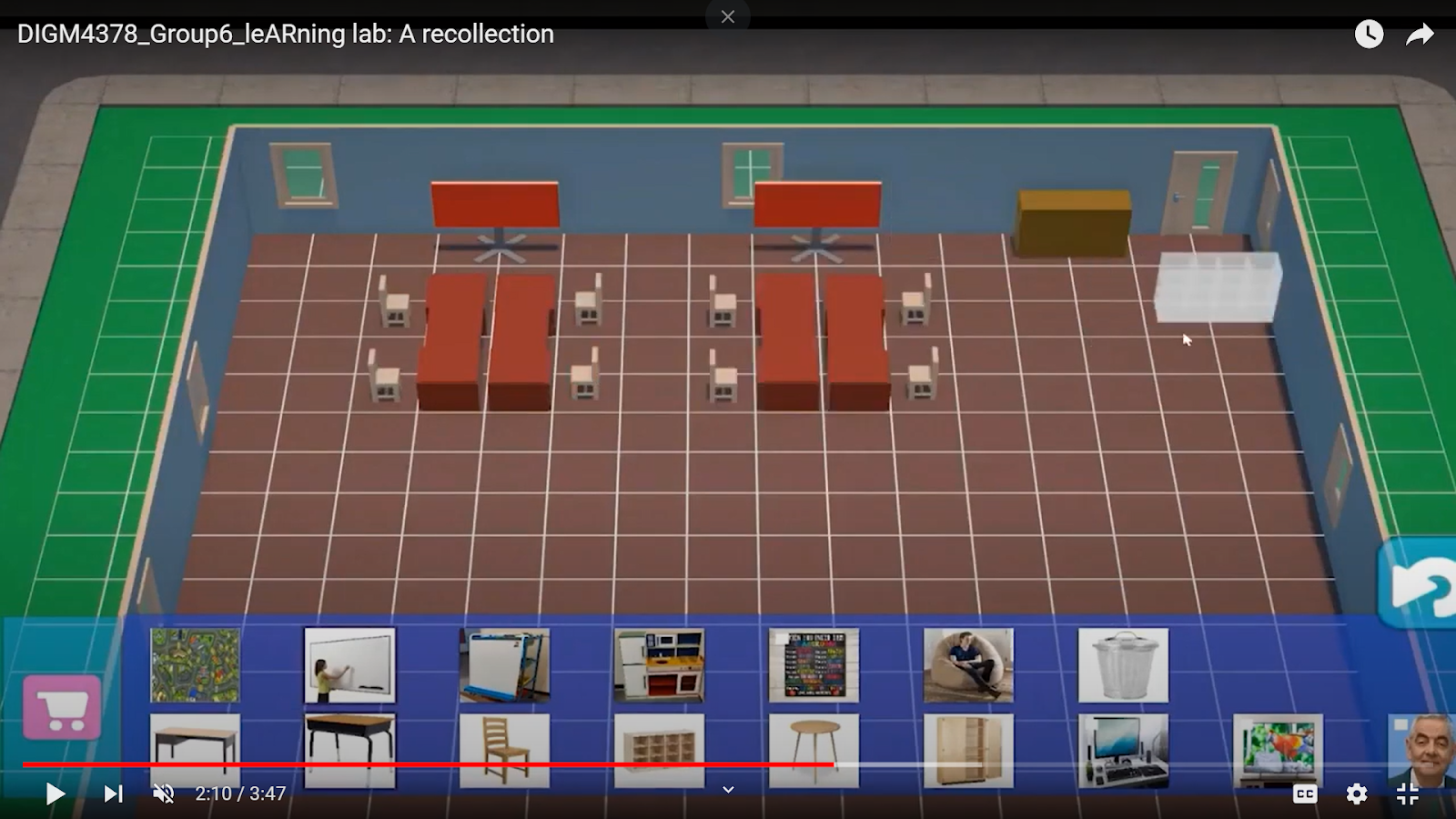

The programming team on the task force created the VR development program in Unity. The instructional design expert and the computer science programmer visited a local elementary school together and observed multiple classrooms to familiarize themselves with 1) the size and proportion of the learning environment; 2) the size and proportion of classroom furniture such as desks, chairs, interactive whiteboards, and storage; 3) available space for elementary students to move around the room; and 4) windows, doors, and points of entry/exit. The team discussed and agreed upon an initial set of dimensions for the floorplan template that would approximate the relative scale of the local elementary-level classrooms. Then, manipulable virtual objects representing desks, chairs, interactive whiteboards, storage, rugs, and bookshelves were added as options in the tool (Figure 4). Rotate and delete functions for objects were also added for ease of use.

In addition to the features/assets needed to design the room, the tool also had to include a VR viewing mode in which one could switch from the overhead view to a first-person point of view from a particular spot in the classroom. The program also included button-based movement functions to allow someone to move in VR. Lastly, the program had to support save/load features so that students could load their specific classrooms. The team saw the embodied viewing of the design in VR as more important to student learning than designing in VR, which would have required students to learn a whole new creation platform and set of interactions. The tool was finalized and installed on the computers in the teaching lab for the course and was also made available for students to download on their own computers. Once students finished and saved their classrooms, their floorplan designs could be viewed through Meta Quest 2 headsets. They could also move around in the VR space, and their classmates outside of the headset could see what they were doing on the computer monitor.
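To make the tool's core operations concrete, the following is a minimal sketch of a floorplan data model with place, rotate, delete, and save/load operations. It is an illustration only and not the task force's Unity implementation: the class names, the JSON file format, the use of meters, and the 9 × 7 room dimensions are all assumptions introduced here.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class PlacedObject:
    kind: str          # e.g., "desk", "chair", "rug" (hypothetical labels)
    x: float           # distance from the room's corner, in meters (assumed unit)
    y: float
    rotation: int = 0  # degrees, kept in the range 0-359


@dataclass
class Floorplan:
    width: float
    depth: float
    objects: list = field(default_factory=list)

    def place(self, kind, x, y):
        """Add an object, rejecting positions outside the room template."""
        if not (0 <= x <= self.width and 0 <= y <= self.depth):
            raise ValueError("object must sit inside the room")
        obj = PlacedObject(kind, x, y)
        self.objects.append(obj)
        return obj

    def rotate(self, obj, degrees=90):
        """Rotate an object in place, wrapping around at 360 degrees."""
        obj.rotation = (obj.rotation + degrees) % 360

    def delete(self, obj):
        """Remove an object from the floorplan."""
        self.objects.remove(obj)

    def to_json(self):
        """Serialize the whole design so it can be saved and reloaded."""
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text):
        """Rebuild a design from its saved JSON text."""
        data = json.loads(text)
        plan = cls(data["width"], data["depth"])
        plan.objects = [PlacedObject(**o) for o in data["objects"]]
        return plan


# Hypothetical usage: build a small design, then save and reload it.
plan = Floorplan(width=9.0, depth=7.0)
desk = plan.place("desk", 2.0, 3.0)
plan.rotate(desk)
restored = Floorplan.from_json(plan.to_json())
```

Keeping the saved design as plain data, separate from how it is rendered, is one way the same file could drive both an overhead editing view and a first-person VR view.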

Figure 4

Unity 3D Classroom Building Tool: Blank Template (top), Placement of Objects (middle, bottom)

The VR-based activity was implemented in the educational technology course during the Fall 2023 semester, with 66 preservice teachers enrolled across two face-to-face sections. This 16-week, one-credit-hour undergraduate course was taught by a graduate teaching assistant. The activity was implemented over four weeks midway through the course.

Week 1. In the first week, the instructor introduced key concepts about learner-centered classroom design layouts, such as arranging table groups to support collaboration and social learning and providing individual student spaces for reflection and focus. The class reviewed the related state guidelines for core competency, which state, “The teacher knows how to establish a classroom climate that fosters learning, equity, and excellence and uses this knowledge to create a physical and emotional environment that is safe and productive[1].” The instructor showed photographs from local elementary classrooms and facilitated a discussion on what the preservice teachers noticed in the classroom arrangements and furnishings. The preservice teachers then sketched out rough drafts of potential classroom designs. They were provided with the following scenario to guide their classroom designs:

You teach a second-grade self-contained class that has 23 students. In your classroom blueprint, include seating for every student (individual workspaces), storage materials, a small group area, teacher workspace, technology screen, space for centers/stations, student storage spaces, and space to collect assignments.

The preservice teachers used Google Drawings to create the 2D rough draft classroom design ideas (Figure 5).

Figure 5

2D Classroom Design Rough Drafts

Week 2. The preservice teachers completed observations in local elementary classrooms the following week. They were prompted to notice elements of the classrooms they visited and to identify similarities and differences between those classrooms and their drafted classroom designs. They noted aspects that they would add, remove, or otherwise modify to improve the authenticity of their designs based on their field observations.

Week 3. The preservice teachers returned to the course lab, where they used the classroom building tool to turn their 2D classroom design ideas into 3D versions that would be ready for VR exploration (Figure 6). The design team conducted a 10-minute training session that walked through how to use the tool to build classrooms, select and rotate objects, save the files, and submit them for the design team to load onto the Meta Quest 2 headsets.

Figure 6

In Class Design Activity (Top), Completed Classroom (Bottom)

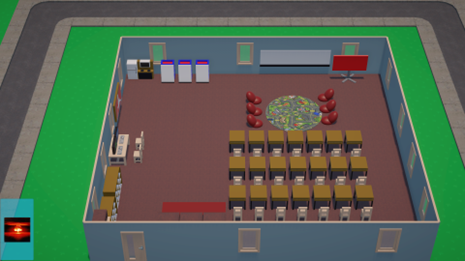

Week 4. Because the class was large relative to the number of available design team members, computers, and headsets, the preservice teachers were divided into groups. One student at a time in each group put on a Meta Quest 2 headset and had their classroom loaded into the VR view. They were instructed to look around, move to different places in the room, and narrate their thoughts to their group members. They noted things like furniture placement, movement through the room, and how accessible certain areas were for them to be able to work with students and for students to be able to learn productively. The group discussed and compared different layouts, noted examples where learner-centered classroom design concepts were illustrated, assessed the authenticity of the classroom designs based on their field observation experiences, and offered suggestions to improve the designs (Figure 7).

Figure 7

Virtual Reality Classroom Implementation: Student Explaining their Classroom Design (Top), Virtual Reality Walkthrough Screenshot (Bottom)

Each preservice teacher’s exploration of their classroom design lasted about five minutes. This individual walk-through in front of a larger group enabled other members of the class to follow along as the designer described their simulated classroom. The preservice teachers could thus consider a range of classroom designs and reflect upon ideas for how they could modify their own classroom design. Finally, the preservice teachers completed a post-activity reflection guide in which they recorded the observations about classroom design that they gleaned across the four-week pilot implementation and offered suggestions to the design team for ways to improve or expand the activity.

The entire team discussed observations from the pilot implementation with the instructors and students and reviewed the suggestions offered in the preservice teachers’ post-activity reflections. The team generated a list of potential updates to the classroom building tool, and the developers explored the feasibility of the suggestions. The team then agreed upon the revisions for the next iteration of the activity.

This programmatic effort offers several key insights for how applied backward design can be integrated into a process for faculty to identify learning goals and outcomes and ultimately help create authentic learning experiences through AR/VR. The first takeaway has to do with team construction and institutional support. An interdisciplinary team that was financially supported in terms of hardware and time was essential to developing and implementing the activity. Having a wide range of expertise, including technical and pedagogical expertise, was necessary at each step. The instructional design faculty were instrumental in introducing backward design and ensuring that we led with the learning outcomes to adhere to that process (Wiggins & McTighe, 2011). On the technological/research side, there were team members who could explain the technology to newcomers, develop prototypes of the tools, and create educational assessments to test certain outcomes. It was also helpful to have the AR/VR hardware available on-site for hands-on exploration, which fostered conversations within the team about what potential features could be harnessed to support student learning in different ways. The project overall benefitted from broad expertise and time for the team to navigate the challenges of developing and implementing AR/VR in a course.

The second takeaway relates to the initial focus on targeting specific learning objectives. By identifying the instructional need first, the team was able to work toward that goal with the technology and with faculty buy-in. Research suggests that university faculty have been slow to integrate technology into the classroom (Aldunate & Nussbaum, 2013; Johnson et al., 2016; Moser, 2007). Using backward design can create a shared goal with faculty to work with the technology, to stick with the technology even where there are challenges, and to actually implement it in the classroom. Using backward design, the team established explicit learning targets based on need and designed a learning experience for preservice teachers to transfer knowledge and skills, make connections, and demonstrate their learning (Wiggins & McTighe, 2011).

The exploration sessions enabled faculty to actively explore the technology themselves so they could generate their own productive experiences with the technology. By starting with a demonstration of a broad range of AR/VR tools and capabilities, the faculty were able to see multiple possibilities that could align with their specific learning outcomes and needs. Rather than beginning with the capabilities of AR/VR, our team initiated the project from the needs of students and the institution; thus, the faculty did not feel compelled to fit a specific technological system into their instruction. By asking faculty to think about some of the practical limitations of existing practices (e.g., time, cost of site visits) and learning outcomes that may not fully get activated (e.g., limitations of 2D blueprint visualizations), VR came to be viewed as a supplement to existing methods for teaching classroom design and scaffolding those activities (Childre et al., 2009).

A third takeaway is the value of utilizing the SAMR model to think about how to select the best technology for the desired learning outcomes and utilize it at the appropriate time. Identifying the learning outcome was the first step toward thinking about technology, but the SAMR model was what became useful as a brainstorming, explanatory, and decision-making tool for understanding the possibilities of what to build. For example, the team chose to utilize VR technology rather than AR technology. Because the learning outcome was concerned with the design of safe and effective spaces in a number of different contexts, VR was deemed the more appropriate choice: it could enable visualizations of new spaces that the preservice teachers created, rather than being an overlay on current spaces. The team concluded that using the physical space of AR would have redefined the activity in ways that may have distracted/detracted from the desired learning outcome.

Overall, the activity straddles the line between modification and redefinition of the exercise (Puentedura, 2013). The classroom design tool enables students to see the relationships between objects and space in real time. It also redefines the possibility of the task altogether, enhancing the learner’s perspective of the classrooms they designed. They were able to sit in students’ seats to see their view of the board, assess the teacher’s view of the whole class from their workstation, and move through the flow of various learning stations, storage areas, and other classroom elements. Through the backward design process, student learning goals grounded the project. The VR aspects of the activity enabled the preservice teachers to actively construct and refine their own understandings about classroom design. Further, backward design set a foundation for the VR experience, helping the team identify and understand related learning criteria.

One surprise that came out of this process was the overall time spent in VR. Because the activity was built off the drafted 2D designs and converted to 3D VR, the emphasis was more on the personalization of the design rather than on spending considerable time in VR. Most of the work of the team was spent designing the classroom building tool through site visits, modeling of the 3D objects, and working on the templates, layouts, and save/load features. The critical components of the VR involved visualizing each student’s specific design and projecting their walk-throughs to an external monitor for narration purposes.

The finding that brief periods in VR benefit students has important implications for future VR implementations in the classroom. First, if the theoretical approach, guided by SAMR, calls for a short technological implementation, that is perfectly acceptable and should be embraced even if the nominal goal is greater technological adoption. If short implementations that build on traditional assignments/technologies are what success looks like in a given instance, then that is what should be implemented. Second, if short experiences in VR prove to be successful, then schools may not need to devote as many resources to the hardware as previously thought (e.g., about 10-12 headsets per classroom versus one per student). This stands in contrast to studies that involved extended time spent in VR (Han et al., 2023; Markowitz & Bailenson, 2021). Third, shortening VR interactions could be an important mitigating factor for barriers to adoption, such as motion sickness, eye strain from extended use, and class time limitations.
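The headset-to-student tradeoff can be sketched with simple arithmetic. The function below is an illustration under stated assumptions rather than a planning model from the project: it assumes students take turns in rounds, one walk-through of about five minutes each, and it ignores setup, file loading, and headset fitting time. The 33-student section size assumes the 66 enrolled students were split evenly across the two sections, which the case does not state.

```python
import math


def walkthrough_minutes(students, headsets, minutes_each=5):
    """Class time needed if every student takes one walk-through in turn.

    Students rotate through the available headsets in rounds, so the total
    time is the number of rounds multiplied by the length of one walk-through.
    """
    rounds = math.ceil(students / headsets)
    return rounds * minutes_each


# Hypothetical section of 33 students (assumes an even split of 66).
for headsets in (1, 11, 33):
    print(headsets, "headsets:", walkthrough_minutes(33, headsets), "minutes")
```

Under these assumptions, 11 shared headsets would need three rounds (15 minutes), compared with a single five-minute round when every student has a headset, which suggests why short VR experiences reduce the pressure to buy one device per student.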

This article describes one attempt to explicate a backward design process for AR/VR tool integration. Design teams can build upon the takeaways and explore potential AR/VR applications across SAMR levels, seeking greater authenticity in the classroom when the technology affordances are appropriately matched to certain learning objectives. Because the team was new, its working practices around development and integration were largely established as the process unfolded, and the team made adjustments and developed solutions as problems arose. Because there were differing acquisition, purchasing, and direction decisions to resolve, interdisciplinary expertise and strong, committed leadership from the institution and within the team were essential. In terms of the implementation, instructors could see the value of the technology but had to acknowledge the practical need for training sessions and time devoted to the implementation. The re-envisioned classroom design activity expanded from two to four weeks. During the pilot, some students experienced frustrations in using the classroom building tool, such as slow load times, lack of confidence in their ability to learn the tool, and difficulties in selecting objects and placing/rotating them effectively. This hesitation and need for efficacy related to the tool highlighted the importance of training and instructor encouragement to help students push through their hesitancy in learning a new technological tool.

Lastly, successful implementations need to consider replicability and scalability. The need to obtain gaming computers, VR headsets, and connecting cables could mean that long-term support for instructors is necessary on the VR side. The underlying software and creation tool, however, can be more easily duplicated. The team is exploring design tools that can be utilized with or without the VR, such that the 3D design tool could be a standalone supplement absent the VR hardware. Future builds could also modify these capabilities in interesting ways, such as exploring ways to improve the implementation structure and ratio of VR for students, how this activity could be adapted to other teaching formats like asynchronous or remote learning, whether additional VR interactivity with objects could further learning outcomes, and how the process differs for formal learning experiences compared to informal learning experiences. Continuity in the team and knowing that there will be additional implementations helps to iteratively improve the tool based on feedback and shared experience.

The process for creating a custom application and full-scale implementation was not easy, and future endeavors should anticipate some of the challenges that will arise and set expectations accordingly. There were a myriad of decisions that needed to be made, and at various points the team could have defaulted to pre-developed programs, held rigidly to its original vision, asked students to do more in VR because that was the nominal goal, or ignored some of the implementation concerns or ancillary features (e.g., sharing/screencast, movement functions). By adhering to backward design principles, teams can ultimately make design and implementation decisions that support learning outcomes through AR/VR, and hopefully continue to identify effective places for authentic learning using these technologies.

[1] https://www.tx.nesinc.com/Content/StudyGuide/TX_SG_SRI_160.htm