Assistant Director of Instructional Services: The Assistant Director of Instructional Services conceptualized the training, led the team's design effort, and contributed to designing its learning activities.

Senior Instructional Designer: The senior instructional designer helped conceptualize the training, designed learning activities, identified appropriate tools, and provided just-in-time support to participants during implementation.

Instructional Technology Consultant: The instructional technology consultant managed all technology logistics to ensure the training ran smoothly and provided just-in-time support to participants on both the tools used in the training and the platform used to deliver it.

Graphic Designer: The graphic designer created all visual elements for the training, including banners, accolade visuals, badges, and the overall branding.

We are part of the Instructional Services team. Our mission is to support faculty and staff at the School of Public Health across a wide range of teaching-related needs, from course design and planning to instructional technology, media development, and learning analysis, and in doing so to sustain the school's reputation for excellence in teaching.

Since ChatGPT's public release in November 2022, generative AI (GenAI) has taken the world by storm. Its use cases span many areas, and education is no exception (e.g., intelligent tutoring systems (Nye, 2015) and adaptive learning platforms (Fan et al., 2021)). Alongside their impressive capabilities, AI-driven systems such as GenAI present undeniable challenges, ranging from privacy issues (Barocas et al., 2019; Holmes et al., 2019; Misiejuk & Wasson, 2017) to the spread of biased and inaccurate information (Pierres et al., 2024; Roshanaei, 2024; Rudolph et al., 2024). Regardless of one's stance, it is increasingly clear that understanding how GenAI works and how to use it responsibly has become a core literacy for educators and professionals alike.

AI literacy is an important area of research and practice (Airaj, 2024; Javed et al., 2022; Pierres et al., 2024). It aims to dispel misconceptions about AI, explain clearly how AI-driven systems such as GenAI work, and show how to leverage them while remaining mindful of their limitations. As a contribution to AI literacy, our team of designers created and implemented an online, self-paced training titled “When Life Gives You LLMs, Make LLMonade.”

With this design case, we do not aim to provide theoretical justification for every design decision, nor do we position this work as a traditional empirical study in which theory drives method and analysis. Instead, our goal is to offer a rigorous account of practice that communicates the kind of situated, experiential knowledge designers actively build through real-world work (Boling, 2010; Boling & Smith, 2009; Gray, 2020). This stance reflects an understanding that design knowledge is pragmatic, contextual, and shaped by the unique characteristics of the environments and situations in which design work takes place. As Gray (2020) notes, generating interest among other designers requires foregrounding this pragmatic and non-deterministic nature of design knowledge, rather than pursuing the goal of building or validating theory.

Our contribution is intended to be of interest not because it claims generalizability, but because it offers a grounded narrative of design in action and contributes to a facilitative, rather than prescriptive, tradition of instructional design. This philosophical orientation prioritizes adaptability, responsiveness to context, and the lived realities of design work. Accordingly, we do not offer this design case as a fixed model, but as a flexible approach shaped by the specific conditions, needs, and constraints of our setting. By describing our design decisions and sharing our design outcome, we aim to offer a situated example that other designers might draw on to build their own repertoire of precedent knowledge (Boling, 2021; Boling et al., 2024; Boling et al., 2025). Such precedent knowledge serves not as a prescriptive model to follow, but as a source of insight, comparison, or inspiration.

We designed this training during a period of rapid GenAI adoption in industry and education. Outside our institution, tools like ChatGPT and DALL·E were receiving widespread attention, and interest in their use was growing across sectors. By late 2023, GenAI features had been integrated into platforms like Microsoft 365 (through Copilot) and Google Workspace (through Duet AI, later renamed Gemini), allowing users to access capabilities such as text generation, summarization, and image creation directly within familiar applications like Word, Excel, Docs, and Gmail. This growing presence made it clear that these tools weren't just gaining popularity; they were quickly influencing how people approach routine tasks across professions. Educators, professionals, and organizations were starting to explore how to use these tools responsibly, as questions around ethics, productivity, and practical use gained attention (Hodges & Kirschner, 2024; Mollick & Mollick, 2023; Storey et al., 2025).

Inside our school, interest in GenAI was emergent but not widespread. We work in a large, research-intensive school of public health where faculty, staff, and students manage a wide range of responsibilities, such as teaching, research, service, and administrative work, all of which rely heavily on writing, data analysis, presentations, and collaboration tools. A few faculty members had started experimenting with GenAI tools, such as using chatbots in their courses, and reached out with follow-up questions about how to extend or refine those uses. Overall, however, GenAI was not yet a common topic of discussion.

Institution-wide, professional Communities of Practice—cross-campus groups that include members from teaching and learning with technology, academic innovation, instructional design, the library, and other support units—had begun exploring GenAI, creating an opening for locally grounded professional development. In these spaces, members were exchanging use cases, asking critical questions about ethics and pedagogy, and trying to understand where GenAI might fit into their work.

Rather than promote GenAI or assume its value, our goal was to offer a low-pressure space for exploration, especially for those who were curious but cautious. Our design choices were grounded in responsiveness to this internal context, with attention to the time constraints and varying levels of GenAI familiarity among our audience. We wanted to help participants engage with the tools directly, in ways that felt approachable, hands-on, and relevant to their day-to-day work. By using microlearning, light gamification, and real-world scenarios, we aimed to lower the barrier to entry and support thoughtful experimentation.

Instructional design practice involves navigating ill-structured and complex situations, which require making sound design decisions at each stage of the design process (Stefaniak, 2023; Stefaniak & Tracey, 2014). In this case, the evolving nature of GenAI and the wide range of participant backgrounds and comfort levels posed a particularly complex challenge. Our design team had to consider multiple factors simultaneously, including: (1) topic selection and complexity; (2) types of learning activities; (3) duration and pacing; (4) engagement strategies; (5) scaffolding and support; (6) delivery format and platform; and (7) degree of interactivity.

At the core of our decision-making process was the team’s design precedent knowledge (Boling, 2021), which shaped our approach more than theory. We drew from previous successful initiatives, particularly an earlier 10-day professional development challenge focused on accessibility, delivered via Yellowdig. That initiative relied on microlearning and gamification, which proved effective in supporting flexible, self-paced engagement. Drawing on that experience, we adopted a similar structure for this training and adapted it to a different platform—Slack—to support hands-on, exploratory learning with GenAI tools in a format familiar to our participants.

Therefore, our design was guided primarily by precedent knowledge: a shared but individually shaped collection of experiences that informed our decisions. While this knowledge is often unique to each designer, in this case there was meaningful overlap across the team. Although we did not intentionally apply theory during the design process, some elements, such as peer interaction in Slack and hands-on experimentation, reflect principles found in the Community of Practice and Community of Inquiry frameworks. We reference these theories in our narrative not to suggest that theory guided our design, but to illustrate how certain concepts can surface through reflective practice. Our aim is to offer readers a way to see how theory may be observed in practice, even when designers are unaware of a particular theory or recognize its alignment only in hindsight.

If any part of our design leaned most explicitly toward a theory-driven approach, it was gamification. However, this decision wasn’t solely grounded in academic literature or theoretical models. Instead, it was primarily based on the team’s previous successful implementation of a similar project, as described above.

Although we did not conduct a formal needs analysis, our ongoing work with faculty and staff provided a strong foundation for understanding their roles, responsibilities, and likely pain points. As GenAI tools gained visibility, it became clear that some form of timely, context-specific training was needed. While some schools and units on campus had begun offering GenAI-focused sessions, none were tailored to our school's context. Supporting faculty and staff in navigating emerging technologies is part of our ongoing mission, and this project offered an opportunity to provide that support in a form tailored to our school.

Given our accelerated timeline—we aimed to launch at the start of the Fall 2024 semester—we relied heavily on informal user feedback and internal discussions. Early in the process, we engaged a colleague with limited prior interest in GenAI as a design persona. Her perspective helped us empathize with participants who might be hesitant, overwhelmed, or unconvinced of GenAI’s relevance. We asked her questions like: “What makes you hesitant about GenAI?”, “What would make you want to try these tools?”, “How easy is this to follow?”, and “Can you see yourself using this in your work?” When asked why she hadn’t engaged with GenAI, she replied, “What am I missing?” That response highlighted a key design insight: not everyone was avoiding GenAI because it was too complex; some simply didn’t see its value yet.

This led us to prioritize a learning-by-doing approach. We intentionally shifted away from abstract or technical explanations and instead focused on helping participants discover practical applications that felt relevant to their own work. We reduced cognitive barriers by emphasizing quick wins and approachable activities. Our goal was to demystify the tools, not to exhaustively explain their architecture or inner workings.

In addition, we included a self-assessment question in the registration form, adapted from user categories described in Fang and Broussard’s (2024) article on GenAI adoption in course design.

These categories included stages such as AI Illiteracy, in which individuals are aware of AI tools but unsure how to use them, and AI Hallucination, in which users embrace AI but fail to critically assess its outputs. While this wasn't a formal diagnosis, it gave us a snapshot of participants' comfort levels and helped us provide just-in-time support during the training. For instance, some participants asked how OpenAI's models were being integrated with platforms they already used, such as Word or Excel. We also monitored Slack discussions closely and made small, real-time adjustments during the training. When one participant encountered an issue with voice input in Microsoft Copilot, we recommended switching to OpenAI's ChatGPT app, which supports voice input. These informal, adaptive strategies allowed us to stay responsive to participant needs within the practical constraints of our context and timeline.

These iterative interactions, combined with insights from our design persona, validated our decision to foreground experiential, hands-on learning, or learning by doing (Dewey, 2008). While foundational knowledge of GenAI concepts such as machine learning is important, we chose to emphasize the professional relevance and immediate application of GenAI tools within participants' roles, because participants were more likely to persist when they could clearly connect an activity to a meaningful task in their work. Accordingly, we provided just enough essential information for a general understanding of GenAI and paired it with quick, practical activities that let participants experiment with the tools in their respective roles.

Our topic selection reflected this emphasis. Drawing on our collective experience and GenAI use cases, we identified ten daily themes that offered both breadth and depth, allowing participants to explore different tool features while focusing on practical application. The 10-day structure was deliberate—long enough to cover a range of tools, short enough to remain manageable. Each day introduced a targeted topic paired with a concrete activity:

Day 1: Prompt engineering for beginners

Day 2: Advanced prompt engineering techniques

Day 3: Voice-activated AI

Day 4: Using images as inputs

Day 5: File uploads and document analysis with AI

Day 6: Plug-ins, integrations, and AI assistants

Day 7: Research assistance with GenAI

Day 8: AI-augmented coding and spreadsheets

Day 9: AI for slide presentation creation

Day 10: Building custom AI applications

Our final design outcome was a self-paced, asynchronous online training delivered on Slack, a cloud-based communication platform. Our design intent was grounded in the objectives listed below:

Encourage discovery and experimentation with GenAI tools.

Provide practical skills to enhance productivity.

Engage participants through gamification (points, badges).

Build a collaborative learning community on Slack.

Below, we describe in detail what our training involved, its key features, and the design decisions associated with each.

Our decision to use Slack as the platform for collaborative engagement was informed by a combination of design precedent knowledge, institutional infrastructure, and industry examples, such as Slack's own partnership with Almost Technical to deliver a GenAI microlearning program directly within Slack. Around the same time, a team member attended a presentation highlighting a GenAI training developed for Slack employees, which was delivered directly on Slack and praised for its effectiveness. This example offered a concrete model of how Slack could support hands-on, GenAI-focused professional learning. Additionally, Slack is a university-supported platform, making it a sustainable and accessible choice within our institutional environment.

As noted above, our team had previously used Yellowdig to deliver a 10-day accessibility challenge. That training integrated microlearning, gamification, and peer-to-peer interaction, and received strong participant feedback for its flexibility and social learning. Because Yellowdig was unavailable for this initiative, we selected Slack as a comparable alternative that could support similarly structured engagement and that many participants already knew how to use.

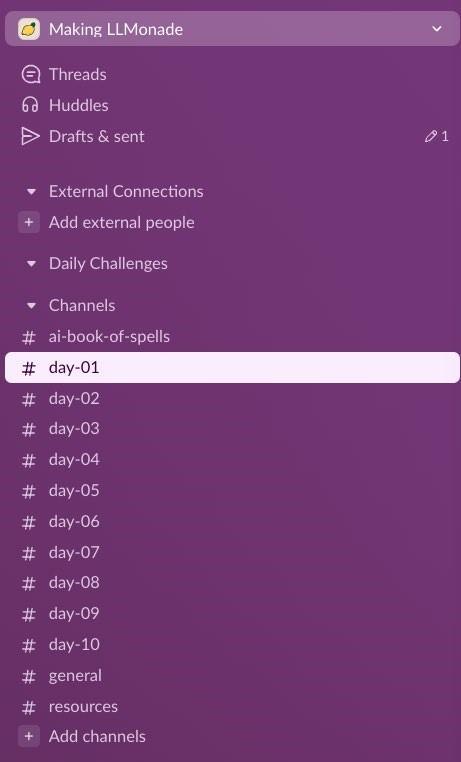

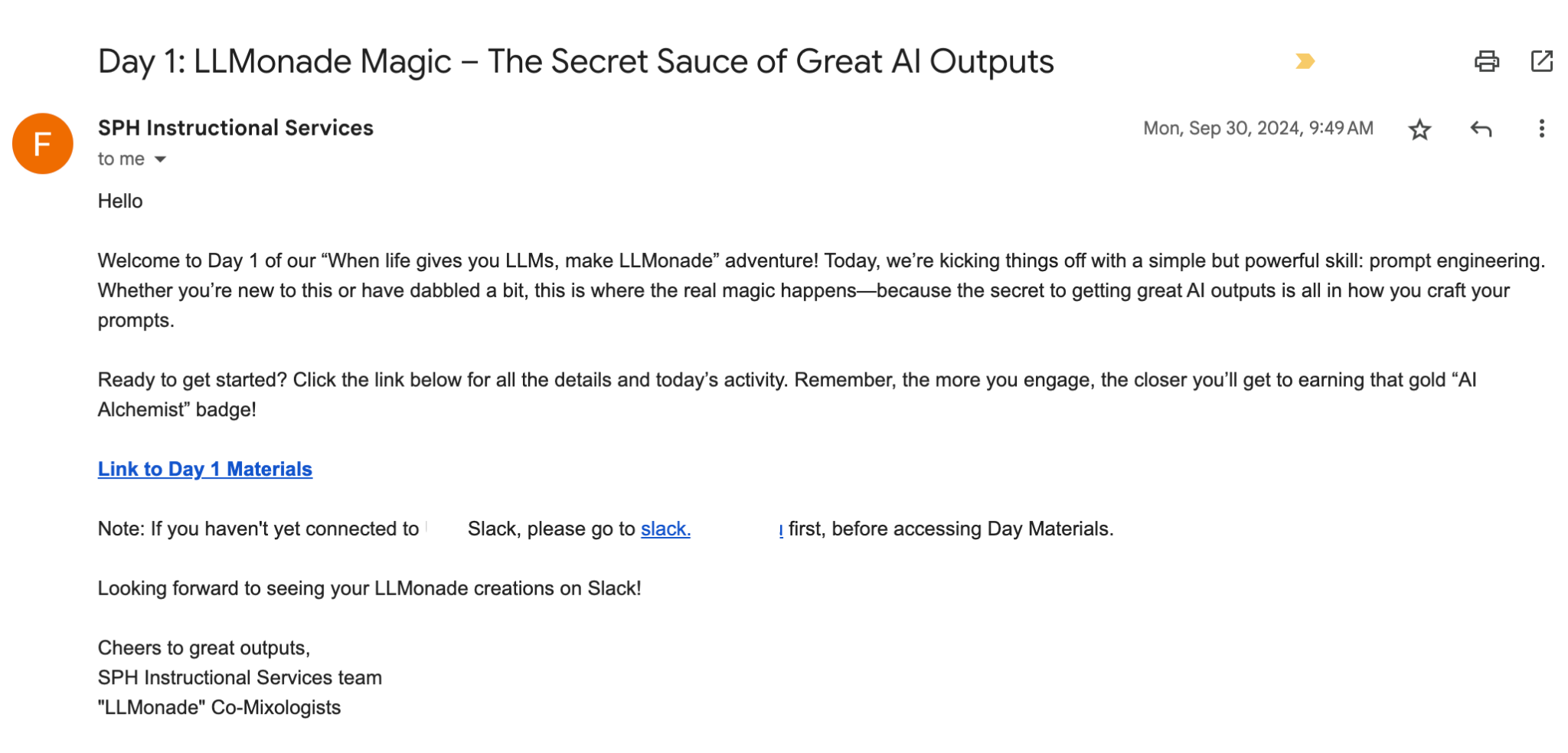

To structure the experience, we created a separate Slack channel for each day of the training (see Figure 1), allowing participants to follow a clear daily progression, share their responses, and engage asynchronously with peers. Each day, participants received a personalized email (see Figure 2) introducing the topic, highlighting key takeaways, and linking to the relevant Slack channel. These emails reinforced the playful tone of the training while offering clear entry points for engagement. For example, the Day 1 message, titled “LLMonade Magic: The Secret Sauce of Great AI Outputs,” framed prompt engineering as a foundational skill and invited participants to begin experimenting. Each message also reminded participants how their activity contributed toward earning points and digital badges. For those who preferred not to engage on Slack, we provided PDF versions of the content so they could follow along independently. This approach allowed for flexible, self-paced engagement.

Figure 1

The training structure on Slack

Figure 2

Example of a daily email that participants received

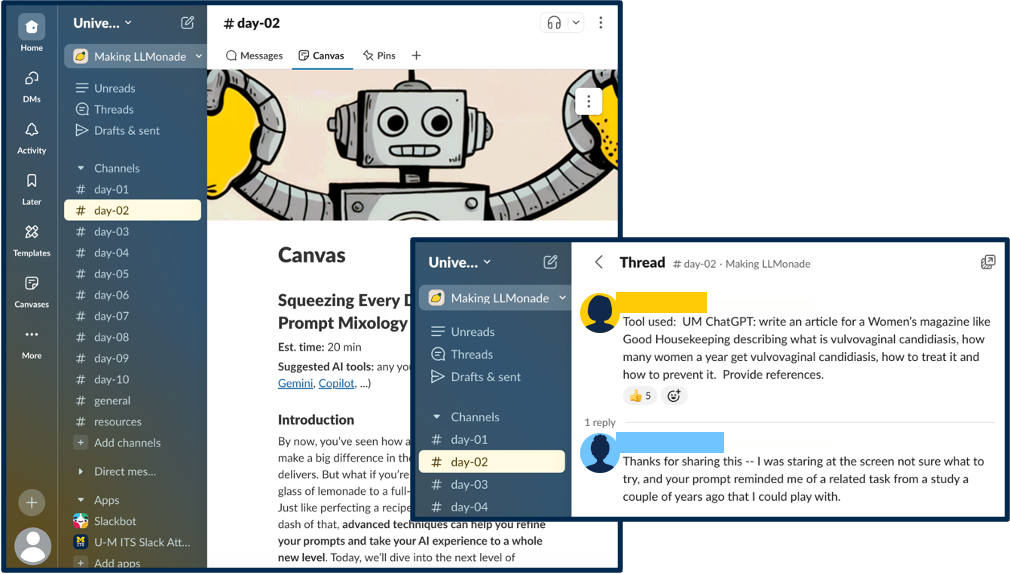

Figure 3

Example of collaboration among participants

While this collaborative design element mirrors principles outlined in frameworks such as the Community of Practice (Lave & Wenger, 1991) and the Community of Inquiry (Garrison & Akyol, 2013), it was grounded primarily in the team's precedent knowledge and practical experience designing similar professional development experiences. Through the daily Slack channels and structured prompts, we fostered a learning community where participants could learn from one another by sharing questions, ideas, and use cases (see Figure 3), which embodied the collaborative, situated learning emphasized in the Community of Practice (CoP) framework. Additionally, the design supported social presence in an online setting, as participants interacted with one another and with facilitators in ways that closely resemble the social presence element of the Community of Inquiry framework. Further, the open structure of Slack conversations allowed participants to build on one another's ideas in real time, facilitating the kind of collaborative sensemaking described in recent research on digital learning environments (Durak, 2024; Kidron & Kali, 2024; McKay & Sridharan, 2024).

We incorporated gamification as a key design element to promote sustained engagement and participation. This decision was grounded in precedent knowledge from a previous 10-day challenge our team developed, which had successfully incorporated points, badges, and social interaction as motivational elements. Participants in that earlier initiative consistently reported that the game-like structure helped make the training feel more approachable, enjoyable, and worth prioritizing alongside other responsibilities.

Drawing on that experience, we designed a points-based system complemented by accolades and digital badges. Our intent was to offer multiple forms of recognition—both quantitative and qualitative—that encouraged ongoing participation without creating pressure or competition. The gamified structure was also tied to Continuing Professional Education (CPE) credits, a feature relevant for staff members whose performance evaluations include professional development.

The point system included the following components:

Registration: 50 points

Reading daily emails: 50 points per day

Completing and sharing an activity: 250 points

Posting or replying on Slack: 50 points

Emoji reactions: 10 points

Daily Slack activity: 50 points per day, with bonus points for streaks
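To make the arithmetic of the point system concrete, the Python sketch below totals a participant's points from the listed values. It assumes, since the list does not say, that activity, post/reply, and emoji-reaction points are awarded per occurrence; streak and accolade bonuses are left out because their amounts are not specified. `ParticipantLog` and `base_points` are hypothetical names for illustration, not part of any tool used in the training.

```python
from dataclasses import dataclass

# Point values from the list above. Assumption: activity, post/reply, and
# reaction points are awarded once per occurrence.
REGISTRATION = 50
EMAIL_READ_PER_DAY = 50
ACTIVITY_SHARED = 250
POST_OR_REPLY = 50
EMOJI_REACTION = 10
DAILY_SLACK_ACTIVITY = 50


@dataclass
class ParticipantLog:
    """Hypothetical per-participant tallies, e.g. one row of a tracking sheet."""
    registered: bool
    days_email_read: int
    activities_shared: int
    posts_or_replies: int
    emoji_reactions: int
    days_active_on_slack: int


def base_points(log: ParticipantLog) -> int:
    """Sum points, excluding streak and accolade bonuses (amounts unspecified)."""
    return (
        (REGISTRATION if log.registered else 0)
        + EMAIL_READ_PER_DAY * log.days_email_read
        + ACTIVITY_SHARED * log.activities_shared
        + POST_OR_REPLY * log.posts_or_replies
        + EMOJI_REACTION * log.emoji_reactions
        + DAILY_SLACK_ACTIVITY * log.days_active_on_slack
    )


# Registers, reads all 10 emails, shares all 10 activities, and is active on
# Slack every day, but never posts, replies, or reacts.
full = ParticipantLog(True, 10, 10, 0, 0, 10)
print(base_points(full))  # 3550
```

Under these assumptions, completing every daily task without any posts, replies, or reactions yields 3,550 points, which clears the Silver threshold but not Gold; reaching Gold would depend on discussion activity, streaks, or accolades.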

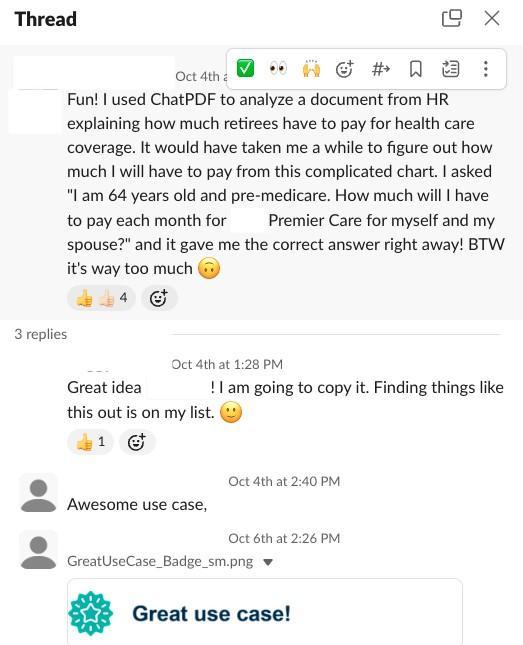

In addition to point-based engagement, participants could receive accolades for meaningful contributions. These informal recognitions highlighted behaviors that aligned with the values of the training: curiosity, creativity, resource sharing, and critical engagement. Accolade categories included Community Champion, Excellent Question, Fun/Creative Application, Helpful Resource, and Great Use Case.

Each accolade came with a small point bonus, adding an element of fun while reinforcing a culture of encouragement and mutual recognition within the Slack environment.

Digital badges were awarded based on total point accumulation and issued through Canvas Credentials (formerly Badgr), a university-supported platform that allows users to share credentials on professional networks such as LinkedIn. Badge tiers were structured as follows:

Gold: 4,000+ points (4 CPE credits)

Silver: 3,000+ points (3 CPE credits)

Bronze: 2,000+ points (2 CPE credits)

Participant: 1,000+ points (1 CPE credit)
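The tier rules can be expressed as a simple threshold lookup. The Python sketch below assumes each tier's figure is a minimum (so Silver covers 3,000 to 3,999 points) and returns the badge name with its CPE credits; `badge_for` is a hypothetical helper, not a Canvas Credentials feature.

```python
from typing import Optional, Tuple

# (minimum points, badge name, CPE credits), checked from highest to lowest.
# Assumption: each listed figure is a lower bound for its tier.
BADGE_TIERS = [
    (4000, "Gold", 4),
    (3000, "Silver", 3),
    (2000, "Bronze", 2),
    (1000, "Participant", 1),
]


def badge_for(points: int) -> Optional[Tuple[str, int]]:
    """Return (badge name, CPE credits) for a point total, or None below 1,000."""
    for threshold, name, cpe in BADGE_TIERS:
        if points >= threshold:
            return name, cpe
    return None


print(badge_for(3550))  # ('Silver', 3)
print(badge_for(999))   # None
```

Ordering the tiers from highest to lowest lets the first matching threshold win, so each total maps to exactly one badge.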

Beyond the mechanics of points and rewards, we emphasized playfulness as a pedagogical stance. The training’s title—When Life Gives You LLMs, Make LLMonade—and the themed daily activity names were intentionally chosen to spark curiosity and lower perceived barriers to entry. Rather than presenting GenAI as a high-stakes or purely technical topic, we used gamification to foster a culture of experimentation, humor, and informal learning.

In this context, gamification functioned not just as a motivational technique, but as an embodiment of our design philosophy, one that aligned with our broader goals of making GenAI literacy approachable, personally relevant, and socially supported.

Figure 4

Example of a discussion and the “Great Use Case” accolade

To ensure the training was accessible and manageable for busy professionals, we structured each day around a single, focused topic. This microlearning approach was intentionally selected to reduce cognitive load, accommodate varied schedules, and support flexible, self-paced engagement. Drawing on our team’s prior experience designing professional development for faculty and staff, we recognized that short, targeted learning segments paired with immediately actionable tasks were more likely to sustain participation and support meaningful learning.

Each daily module was designed to take approximately 15–20 minutes and included a compact but thoughtfully structured learning activity. As described in the “Collaborative Learning Space” section, participants received a personalized daily email introducing the topic and linking to the corresponding Slack channel, where content was presented using Slack’s Canvas feature. For added flexibility, downloadable PDFs were also provided.

The Canvas and PDF content followed a consistent format designed to promote clarity and encourage experimentation:

Brief introduction to the day’s topic

Pro tips for using the featured tool or technique

“What to watch for” section highlighting common pitfalls or ethical considerations (e.g., hallucinations, bias, privacy risks)

“Try it” activity prompt designed for immediate application

Key takeaways summarizing the concept and its relevance to the professional context

This predictable structure helped participants know what to expect and made it easier to fit the training into their daily routines. At the same time, the modular format allowed them to revisit or skip topics based on interest or need. Importantly, the design supported autonomy and learner choice—two principles critical to adult learning in time-constrained environments.

The microlearning model also reinforced our broader pedagogical goals: to keep the training low-barrier, practice-oriented, and relevant across diverse roles and skill levels. Rather than front-loading theoretical content or requiring sequential progression, we designed each day to stand alone while contributing to a cumulative sense of competence with GenAI tools.

Figure 5 provides an example of the Canvas layout used on Day 4, Using Images as Inputs, illustrating how microlearning was operationalized within the training.

Figure 5

Example of a Slack Canvas for Day 4: Using images as inputs

To ensure that each day’s activity felt meaningful and professionally relevant, we grounded the training design in real-world tasks that participants were likely to encounter in their everyday work. Early in the planning process, we conducted informal conversations with faculty and staff to understand how they were already using—or considering using—GenAI tools in their teaching, research, and administrative tasks. We also monitored developments in higher education and industry to identify timely, relevant use cases that could inform our design.

As a result, we offered activities that were intentionally practical and adaptable. Examples included drafting emails, summarizing meeting notes, analyzing documents, exploring visual inputs, and working with structured data. Each task was framed as a plausible workplace scenario and designed to highlight the kinds of efficiencies or new capabilities GenAI could offer. At the same time, we built in flexibility: participants were encouraged to adapt the daily activity to their specific context or replace it entirely with a task of their choosing.

This choice-driven approach reinforced two central goals of the training: to promote agency and to support exploratory learning. By inviting participants to engage with GenAI tools in ways that connected to their existing workflows and priorities, we sought to lower the barrier to experimentation and increase perceived relevance. Rather than asking participants to engage with abstract or hypothetical prompts, we offered scenarios that aligned with the realities of their work and allowed them to test the usefulness of this technology on their own terms.

We reasoned that authentic, context-specific tasks would be more likely to sustain engagement and to help learners transfer what they learned to new situations. By situating GenAI exploration within real tasks, we also encouraged thoughtful evaluation of the benefits, risks, and appropriate uses of the GenAI tools our participants tried.

Figure 6 shows an example from Day 5—File Uploads: Sweeten Your GenAI LLMonade—in which participants were given two pathways based on their professional responsibilities. This type of branching activity design provided just enough structure to guide participation while preserving space for individualization and deeper exploration.

Figure 6

Example of an activity

We recognized early on that the learning experience would be shaped not only by content and activities, but also by how the material was presented visually. Our goal was to create a welcoming, intuitive, and visually coherent environment that would engage participants and support ease of use, particularly within Slack, a platform not traditionally used for structured learning. To that end, we prioritized both aesthetic design and usability, drawing on principles from multimedia learning theory (Mayer, 2024) to minimize cognitive load and enhance user experience.

Our graphic designer played a central role in shaping the visual identity of the training. This included developing a core thematic image that captured the playful spirit of the experience (see Figure 7), as well as designing visual elements that contributed to cohesion and structure. These included stylized page dividers used in Slack Canvas (Figure 8), custom digital badges (Figure 9), and iconography aligned with the central metaphor of the training—When Life Gives You LLMs, Make LLMonade.

Rather than treating visual design as decorative, we approached it as an integral part of the learning experience. Each graphic element served a functional purpose: breaking up content into digestible sections, reinforcing the training theme, guiding the eye through Slack posts, and rewarding engagement through visual feedback. The graphics were intentionally consistent and aligned with the tone of the training: lighthearted, exploratory, and welcoming.

Figure 7

Image representing the theme of the training

Figure 8

Image showing page dividers to break down the content

Figure 9

Image of a digital badge

While the inclusion of visuals in instructional design might seem self-evident, their value lies in intentionality. Effective visual design is not simply about making content “look good.” Rather, it is about aligning visuals with meaning and reinforcing key messages. In this case, visuals helped transform a potentially unfamiliar or intimidating topic into an accessible and engaging experience.

We also recognized that many participants might approach GenAI with skepticism, hesitation, or uncertainty. By crafting a visually inviting space, supported by vibrant imagery, consistent formatting, and playful branding, we aimed to ease that hesitation and make trying GenAI tools feel less daunting.

In sum, the visual layer of the training was not an afterthought. It was an essential component of our holistic design strategy: one that supported usability, reinforced tone, and contributed to the overall accessibility and appeal of the experience.

To support the smooth delivery and management of the training, we relied on university-supported tools that aligned with the learning objectives of the training and our logistical constraints. Each tool played a specific role in enabling communication, tracking participation, and delivering content in a cohesive, user-friendly format.

We used Qualtrics for registration and baseline data collection, which allowed us to streamline sign-ups while also gathering initial information about participants’ backgrounds and self-reported familiarity with GenAI tools. This early data helped us understand the diversity of our audience and anticipate potential support needs.

We managed daily communication using Yet Another Mail Merge (YAMM), which allowed us to send automated, personalized emails to each participant. These messages included Slack onboarding instructions, daily topic overviews, key takeaways, and links to the corresponding discussion channels. This system enabled us to maintain a consistent cadence of contact while ensuring that each participant received clear, timely guidance.
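Conceptually, a mail merge fills one message template with values from each row of a spreadsheet. The Python sketch below illustrates that idea with the standard library's `string.Template`; the template fields, the sample row, and the channel name are hypothetical, and the `$field` placeholder syntax is Python's, not YAMM's.

```python
from string import Template

# Hypothetical daily email template; each placeholder stands in for a
# spreadsheet column. This is not YAMM's own template syntax.
DAILY_EMAIL = Template(
    "Hi $first_name,\n\n"
    "Day $day: $topic\n"
    "Key takeaway: $takeaway\n"
    "Join the discussion in $channel\n"
)


def render_email(row: dict) -> str:
    """Fill the template from one participant/day row; a missing field raises KeyError."""
    return DAILY_EMAIL.substitute(row)


# Hypothetical sample row (the topic is Day 1 from the schedule above).
row = {
    "first_name": "Sam",
    "day": 1,
    "topic": "Prompt engineering for beginners",
    "takeaway": "Specific prompts tend to produce more useful outputs.",
    "channel": "#day-1-prompt-engineering",
}
print(render_email(row))
```

Using `substitute` rather than `safe_substitute` makes a missing field raise a `KeyError` instead of silently producing an email with an unfilled placeholder.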

The training itself was hosted on Slack, where we created separate channels for each of the 10 daily topics. We used the Slack Canvas feature to deliver structured microlearning activities directly within the platform. This integration supported ease of access for participants, many of whom were already familiar with Slack as a workplace communication tool.

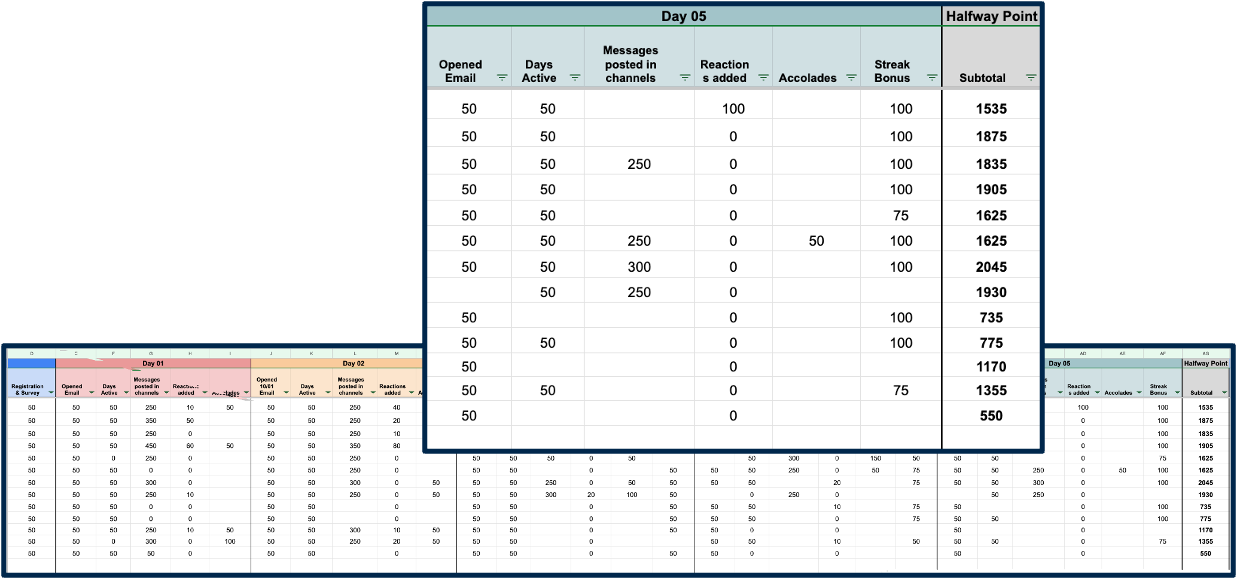

We used Google Sheets to track participation and scores, where we manually recorded points, activity completion, and discussion engagement. While this required daily oversight, it allowed us to maintain transparency and flexibility in awarding points, accolades, and streak bonuses—key components of our engagement strategy.
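Streak bonuses depend on how many consecutive days a participant was active. Because the exact streak rule and bonus amount are not specified here, the Python sketch below assumes a streak is a run of consecutive training days (numbered 1 to 10) with Slack activity and only computes the longest such run; `longest_streak` is a hypothetical helper, not a Google Sheets function.

```python
from typing import Iterable


def longest_streak(active_days: Iterable[int]) -> int:
    """Length of the longest run of consecutive training days with Slack activity."""
    days = set(active_days)
    best = 0
    for d in days:
        if d - 1 not in days:  # d is the first day of a run
            length = 1
            while d + length in days:
                length += 1
            best = max(best, length)
    return best


# Active on days 1-3, 5-6, and 8-10: the longest run is 3 days.
print(longest_streak([1, 2, 3, 5, 6, 8, 9, 10]))  # 3
```

Starting a count only at days whose previous day is inactive means each run is measured once, which keeps the check cheap even if the log contains duplicate entries.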

Upon completion of the training, we issued digital badges and certificates through Canvas Credentials (formerly Badgr). This platform allowed participants to claim verifiable digital credentials and, if desired, share their badges on professional platforms such as LinkedIn. Tying the badge system to a recognized credentialing tool enhanced the perceived value of participation while reinforcing the professional relevance of the training.

Together, these tools formed an integrated ecosystem that enabled us to deliver a cohesive, interactive, and well-supported experience. Our technology choices were guided not only by functionality, but also by institutional availability, participant familiarity, and the need to create a low-barrier, high-impact learning environment.

To evaluate the effectiveness of the training, we developed a brief post-training survey that captured participants’ immediate reactions to the content, delivery format, and perceived value of the experience. While we did not formally adopt the Kirkpatrick Model, our evaluation approach aligned with its first level—reaction—by focusing on participant satisfaction, perceived relevance, and self-reported learning (Kirkpatrick & Kirkpatrick, 2006).

In designing the Likert-style survey items, we followed best practices in instrument development, drawing on recommendations from Chyung et al. (2018), Joshi et al. (2015), and Revilla et al. (2014). We used descending-order response scales to reduce the risk of inflated positive ratings and selected a 5-point agree–disagree format to balance usability and measurement quality. We prioritized clarity and conciseness in wording to reduce cognitive load and ensure accessibility for a broad professional audience.

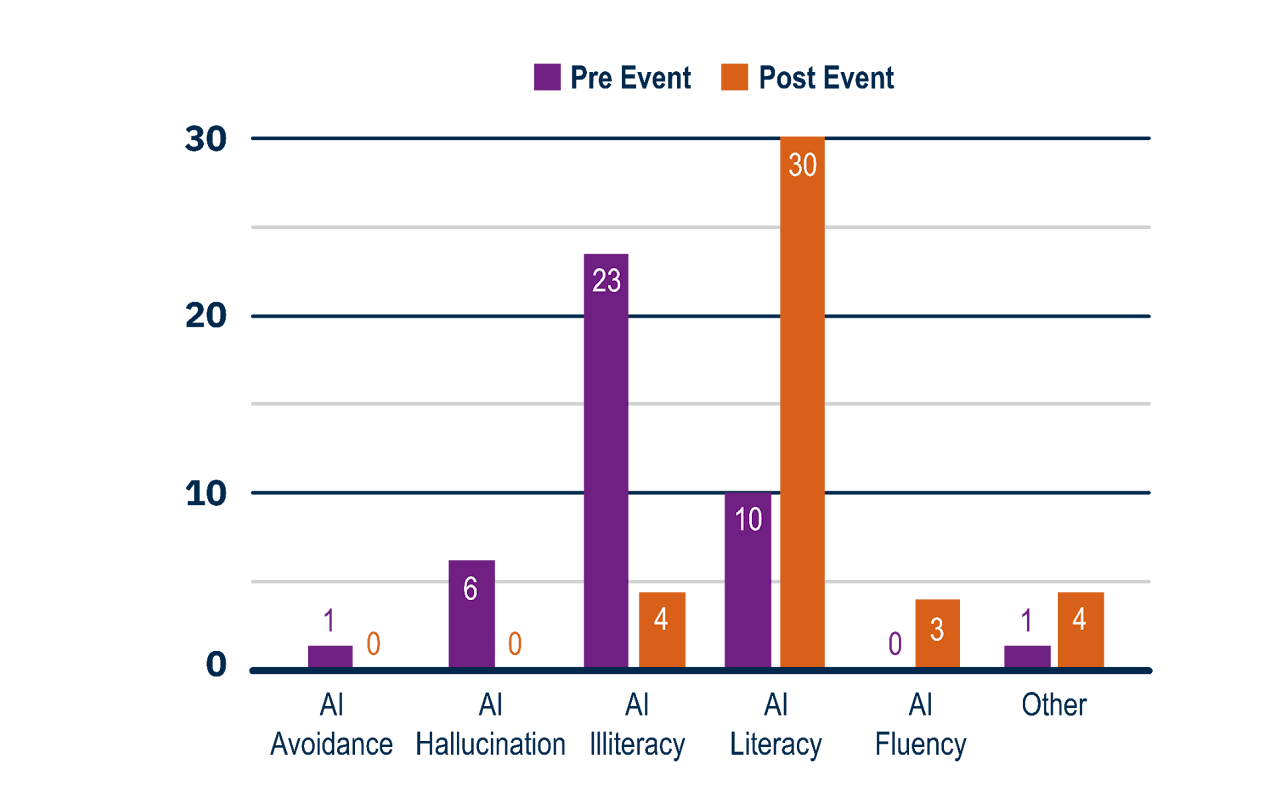

To supplement these perception-based items, we included a pre/post self-assessment prompt that asked participants to reflect on their AI literacy before and after the training. We adapted the response categories from Fang and Broussard’s (2024) article on GenAI adoption in course design, which outlines user stages such as AI Illiteracy and AI Hallucination. This question provided insight into participants’ perceived shifts in confidence and conceptual understanding.

Below are the key outcomes of the training:

Among participants who completed both the pre- and post-training surveys (n = 41), the number identifying as AI-literate increased from 10 to 30 by the end of the program (see Figure 10). This finding suggests a meaningful shift in self-perceived capability following participation in the training.

Figure 10

Pre- and Post-Training Level of AI Literacy

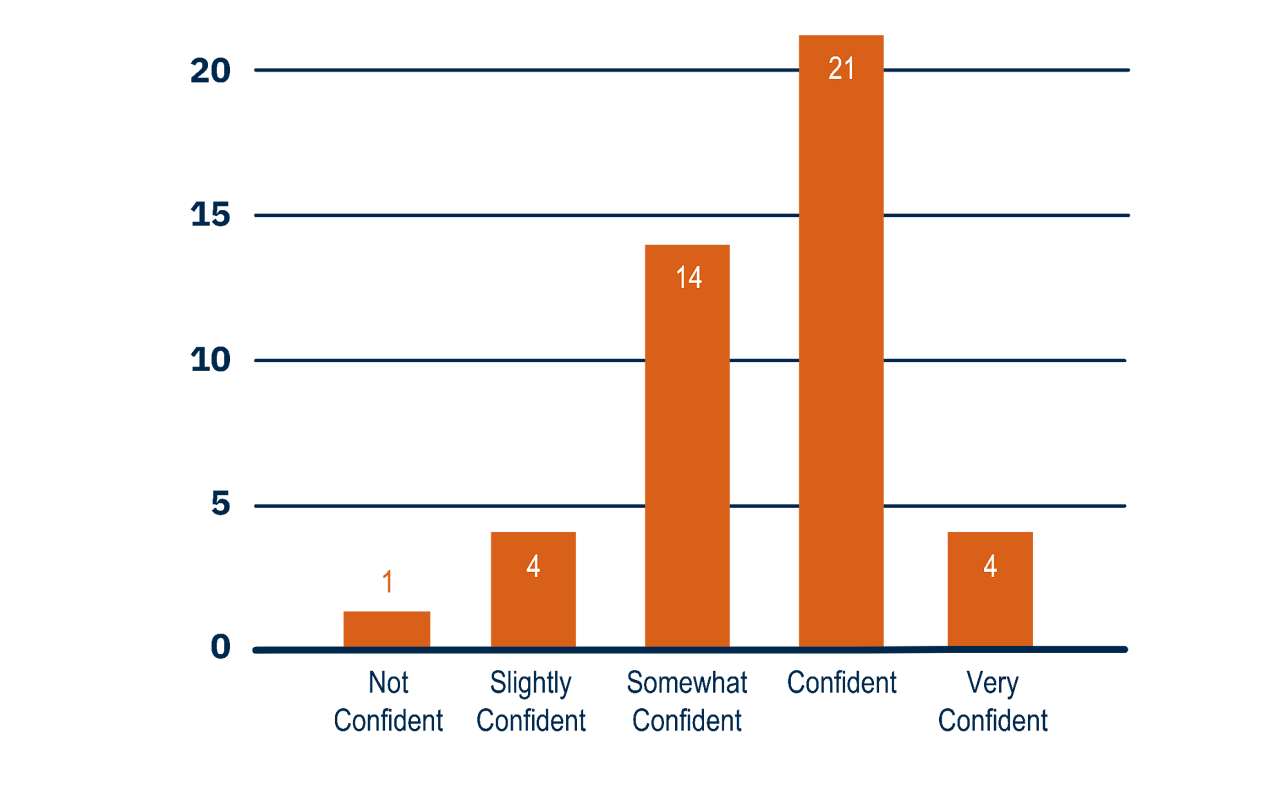

After completing the training, the majority of participants (n = 25) reported that they felt confident using GenAI tools in their workplace (see Figure 11).

Figure 11

Reported Confidence Using GenAI Tools in the Workplace

Open-ended responses provided additional insight into the learning experience. Key strengths identified by participants included:

Social learning and community building. As one participant shared:

I thought this was a fantastic training! I like that it was self-paced and I could complete the activities at a time that worked well for me. I loved the Slack component and it was so helpful to read about what was working for others. The exercise pushed me to try new AI tools and approaches and in general, I have found myself using AI more since completing the training.

The exploratory nature of the training, which allowed participants to discover and experiment with different tools in their professional settings. As one participant noted:

I really liked the range of topics here. I'd used AI previously for Day 1 tasks and a little bit of coding. I didn't know about how many applications there were.

The microlearning format of the training, with its bite-sized content. As one participant shared:

The bite-sized, go at your own pace format made it very approachable, and took the pressure off. I appreciated knowing the resources would still be available even if I didn't get to something on a particular day.

The applicability of the topics, as one participant’s comment illustrates:

I absolutely loved participating in this course. All the topics were relevant and I was able to use them in real-work situations. Thank you so much for putting this together. I learned a lot and am ready to take on even more AI challenges.

Alongside the positive feedback, participants also raised concerns, such as questions about the privacy and safety of GenAI tools, the accuracy of generated content, and a preference for university-supported tools over external ones. One participant shared:

The Slack conversations and ability to see how colleagues were using it was VERY HELPFUL. I will say that I am hesitant to use AI in my work given that all of work is research based and I am skeptical about using AI to generate things related to our research. I noted that some people expressed this but not many. I am distrustful of where this data goes when using AI. In addition, I find our students and younger staff are using AI and that sometimes this takes away their ability to think critically.

We observed, and participant feedback later confirmed, that certain topics, such as data analysis with GenAI tools, would benefit from additional scaffolding and step-by-step walkthroughs. While our goal was to create a largely exploratory learning experience, this insight highlights an area for improvement in future professional development offerings. It also illustrates the complexity of designing programs that serve a wide range of interests and proficiency levels, which requires a creative, flexible, and agile approach to meet diverse learning needs effectively.

In addition to the self-reported data, we monitored participant engagement using a combination of automated and manual tracking methods. Slack analytics allowed us to observe activity levels across daily channels, including post frequency, replies, emoji reactions, and participation streaks. Email engagement metrics captured through YAMM provided insight into daily open rates and link clicks, helping us understand which topics generated the most interest.

To support the gamified structure, we manually recorded activity and calculated points using a shared Google Sheet. This allowed us to award badges based on engagement levels and ensured that participation data remained up to date throughout the challenge. These behavioral analytics complemented the survey data by offering an objective view of how participants engaged over time.

For example, by Day 5, several participants had consistently opened emails, contributed to Slack, and accumulated streak bonuses—indicators of sustained engagement, even among those who did not complete the final survey.

Figure 12 shows a snapshot of our participation tracking and scoring dashboard at the midpoint of the training.

Figure 12

Midpoint Participation and Scoring Dashboard

This project exemplified the challenges and opportunities of designing within an ill-structured, evolving landscape: specifically, how to introduce GenAI to a diverse group of professionals with varying roles, schedules, and levels of familiarity with emerging technologies. While our initial intent was clear (to promote GenAI literacy through a low-barrier, hands-on training), the scope of what could be included quickly became a design challenge. Topics like prompt engineering, tool comparison, ethical concerns, and human–AI alignment all felt important, but we had to continually return to a core guiding question: What will be most useful and manageable for our participants?

In this specific context, we operationalized AI literacy as both functional and critical: understanding what GenAI tools can and cannot do, and cultivating a foundational awareness of their ethical implications. Our goal was to foster not mastery, but confident experimentation grounded in practical relevance. We wanted participants to leave not as experts, but as more informed, empowered users.

This experience also showed that having a previously successful project to draw on, which forms part of our design precedent knowledge, did not eliminate the need to think creatively and make sound design judgments. We began with a familiar structure: a 10-day, gamified, microlearning-based challenge. But that foundation alone was not enough. Designing for GenAI literacy required careful consideration of which tools to introduce, how to frame them, and what types of activities would foster exploration without overwhelming learners. We had to anticipate not just how participants would engage with the tools, but how they would talk about them, critique them, and integrate them into their work.

It came as no surprise that topics like data analysis and coding with GenAI tools needed more support and framing than others. These topics are technically introductory, but they involve more nuance than simply “put in a prompt and see what happens.” Levels of engagement varied: some participants shared code and advanced use cases, while others were just beginning to explore what GenAI could offer. Given our time constraints and limited capacity as a small team of four, we were not able to create our own walkthroughs or worked examples. Instead, we curated videos available on YouTube, such as tutorials on using ChatGPT plug-ins for Excel or Google Sheets. While these helped fill the gap, some were not as focused or concise as we would have liked. The topic itself was heavier than others, and participants would have benefited from more customized support. These days did not go badly, but they did not go as smoothly as others. For the next iteration, we developed customizable resources that provided more consistent scaffolding. For the data analysis day specifically, we kept the activity intentionally simple and practical, such as demonstrating how GenAI can support initial data analysis through prompting, while still allowing participants to engage at different levels.

In addition, design framing plays a critical role in launching and shaping learning experiences. In retrospect, we could have emphasized more prominently that this training was intended to help participants explore existing GenAI tools and consider how they might apply them in their own professional roles. For the next iteration, we made that framing more explicit by presenting the training as a low-pressure, exploratory experience rather than a technical deep dive or a comprehensive seminar on AI ethics. We wanted to lower the barrier to entry and help participants build confidence through hands-on experimentation. At the same time, we recognized the importance of addressing ethical considerations more directly, so for the next iteration we also developed a supplemental resource that outlines key ethical concerns, along with practical examples to help participants reflect on potential risks and responsible use.

Every new design project opens space for creative thinking and originality, while also revealing specific areas for growth. This experience helped us sharpen our focus, clarify our design intent, and identify clear directions for future improvement.

This design case documents one team’s response to a practical challenge: how to introduce GenAI tools in a way that’s approachable, relevant, and grounded in the working realities of higher education professionals. Rather than offering a generalized model, we present a situated account of design-in-action—what we prioritized, how we made trade-offs, and what we learned through iterative practice.

In designing this training, we made a series of intentional design choices based on what we knew about our context and our participants. We prioritized hands-on practice over theoretical explanation to help participants build confidence through exploration. We structured the training to be asynchronous and self-paced, which gave participants flexibility and allowed them to engage on their own terms. We introduced foundational topics first and built toward more complex applications, aiming to reduce cognitive load and maintain momentum. We used gamification in a purposeful way to encourage sustained engagement.

More importantly, we focused on creating a learning environment that encouraged curiosity and experimentation, especially for participants who were newer to GenAI tools. Throughout the design process, we emphasized the relevance of learning activities, selecting tools and examples with the goal of making the training feel applicable to participants’ day-to-day responsibilities.

This design case exemplifies how thoughtfully designed learning experiences can support professionals in engaging with GenAI in ways that are practical, grounded in their everyday work, and mindful of key ethical considerations. It also highlights the value of context-responsive, practitioner-led instructional design in navigating fast-moving technological landscapes. As GenAI continues to evolve, so too must our approaches to teaching and learning with it. We offer this design case as one example of what intentional design, responsive to diverse learning needs, can look like in practice.

We would like to acknowledge Joanna Kovacevich, Instructional Technology Consultant, for her instrumental role in managing the logistics of the “If life gives LLMs, make LLMonade” training, including setting up the Slack workspace and tracking participant engagement. We also thank Timothy Sharp, Graphic Designer, for designing the visual identity for the training, including banners, accolades, dividers, and other key visual elements. Their contributions were essential to the successful implementation of this important initiative.