EdTech Archives

International Consortium for Innovation and Collaboration in Learning Engineering (ICICLE) 2024 Conference Proceedings: Solving for Complexity at Scale

Competency standards are essential in nearly all environments implementing learning engineering processes. These standards enable alignment across diverse educational elements—such as curricula, assessments, and learning resources—ensuring that all stakeholders, including educators, administrators, and employers, work toward common learning goals. Without consistent competency frameworks, tracking progress, evaluating outcomes, and facilitating seamless transitions between learning stages or institutions become challenging (Jones & Voorhees, 2002). Whether in K-12 education, higher education, or workforce training, competency standards ensure that learning processes are focused, measurable, and adaptable to evolving needs.

However, simply mandating a unified set of competency standards or a controlled vocabulary has proven to be an unsustainable strategy that can undermine local adoption and implementation of competency-based learning. An empirical example is the gradual decline in adoption of the Common Core State Standards (CCSS), a mandated, unified set of competency standards in the United States. Despite initial nationwide support, states began to withdraw or modify their involvement, citing local needs, political resistance, and a desire for greater control (Jochim & Lavery, 2015). This shift highlights the difficulty of maintaining standardized frameworks across diverse educational systems with competing priorities. As states implement their own adapted versions or withdraw from the CCSS, the value of the Common Core as a shared standard declines.

This demonstrates that instead of relying on a single standard, education systems need tools that allow competencies to be translated and aligned across diverse frameworks. Given that multiple frameworks are likely to coexist within any given domain for the foreseeable future, such tools are essential. As skills increasingly become the currency of the 21st century (Theben, Plamenova, & Freire, 2023), competency frameworks—and the ability to translate competencies between them—are critical for stakeholders to align skills with learning outcomes in competency-based environments (Dragoo & Barrows, 2016). As a result, these tools will function as a form of 'currency exchange,' enabling the translation of competencies across different standards.

Teachers, admissions officers, hiring professionals, and others who work with competency frameworks seek tools that map between competency frameworks and learning activities in a way they perceive as legitimate (Penuel, Fishman, Gallagher, Korbak, & Lopez-Prado, 2009). Other stakeholders may want to map competency frameworks across coursework, universities, or states. Existing solutions fail to account for local requirements and user intent, and they lack transparency, particularly regarding how alignments are generated. As a result, officials have questioned the defensibility of the alignments these systems produce. Engaging stakeholders throughout the inference process improves transparency in the generated alignments, which is vital to integrating diverse perspectives and addressing user needs effectively.

MatchMaker leverages a non-structured, polyperspective intermediary, the MatchMaker Palet™, to describe course materials and competency frameworks. Learning resources, competency frameworks, and any other components of a learning engagement are referred to collectively as educational elements. Once described, matches between educational elements (often called mappings or alignments) are generated by a tunable inference engine. For example, the engine can generate matches between learning resources and competency frameworks.

Intermediary statements, as found in the Palet™, are descriptive. That is, they are intended to describe the skills that a resource teaches, an assessment measures, or a competency framework defines. Each intermediary statement consists of the statement text, a statement type, and an identifier. Defining statement types and ensuring that they function well within the inference engine is a current focus of MatchMaker’s research efforts.

Descriptors are the data elements on which the matching engine operates. A descriptor describes a skills statement (e.g., a state curriculum standard), a learning resource, an assessment, a learning experience, or any other learning object. Each descriptor contains metadata aligned with industry standards, along with a set of Palet™ statements that collectively describe the intention of the element. The descriptor data model is based on the Learning Resource Metadata Initiative (LRMI) data model (Dublin Core, 2024), IEEE P2881 (IEEE P2881 Committee, 2024), and Schema.org.
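
To make the shape of these data elements concrete, the following is a minimal sketch of a statement and a descriptor as Python dataclasses. It is not MatchMaker's actual schema: the class names, field names, statement type labels, and the `shared_statement_ids` helper are all hypothetical, chosen only to mirror the description above (statement text, type, and identifier; descriptor metadata plus a set of statements).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PaletStatement:
    """One intermediary statement: an identifier, a type, and the text."""
    identifier: str
    statement_type: str  # hypothetical label; the real type vocabulary is not given here
    text: str


@dataclass
class Descriptor:
    """Describes one educational element (standard, resource, assessment, ...)."""
    element_id: str
    element_kind: str  # hypothetical values such as "competency" or "learning_resource"
    metadata: dict[str, str] = field(default_factory=dict)  # e.g. LRMI / Schema.org properties
    statements: list[PaletStatement] = field(default_factory=list)


def shared_statement_ids(a: Descriptor, b: Descriptor) -> set[str]:
    """Return identifiers of statements that describe both elements."""
    return {s.identifier for s in a.statements} & {s.identifier for s in b.statements}


# Illustrative data only; none of these identifiers come from a real framework.
fractions = PaletStatement("stmt-1", "skill", "Add fractions with unlike denominators")
decimals = PaletStatement("stmt-2", "skill", "Convert fractions to decimals")

standard = Descriptor("std-001", "competency",
                      {"name": "Fraction addition", "educationalLevel": "Grade 5"},
                      [fractions])
resource = Descriptor("res-042", "learning_resource",
                      {"name": "Fractions worksheet"},
                      [fractions, decimals])

overlap = shared_statement_ids(standard, resource)
```

Here the two descriptors share one statement, which is the kind of signal a matching engine could weigh. How the actual engine uses shared statements is not specified in this paper.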

A matching dashboard enables fine-tuning of the inference engine by adjusting weights and thresholds for Palet™ statement types and their connections to the elements they describe. Research is being conducted on how to tune the proprietary inference engine to generate the “best” matches for diverse use-cases (e.g., remediation, skills gap analysis, assessment).

The MatchMaker team has applied learning engineering principles to both (1) identifying the features and functionality needed to enable end users to apply learning engineering processes within their own workflow and (2) prototyping the inference engine. By sharing how learning engineering principles were applied to the feature selection and prototyping processes, the MatchMaker team offers a case study in how to design technologies with learning engineering at the forefront.

Stakeholders and users of MatchMaker’s enabling technology may seek alignments and mappings between competency frameworks and other frameworks, resources, or educational elements. The alignment is generated through an intermediary and a tunable inference engine with 16 adjustable parameters, or weights.

An ongoing research challenge is to determine the optimum parameters for the inference engine given different matching scenarios. Users may need to adjust the parameters, which can be saved as a Match Profile, to ensure the generated matches effectively serve their specific use case. Throughout the course of a user’s workflow, users may also need to align different competency frameworks and have concrete metrics to evaluate the accuracy of these alignments.
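
As an illustration of what saving parameters as a Match Profile could look like, here is a minimal sketch that stores a named set of 16 weights and round-trips it through JSON. The paper does not name the parameters or describe a storage format, so the `param_01` to `param_16` keys, the default value of 1.0, the `MatchProfile` class, and the JSON layout are all assumptions made for this example.

```python
import json
from dataclasses import asdict, dataclass

N_PARAMETERS = 16  # the paper states the engine has 16 adjustable parameters


def default_weights() -> dict[str, float]:
    """Placeholder parameter names; the real names are not given in the paper."""
    return {f"param_{i:02d}": 1.0 for i in range(1, N_PARAMETERS + 1)}


@dataclass
class MatchProfile:
    """A named, saveable set of inference-engine weights (hypothetical layout)."""
    name: str
    weights: dict[str, float]

    def __post_init__(self) -> None:
        if len(self.weights) != N_PARAMETERS:
            raise ValueError(f"expected {N_PARAMETERS} weights, got {len(self.weights)}")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, payload: str) -> "MatchProfile":
        data = json.loads(payload)
        return cls(name=data["name"], weights=data["weights"])


# Start from the defaults and emphasize one parameter for a given use case.
remediation = MatchProfile("remediation", {**default_weights(), "param_03": 2.5})
restored = MatchProfile.from_json(remediation.to_json())
```

Keeping a profile as plain data makes it easy to save one profile per use case (for example remediation or skills gap analysis) and compare the matches each one produces.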

Providing users with a high level of control and visibility in their decision-making process enables them to apply learning engineering principles within their workflow. To support this, features were designed with three core learning engineering principles at the forefront.

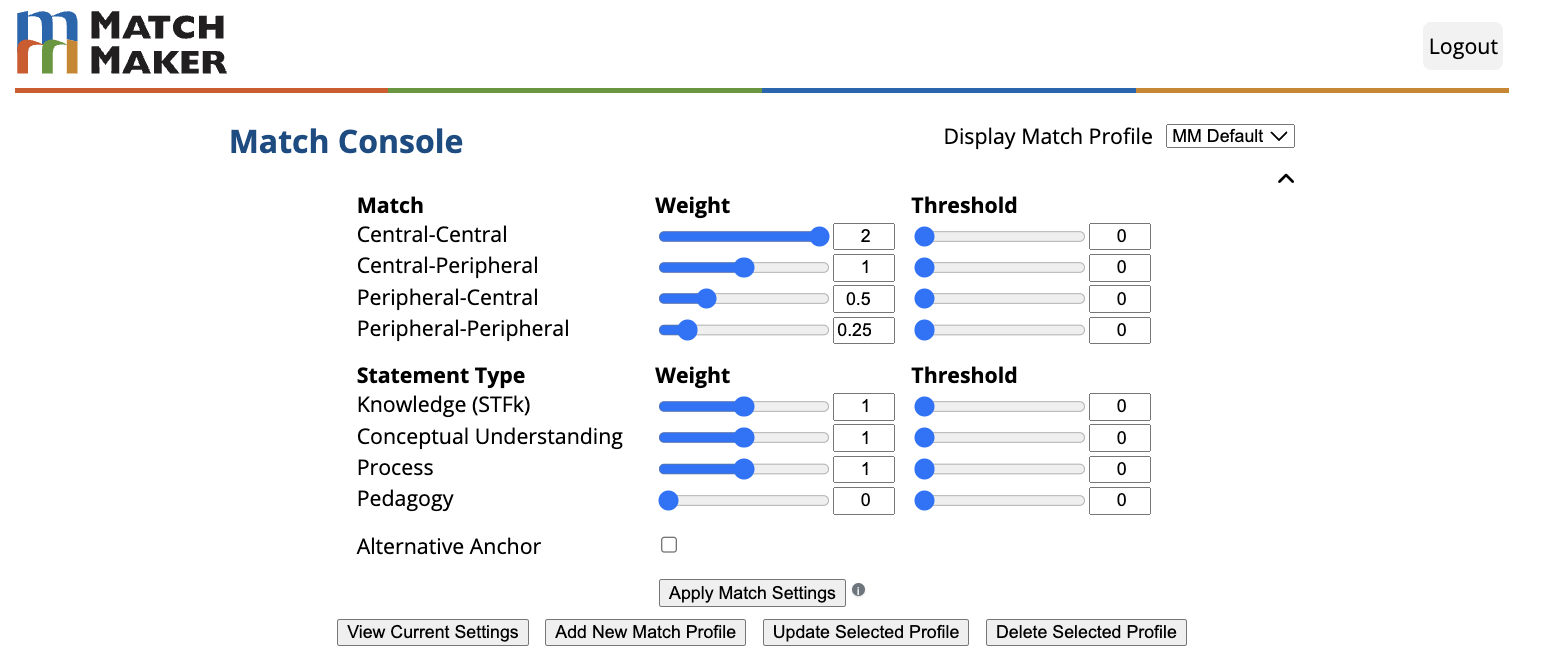

Figure 1

Experimental match console for adjusting the 16 inference engine parameters.

Continuous, iterative development is critical to learning engineering, which requires cycles of creation, implementation, and investigation (Goodell, Kessler, & Schatz, 2022). To support this, users can create Match Profiles and make rapid adjustments to parameters so that the generated alignments meet their specific use case.

For certain use cases, the matches may need to be expansive, mapping a competency framework to an extremely broad set of learning resources. There may be other circumstances where the matches need to be strict, matching a set of learning resources with other learning resources targeting the same competencies.

Defining what constitutes a sufficiently 'broad' or 'precise' match is inherently complex. Identifying a universal set of parameters for the inference engine to handle both cases effectively is challenging. Instead, these parameters must be refined through rapid iteration until the generated alignments meet the specific requirements of the use case. Giving users visibility into and control over the refinement process allows them to employ learning engineering in their workflow.
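
The contrast between expansive and strict matching can be sketched with a single score threshold. This is a simplification under stated assumptions: the paper does not describe how the engine scores candidates, so the scores below are made-up values in the range 0 to 1, and `select_matches` is a hypothetical helper, not part of MatchMaker.

```python
def select_matches(scores: dict[str, float], threshold: float) -> list[str]:
    """Keep candidates scoring at or above the threshold, best first.

    Ties are broken by identifier so the ordering is deterministic.
    """
    kept = [(element_id, score) for element_id, score in scores.items() if score >= threshold]
    kept.sort(key=lambda pair: (-pair[1], pair[0]))
    return [element_id for element_id, _ in kept]


# Made-up similarity scores between one competency and four learning resources.
scores = {"res-a": 0.92, "res-b": 0.71, "res-c": 0.40, "res-d": 0.15}

expansive = select_matches(scores, threshold=0.3)  # broad coverage of resources
strict = select_matches(scores, threshold=0.9)     # only near-equivalent resources
```

Lowering the threshold admits more loosely related resources, and raising it keeps only the closest ones. In practice a user would iterate on such settings until the result fits the use case.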

Adaptable, extensible processes keep systems flexible, allowing new frameworks or content to be integrated seamlessly as educational demands and contexts change. Learning engineering encourages designing systems that can evolve by incorporating new frameworks, content, or technologies, so that tools remain relevant as educational needs and environments change. MatchMaker supports this adaptability in users' workflows because its functionality can be expanded to incorporate new educational elements and frameworks as needed.

Data-informed decision making is central to learning engineering because it ensures that insights from data, such as learner behavior or performance metrics, are systematically used to guide design choices and optimize both learning processes and outcomes over time. The Match Index enables users to rapidly evaluate the accuracy of a match between two educational elements and, from this data, decide how to tune the parameters of the inference engine.

In his ICICLE 2023 keynote, Ken Koedinger offered the following quote from his recent paper:

Our evidence suggests that given favorable learning conditions for deliberate practice and given the learner invests effort in sufficient learning opportunities, indeed, anyone can learn anything they want. (Koedinger, Carvalho, Liu, & McLaughlin, 2023)

A key element in creating 'favorable conditions for deliberate practice' is selecting learning exercises aligned with the learner’s goals and appropriate to their skill level (Dunagan & Larson, 2021). For example, a music teacher selects the next piece for a student, a math tutoring application assigns suitable problems, and a programming student takes on a challenging algorithm. These decisions rely on three key factors: the learner’s current skill level, the next step in their learning progression, and the skills represented by the catalog of available activities. Ideally, these elements align with a single competency framework, but in practice, student records, progressions, and resources are often tied to different frameworks.
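
The selection problem described above can be sketched as follows. The example assumes that a crosswalk already exists that maps each framework-specific skill identifier to a shared identifier; producing such a crosswalk is the hard part and is not shown. All identifiers, the `to_common` mapping, and the `next_activities` function are hypothetical.

```python
from typing import Optional


def next_activities(
    mastered: set[str],
    progression: list[str],
    catalog: dict[str, set[str]],
    to_common: dict[str, str],
) -> tuple[Optional[str], list[str]]:
    """Find the next unmastered skill and the activities that target it.

    mastered    -- skill IDs from the learner record's framework
    progression -- ordered skill IDs from the progression's framework
    catalog     -- activity ID -> skill IDs from the catalog's framework
    to_common   -- assumed crosswalk from any framework ID to a shared ID
    """
    known = {to_common[s] for s in mastered if s in to_common}
    target = next(
        (to_common[s] for s in progression if s in to_common and to_common[s] not in known),
        None,
    )
    if target is None:
        return None, []
    hits = sorted(
        activity
        for activity, skills in catalog.items()
        if any(to_common.get(s) == target for s in skills)
    )
    return target, hits


# Three artifacts, each tied to a different (made-up) framework.
mastered = {"REC:add-fractions"}
progression = ["PROG:F1", "PROG:F2"]
catalog = {"worksheet-7": {"CAT:mult-frac"}, "quiz-2": {"CAT:add-frac"}}
to_common = {
    "REC:add-fractions": "c1", "PROG:F1": "c1", "CAT:add-frac": "c1",
    "PROG:F2": "c2", "CAT:mult-frac": "c2",
}

target, activities = next_activities(mastered, progression, catalog, to_common)
```

Without the crosswalk, the learner record, the progression, and the catalog cannot be compared at all, which is the gap that translation between frameworks is meant to close.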

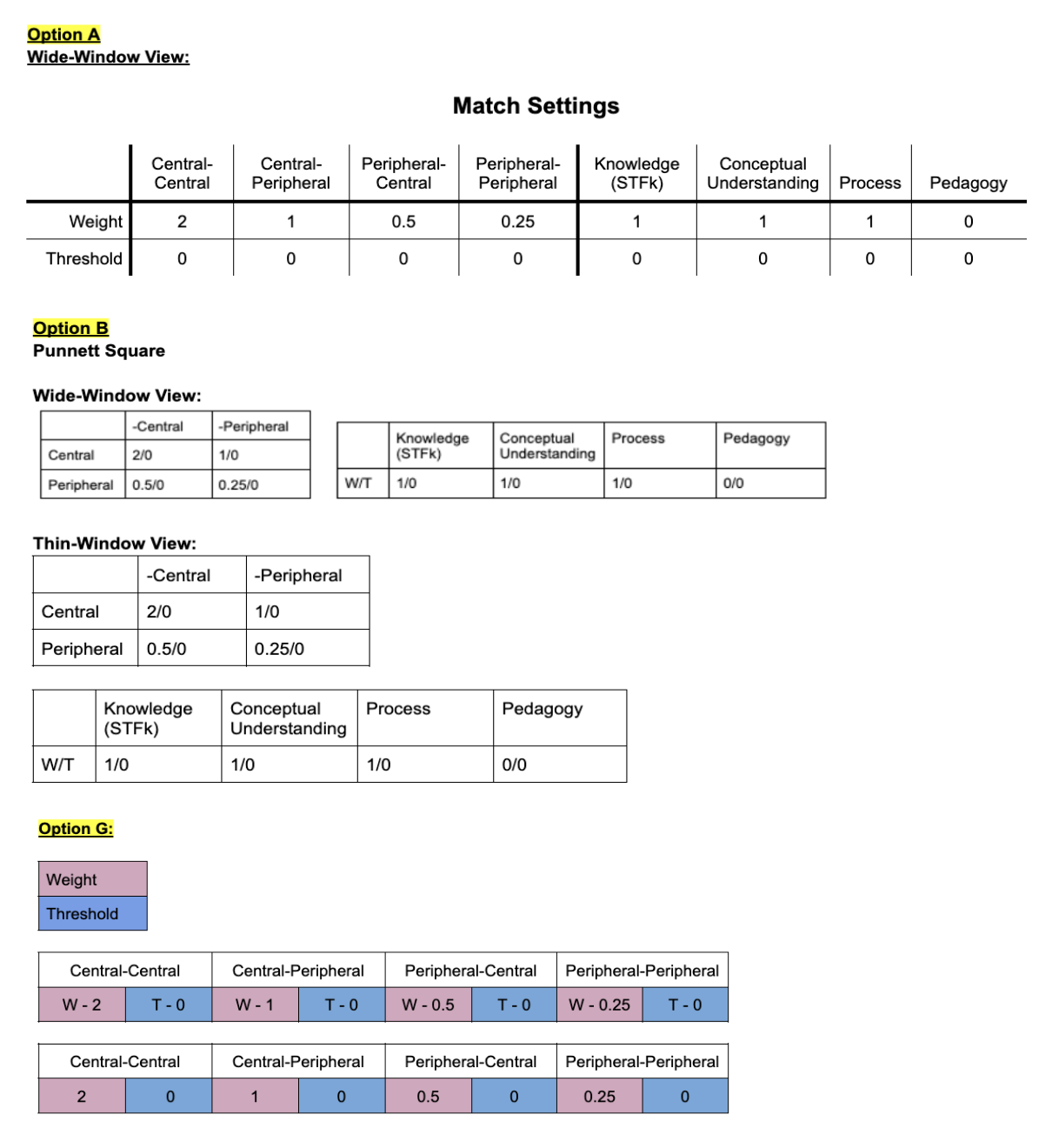

MatchMaker addresses this issue through an inference engine and intermediary, but a key design challenge is presenting the 16 parameters clearly to users or researchers. Using learning engineering principles, we applied rapid prototyping (Thai et al., 2022) to explore different ways of presenting these parameters. The goal is to create a compressed view that enables users to quickly understand the parameter settings. Figure 2 illustrates three of the seven initial designs we developed.

Figure 2

Three of the seven designs resulting from the initial rapid prototyping session.

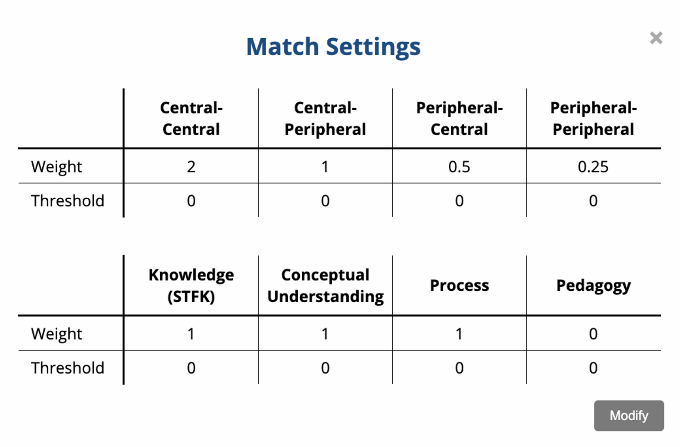

We iteratively presented designs to potential users of the application, each time eliminating certain options and refining others. The result was two designs. Figure 3 shows the final form for presenting the 16 parameters. Figure 4 illustrates how the learning engineering process was applied to the development of this specific feature.

Figure 3

Selected design for presenting the 16 parameters to the inference engine.

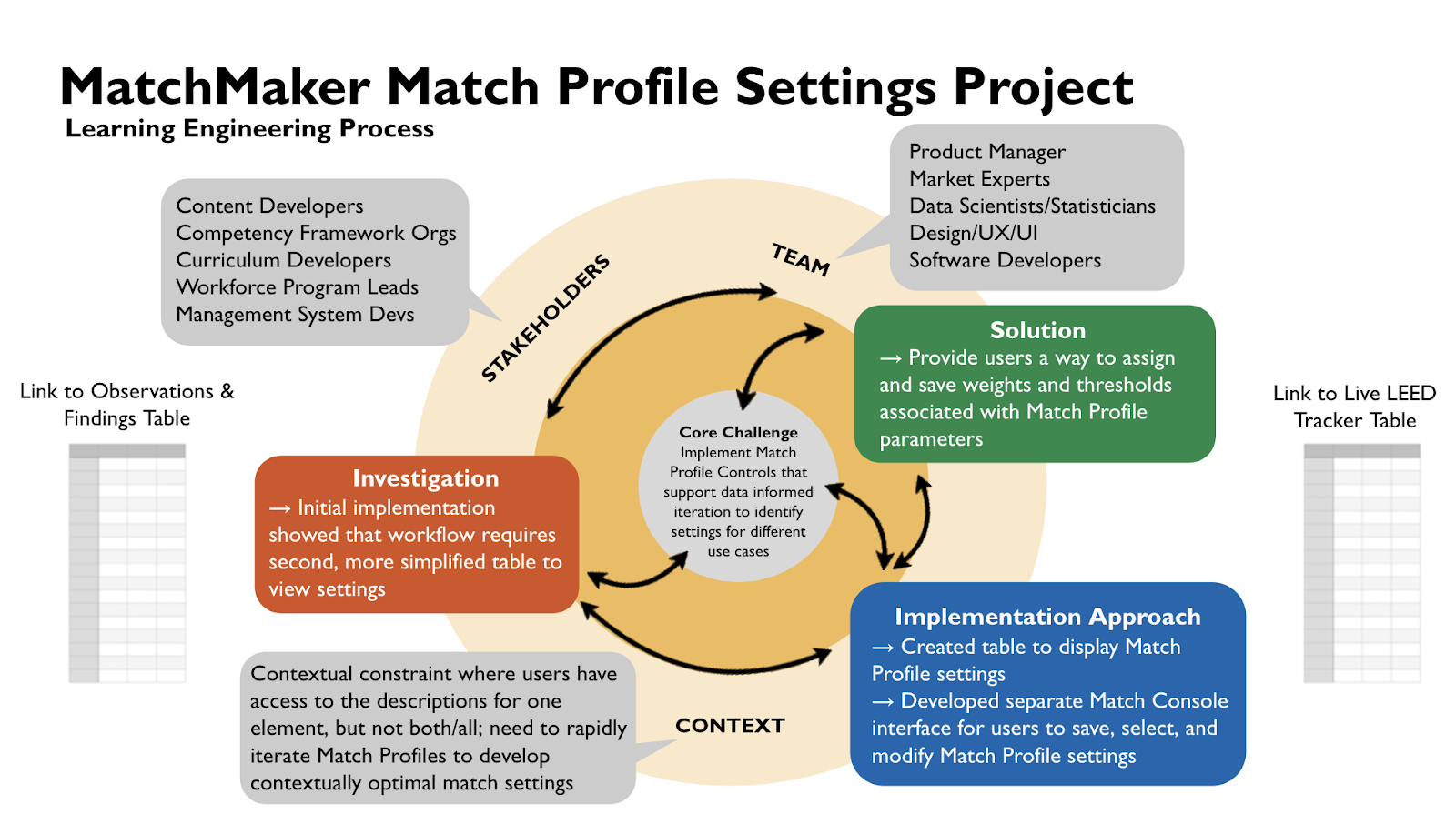

Figure 4

Empirical application of the learning engineering process to Match Profile settings.

Rapid prototyping, external review, and multiple iterations are design principles that have been proven many times over. While not exclusive to learning engineering, feedback-driven iterative design processes are critical components of it (Barr, Dargue, Goodell, & Redd, 2022). We have shown one example in which such a process was used both to improve the user experience of an application intended to support learning engineering and to develop a tool that enables users to employ learning engineering processes in their own workflow.