Extended Reality (XR) technologies hold immense promise in education. They have the potential to enhance motivation (Cárdenas-Sainz et al., 2019; Tunur et al., 2021), engagement, retention, and understanding by creating immersive, interactive, and experiential learning environments. In medical and dental education, for example, XR allows students to engage in realistic virtual patient simulations and to practice medical and dental procedures, from routine cleanings to more complex surgeries, in a risk-free and controlled setting (Wang et al., 2021). This helps students develop and refine their skills and gain confidence before performing procedures on real patients. However, despite these potential benefits, integrating XR into education poses challenges for instructors. According to Abeywardena (2023), significant barriers to the broader adoption of XR in education include a lack of open content, tools, and skills; a lack of sound pedagogy and instructional design; and limited scalability and sustainability.

Applied instructional design for XR blends instructional design principles with the unique affordances of XR, leveraging immersive technologies such as Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) to address specific educational challenges and enhance learning outcomes. It requires a multidisciplinary approach which combines the principles of instructional design, architecture, spatial design, interaction design, visual design, audio design, and user experience design (Berlund, 2023), involving collaboration between instructional designers, educators, content developers, XR technology experts, and increasingly, generative Artificial Intelligence (AI; Huynh-The et al., 2023). Some instructional design guidelines and frameworks for XR have started to emerge (Abeywardena, 2023; Berlund, 2023; Fegely & Cherner, 2023; Yang et al., 2020), but numerous challenges remain.

One of the main challenges, as we show later in the case study on authoring challenges in dental education, involves aligning XR activities with specific educational goals and desired competencies while leveraging the unique features of XR technologies, such as 3D spatial interactions, immersive simulations, and real-time feedback. XR design tasks include choosing the appropriate XR platform (VR, AR, or MR) based on learning objectives and user requirements, and creating 3D models, simulations, and interactive content that align with instructional goals. Crucially, ensuring that XR experiences are inclusive of diverse learners should be a key priority, yet practical and comprehensive guidelines for accessibility in XR remain sparse. This is the first challenge we address in this paper, for which we propose an inclusive participatory design approach to XR instructional design that involves learners and instructors as equal partners.

Instructors navigating the transition from instructional design to authoring XR learning experiences play a crucial role in shaping the effectiveness and impact of immersive learning. Their multidimensional tasks combine pedagogical expertise, content knowledge, and technological proficiency to create inclusive, engaging, and educationally sound XR environments. Instructors need to familiarize themselves with the capabilities and limitations of XR technologies and develop a foundational understanding of how XR can enhance the learning experience and align with educational objectives. They must then translate instructional design plans into tangible XR content, which may involve adapting existing materials or creating new immersive resources. Authoring for XR remains an immense challenge for most instructors because of the extent and complexity of the technical skills required. This is the second challenge we address in the paper, for which we propose a second partnership, between instructors and generative AI.

The paper is structured to address a spectrum of accessibility and inclusion considerations for XR learning experiences. The first part of the paper focuses on instructor-learner partnerships. We outline the accessibility challenges in instructional design practice and introduce participatory instructional design. We then propose and detail three stages of the process: establishing a baseline of understanding, iterative development of the learning solution, and evaluation. The second part of the paper presents a case study in dental education, illustrating instructor-AI partnerships.

While XR offers a powerful platform for varied learning experiences, not all learners access or process these experiences in the same way. There is a pressing need for instructional design principles that ensure all students, regardless of their physical, sensory, or cognitive abilities, can benefit from XR in their education. General accessibility considerations identified in the literature thus far (O’Connor et al., 2020; Savickaite et al., 2023) highlight the importance of customizable XR experiences and inclusive design practices. This involves addressing issues related to hardware and input methods so that the technology caters to varied user requirements. The design of XR content should therefore prioritize physical and sensory accessibility, avoiding unnecessary replication of physical-world barriers and incorporating diverse sensory modalities.

Barriers to the design of inclusive learning exist on many levels. Designers of learning materials struggle to recruit a sufficient number of representative learners with disabilities. From the learners' perspective, issues may arise from the difficulty of traveling to specific locations and/or accessing the learning materials themselves. These problems were, of course, exacerbated during the peak of the COVID-19 pandemic, which in turn motivated efforts to address the barriers inherent to remote collaborative design (Biggs et al., 2022a) and highlighted the need for accessibility considerations.

The complexity of perspectives and definitions surrounding inclusive education poses the first challenge for researchers (Göransson & Nilholm, 2014). Inclusive education is closely associated with diversity, equity, and equality, which calls for a holistic understanding of these principles in educational settings. Diversity encompasses a broad spectrum of student characteristics, including but not limited to differences in abilities, backgrounds, cultures, and learning styles. Equity emphasizes the fair distribution of resources and opportunities so that every student has the support they need to thrive. Equality, on the other hand, emphasizes providing the same resources and opportunities to all, regardless of individual differences. To advance discourse on inclusive education, there is a pressing need for a unified, standardized set of definitions that can serve as a foundation for research, policy-making, and practical implementation (Iwarsson & Stahl, 2003). Acknowledging these complexities and standardizing definitions should therefore be at the forefront of discussions.

Secondly, incorporating XR in education requires careful consideration of accessibility issues to ensure an inclusive learning environment. General accessibility considerations applicable in educational contexts, particularly for individuals with physical disabilities, highlight hardware-related issues, such as discomfort in wearing head-mounted displays (HMDs), and challenges with input methods (Mott et al., 2020). Accessibility features should cater to diverse user requirements, recognizing that a single solution may enhance accessibility for some users while hindering it for others. Customizability is therefore vital for meeting individual students' specific needs and should inform educational institutions' hardware purchasing decisions.

It is also essential to consider sensory aspects of XR and the intersection of XR and neurodiversity, particularly when designing immersive learning environments (Newbutt et al., 2023; Savickaite et al., 2022). Developers and educators should focus on harnessing XR's unique capabilities to provide experiences that benefit both disabled and non-disabled users. Furthermore, there is a need to consider the challenges posed by conflicting accessibility requirements: content that relies on vision excludes blind individuals, while content that relies on audio excludes deaf individuals. Continued research on haptic technology is required to broaden accessibility.

Developers and researchers should also consider that their project may well be one of the first times, if not the first time, a user has been exposed to XR technology. As a result, interacting with the virtual environment will likely be a very different experience from what users are accustomed to in the physical world, and users must be willing to learn a new set of technical skills to take advantage of it. If someone has a negative initial encounter, they may lose interest in using XR altogether. This is especially important for neurodivergent people, who may be more prone to developing automatic adverse reactions to specific occurrences (Millington et al., 2022). Ultimately, the issue concerns developers' ethical responsibility to ensure that XR technologies do not discourage potential users, especially those with neurodivergent traits, from embracing immersive educational platforms.

Thirdly, effective inclusive practices in education involve a multifaceted approach. Universal Design for Learning (UDL) principles (Larkin et al., 2014) emphasize embedding inclusive practices in both classroom instruction and syllabi. This approach acknowledges students' diverse learning needs and accommodates different ways of experiencing learning. Belonging and participation (Lourens & Swartz, 2016) are essential components of inclusive education, emphasizing the need to foster community and involvement among all students. Gale et al. (2017), for example, point out that educators need to understand education processes, set expectations, and take appropriate actions when working with students with disabilities. However, the focus should extend beyond disability alone to other contextual factors that may affect inclusivity, such as a lack of knowledge about how to develop training experiences in inclusive education.

A workshop titled "Immersive Learning and Inclusivity: Raising Awareness, Identifying Opportunities and Challenges, and Adapting Practice" at the 9th International Conference of the Immersive Learning Research Network (iLRN) illustrates how awareness training could be implemented. The workshop, facilitated by Sarune Savickaite from the University of Glasgow and Marie-Luce Bourguet from Queen Mary University of London, aimed to highlight the importance of inclusivity and accessibility in immersive learning experiences. During a 90-minute interactive session, participants engaged in small-group activities, discussions, and reflective exercises. The format included pre-set group tasks addressing accessibility issues faced by specific learner groups, fostering a shared understanding of the challenges (Savickaite & Bourguet, 2023). Although participants were already aware of the significance of accessibility, the workshop revealed insufficient knowledge of the specific accessibility needs of diverse user groups. The case studies and discussions, especially those related to autism and color blindness, emphasized the need for continuous education and research to better understand and address the unique challenges faced by learners with various accessibility needs. A key takeaway from the workshop was the realization that accessibility considerations should not be an afterthought but an integral part of the design process from the very beginning.

Further barriers to inclusive learning relate more specifically to technologies that might be included in learning materials to improve accessibility. For example, data sonification is an approach that represents data in sound. It has been used to represent many forms of data, including spreadsheets (Stockman et al., 2005), diagrams (Metatla et al., 2012), and maps (Biggs et al., 2022b), and has also been combined with haptics as part of a multimodal display (Metatla et al., 2012). However, designers of XR learning materials involving data sonification and/or haptics face a lack of standards for both technologies. While several approaches to designing data sonification have been proposed, such as parameter mapping and model-based sonification (Hermann et al., 2011), there are no agreed standards for representing particular forms of data.
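To make parameter mapping concrete, the following minimal Python sketch maps each value in a data series linearly onto a pitch and writes the result as a sequence of sine tones. It is an illustration only, assuming a linear value-to-frequency mapping over an arbitrary 220 to 880 Hz range; the function names and parameters are hypothetical, and, as noted above, no standard prescribes this particular mapping.

```python
import math
import struct
import wave


def map_to_frequency(values, f_min=220.0, f_max=880.0):
    """Parameter mapping: scale each data value linearly onto a pitch range in Hz."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # Constant data: place every value at the middle of the pitch range.
        return [(f_min + f_max) / 2 for _ in values]
    return [f_min + (v - lo) / span * (f_max - f_min) for v in values]


def render_tones(freqs, path, tone_s=0.25, rate=44100, amplitude=0.3):
    """Write one sine tone per frequency, in sequence, to a mono 16-bit WAV file."""
    samples_per_tone = int(tone_s * rate)
    frames = bytearray()
    for f in freqs:
        for i in range(samples_per_tone):
            sample = amplitude * math.sin(2 * math.pi * f * i / rate)
            frames += struct.pack("<h", int(sample * 32767))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(bytes(frames))


if __name__ == "__main__":
    # Hypothetical data, e.g. one row of a spreadsheet: higher values sound higher.
    row = [3, 7, 5, 10]
    freqs = map_to_frequency(row)
    print([round(f) for f in freqs])  # [220, 597, 409, 880]
    render_tones(freqs, "row.wav")
```

A linear pitch scale is only the simplest choice; a designer might instead use a logarithmic (musical) scale or map values to loudness or tempo, which is exactly the kind of decision that currently lacks agreed standards.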

Issues with accessibility in immersive education require immediate attention. Clear definitions of accessibility, clear guidelines, and more transdisciplinary collaboration are needed to provide comprehensive guidance. While universal design is ideal, its full implementation may not always be possible; customizable design has thus emerged as an accepted alternative. Current literature focuses predominantly on neurodiversity and visual impairments, signaling a gap in research outside these areas. Concerted efforts are needed to broaden the scope of accessibility research and education and to raise awareness.

Customizability and participatory design remain critical themes in inclusive design practices, emphasizing users’ ability to tailor their XR experience to suit their unique needs. As educators and developers plan XR instructional design, they must navigate conflicting accessibility requirements and strike a balance that accommodates diverse learners. In the next section, we suggest an inclusive participatory design approach to XR instructional design, involving learners and instructors in equal partnerships with a focus on accessibility. The stages we propose are not definitive, and the active role that end users take in the design process, particularly in co-design, should be explored further. However, a more nuanced exploration of these constructs is outside the scope of this paper (see Konings & McKenney (2017) and Nordquist & Watter (2017) for a more detailed review).

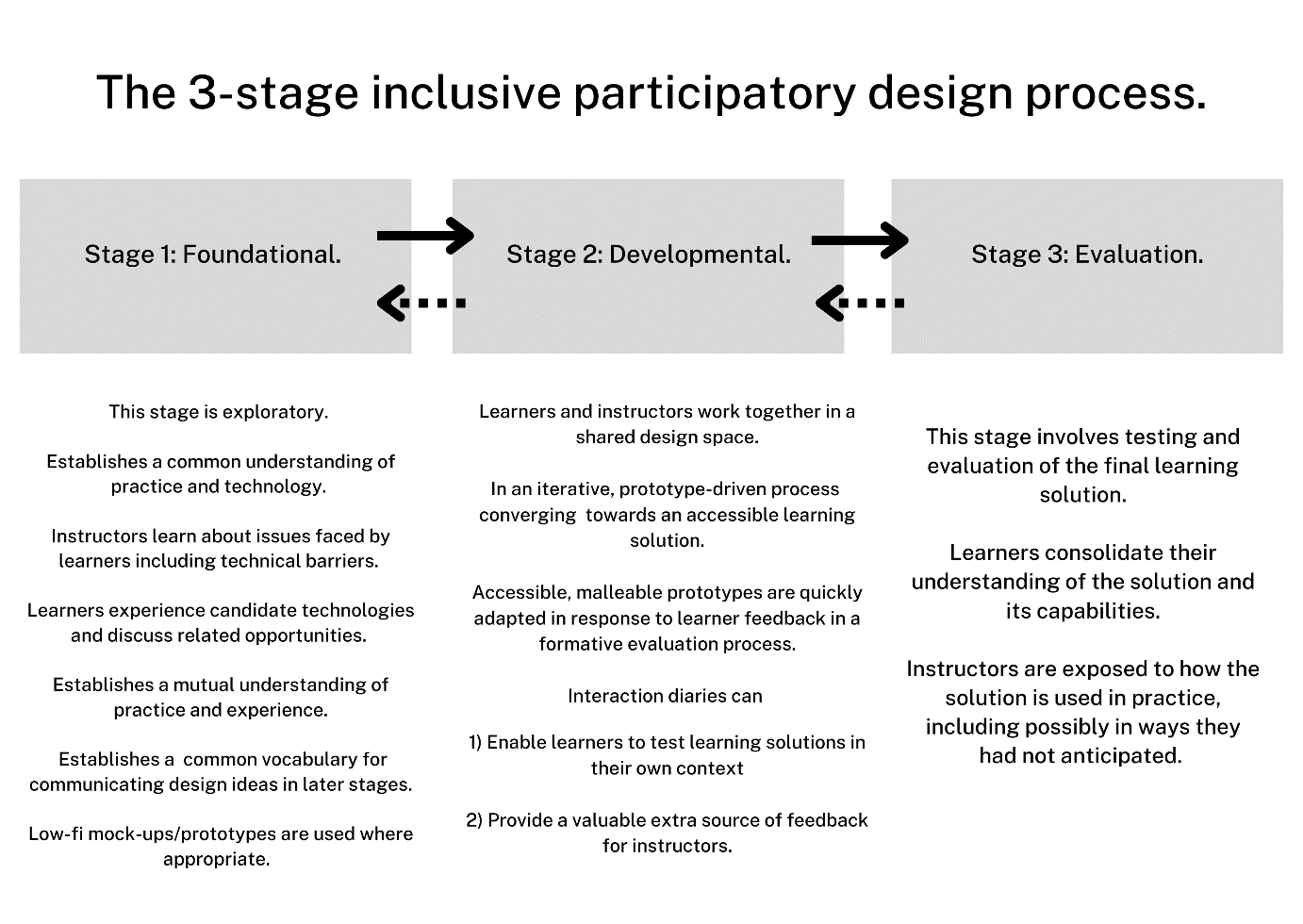

Inclusive collaborative design typically comprises several stages (Metatla et al., 2015). We discuss stages one and two in detail; stage three, the final evaluation, is covered more briefly because it follows from the iterative process of the first two stages and requires less explanation (see Figure 1).

The initial stage is likely to be exploratory, aiming to establish a common baseline of understanding of practice and technology before attempting to engage instructors and learners in generating and capturing broad design ideas. This initial stage is valuable in helping all participants establish a deeper understanding of context and possibilities. From the instructors' perspective, this includes learning about the issues learners face, including those relating to disabilities, and recognizing when and where current technology fails to address those issues. From the learners' perspective, this includes encountering and understanding the capabilities of new technology and its possibilities and exchanging experiences with fellow learners. Essentially, only after each party has learned more about these independent aspects (context and technological capabilities) are they ready to move into a shared design space where they can effectively explore and generate instructional design ideas together.

Demonstrations of candidate technologies that may be involved in solutions can be valuable in this initial stage. These demonstrations help familiarize all stakeholders with technologies they may or may not have encountered before. Additionally, such demonstrations can help establish a common vocabulary between instructors and learners, which can then be used to express and communicate design ideas in later parts of the instructional design process. This is particularly beneficial for technologies such as XR, haptics, and sonification, which lack a widely understood vocabulary for describing percepts or interactions. A lack of vocabulary has previously been found to hinder design activities (Obrist et al., 2013).

Despite these benefits, it is essential to bear in mind that the advantages of demonstrations and low-fidelity prototyping may be limited for some populations. In a participatory design study with blind and visually impaired (BVI) people, early demonstrations had the benefits described above, and as the design process developed and became more focused, participants attempted to use the provided materials to create audio-haptic mock-ups. However, as discussions unfolded, BVI participants drifted away from these materials and focused solely on verbal descriptions. It was observed that the fewer materials BVI participants used, the more ideas they expressed (Buruk et al., 2021; Maguire, 2020; Metatla et al., 2016). Thus, constructing these mock-ups seemed to hinder rather than encourage communication. While several possible explanations for this were proposed (Metatla et al., 2015), the findings were in line with previous work that found narrative, scenario-based design to be particularly effective with BVI participants (Okamoto, 2009; Sahib et al., 2013).

The second stage of the inclusive collaborative design process involves addressing the details of tasks and functionality in an iterative design process. This stage provides an opportunity to collectively scrutinize finer aspects of instructional design, thus offering a joint learning space where learners become more familiar with the technology and techniques, such as sonification, and instructors gain a deeper understanding of learners’ needs.

Accessible digital implementations of highly malleable XR prototypes are critical in ensuring the success of participatory prototyping sessions at this stage. The prototypes’ capacity to adapt in response to changes and feedback generated from the joint prototyping process is crucial (Kyng, 1991). Presenting participants with different alternatives and quickly reprogramming features affords an immediacy of ideation and feedback that encourages engagement and exploration and can shorten the time between successive iterations of the development process.

Furthermore, the accessibility of these digital prototypes overcomes the limitations of the physical audio-tactile mock-ups described earlier, which were found to hinder rather than nurture communication with BVI users. Digital implementations of highly malleable prototypes instead enable the exchange of design ideas and provide a more supportive communication medium between participants and instructors (Metatla et al., 2015).

Figure 1

The diagram shows the three stages of the Inclusive Participatory Design process. Progression through the three stages is primarily linear, from stage 1 to stage 3, as indicated by the solid arrows. In contrast, the dotted arrows indicate that there may occasionally be a need to revisit an early stage to consolidate understanding.

Interaction diaries can also be valuable in several ways during this second stage. Interaction diaries allow participants in a usability study to record their interactions with a prototype and make these recordings available to the prototype designers to inform the design process. The interactions are recorded privately, with participants working at their own pace, often in their preferred home or working environment. The participant and the prototyping team decide the medium used to record their interactions. Typical recording methods include video or audio recording or participants writing about their interactions and their wider user experience.

In the study by Metatla et al. (2015), BVI participants made audio recordings of their interactions with the prototype, enabling the designers to hear the synthetic voice output of the screen readers the participants used during the interaction. Asking learners to document their interaction experiences in a medium comfortable to them, whether audio, video, pictorial, or written, can benefit both learners and instructors. First, diaries can extend reflection about designs beyond the participatory sessions themselves. Participants can return to their familiar home or study settings, re-experience the tasks with their own technology, compare this to what they experienced with the new prototypes, and record these reflections in an interaction diary. Secondly, such diaries give instructional designers an additional source of feedback, providing access to actual in-situ experiences, including learners' current accessibility solutions. Learners can provide running commentary, as in a think-aloud protocol, explaining the rationale for specific interactions, issues, and potential solutions. Interaction diaries can thus give direct access to actual experiences with assistive technology that would otherwise be hard for XR instructional designers to observe (Metatla et al., 2015).

The two stages constituting this approach are complementary in the nature and aims of the activities they encompass. Together, they enable the exploration of XR experiences with built-in adaptability features: depending on their needs, learners can personalize their XR experience, from changing visual settings to accessing alternate forms of content, through the malleable prototypes described above. While Stage 2 is characterized by exchanging ideas and suggestions and by formative feedback from users to the learning designers, a third stage might be included in which a final evaluation takes place. Here, learners provide summative feedback on the final learning solution. At this stage, learning designers see how the final solution is used in practice, possibly in ways they had not anticipated.

Designing inclusive XR experiences poses its own challenges, but the process of authoring these experiences adds another layer of complexity. In the next section of the paper, we share our firsthand experience of collaborating with dental educators to tackle the unique challenges of authoring inclusive XR content. By closely collaborating with educators in the field, we acquired valuable insights into the practical obstacles and opportunities inherent in the authoring process. Through this collaborative effort, our goal is not only to overcome existing barriers but also to pave the way for more accessible and inclusive XR content creation practices in the future.

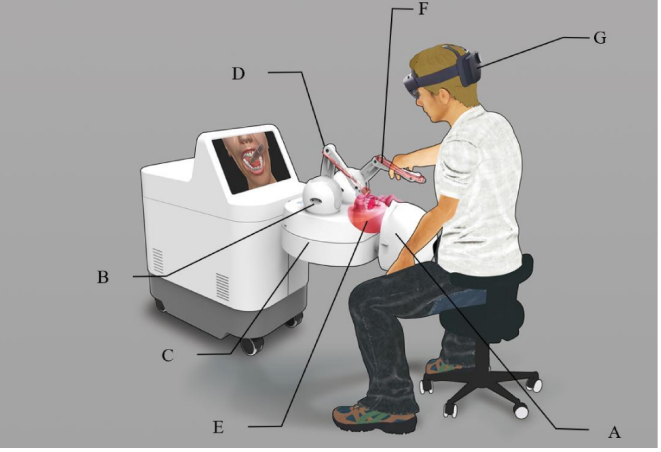

To gain a comprehensive understanding of the challenges dental educators face in using VR-haptic simulators (see an example in Figure 2), we conducted a mixed-methods study involving both surveys and interviews. The study was approved by the Institutional Review Board (Ethics Committee) of the School of Electronics Engineering and Computer Science (EECS) at Queen Mary University of London. All participants provided informed consent before taking part in the survey and interviews.

The survey was distributed by email as an online questionnaire to dental education departments at seven UK universities equipped with VR-haptic simulator systems. It contained 20 multiple-choice and open-ended questions covering perceived benefits, challenges, and needs for improvement in using VR simulators. Seven respondents, representing their departments or faculties, completed the survey. Participants included dental educators (clinical lecturers and professors), VR-haptic lab technologists, and e-learning managers, with an average of seven years of experience in their respective roles. Survey data were analyzed using descriptive statistics and thematic coding of open-ended responses.

Figure 2

Schematic diagram of the Unidental Mixed Reality (MR) Simulator with (A) a phantom head, (B) a haptic device base, (C) a haptic device motion platform, (D) haptic device handles, (E) virtual oral environment, (F) virtual dental instruments (red), and (G) mixed reality eyeglasses (Li et al., 2022).

Following the survey, we conducted semi-structured interviews with four respondents representing their faculties or departments from Queen Mary University of London, King's College London, University of Newcastle, and Queen's University Belfast/NIMDTA. Interviewees were selected based on their diverse expertise in VR haptic dental simulation (VRHDS) and dental education. Each interview lasted 45 to 60 minutes and was conducted via video call. We used an interview guide with ten open-ended questions, employing follow-up probes as needed. Interviews were audio-recorded, transcribed, and analyzed using thematic analysis.

We then applied thematic analysis across the survey and interview data, allowing us to triangulate our findings. By comparing the quantitative and qualitative results, we corroborated the survey findings with detailed explanations and specific examples from the interviews. Details of the ethics procedures, sample surveys, and interview questions are provided in the appendix.

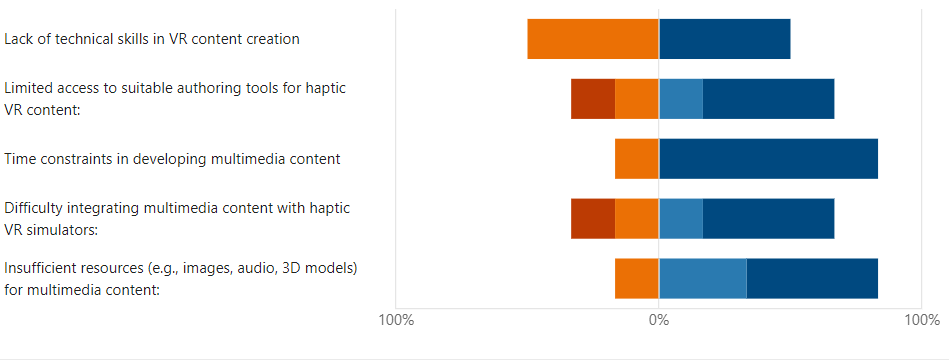

Our survey findings shed light on the current use of haptic VR technology in dental education curricula across the UK (Figure 3). A majority of respondents (five of seven, 71.4%) reported incorporating haptic VR into their teaching programs. Additionally, nearly half (three of seven, 42.9%) indicated they had personally created multimedia content specifically designed for haptic VR experiences.

Figure 3

Survey results for questions on challenges encountered when creating content for VRHDS (Rating 1 being not challenging, Rating 5 being highly challenging)

Our analysis revealed four primary themes: content creation challenges, technical expertise barriers, time constraints, and interest in emerging technologies alongside concerns about pedagogical ownership. We present these themes below with representative quotes from participants.

Dental educators had significant difficulties creating 3D content tailored to specific clinical cases and learning objectives. While pre-installed content was available, it often fell short of meeting particular educational needs.

One educator noted: "A particularly demanding aspect of my role involves constructing models for specific cases or scenarios, especially those intended to assess student competencies in examination settings."

The authoring tools for developing custom 3D models and interactive VR experiences presented a steep learning curve for many educators.

A professor stated: "I really don’t know how to use any of the 3D modelling tools the best I have done is try to use ChatGPT to create a sample image for a case for me. You know, I have been teaching for a long time, and have acquired many 2D images of varied cases over the course of my teaching years."

Even for e-learning technologists and educators with some technical skills, the time required to create custom XR content was a significant barrier.

An e-learning technologist respondent commented: "Even with my knowledge of some authoring tools, I get a lot of requests for cases but I have very limited time to create 3D content; I have to teach, I have other duties, and then there is research."

Educators worried about maintaining pedagogical control and ownership when relying heavily on pre-built content or external technical support.

One professor noted: "I'm quite intrigued by the prospects of AI-driven solutions that could facilitate the direct generation of high-caliber content. It could also help us with the challenge of getting images/3D models of custom cases for teaching because right now, we have a challenge with some patient 2D images as those always must come with consent which we sometimes don’t have. However, it's paramount that any such solution meets the exacting standards of quality and sophistication that align with our teaching objectives as this is crucial for effective educational materials."

These insights underscore the complex landscape of content creation for haptic VR in dental education, highlighting both the challenges educators face and their innovative approaches to overcoming these obstacles. Our study revealed that one of the primary challenges for dental educators is the extensive time and specialized skills required to create 3D content tailored to specific clinical cases and learning objectives. While VR-haptic simulators typically come pre-installed with manufacturer-provided content, the limited scope of these pre-built resources often requires instructors to create their own supplementary materials.

However, the authoring tools available for developing custom 3D models and interactive VR experiences pose significant barriers. These tools frequently have steep learning curves and demand technical expertise that many dental educators lack, such as 3D modeling, animation, and programming skills. Furthermore, running this software may require specialized hardware and computing resources beyond what is readily available to instructors. As a result, many find translating their pedagogical vision into immersive VR content overly complex, laborious, and time-consuming. Even for the rare ‘geeky’ dental educators with 3D modeling skills, finding the time to create these models amid an already heavy teaching schedule is very challenging.

These challenges often result in limited or generic XR experiences that may not align with the instructor's pedagogical intentions. Consequently, students who interact with this content may have a suboptimal learning experience. If XR authoring tools offered simple ‘drag and drop’ interfaces and workflows comparable to those of standard authoring tools, dental educators might find it easier to take ownership of their pedagogical content. User-friendly XR authoring tools could empower educators to create more tailored and effective virtual learning experiences for their students.

Another recurring challenge dental educators face when authoring content for haptics, particularly with the available tools, is tool siloing. For instance, if a dental educator wishes to create a clinical case involving a 3D model of a mouth, with teeth and a tongue that animate like those of a real patient on a surgery bed, together with animated text and audio for instructional purposes, they may have to learn three different tools: one for the 3D model, another for the animation, and a third to process the audio file before integrating everything into the VR-haptics platform.

This siloed approach imposes a steep learning curve on educators and introduces complexity and inefficiency into the content creation process. The challenge becomes evident when trying to integrate the various elements seamlessly into a cohesive educational experience. Furthermore, dental VR-haptic simulators developed by manufacturers such as SIMtoCARE, Simodont®, and Virteasy Dental© may come with their own proprietary systems, adding another layer of complexity to the authoring process (Li et al., 2021). Addressing tool siloing could significantly improve the efficiency and effectiveness of content creation for dental educators using haptic technologies.
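One way to picture what an integrated, non-siloed workflow might produce is a single case package that gathers the outputs of the separate tools (3D model, animation, audio, text) and checks them for basic accessibility gaps before import. The Python sketch below is hypothetical: the `ClinicalCase` and `Asset` names, the asset kinds, and the checks are illustrative assumptions and do not correspond to the content format of any simulator named above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Asset:
    kind: str          # hypothetical kinds: "model", "animation", "audio", "text"
    path: str          # file produced by whichever separate tool created it
    caption: str = ""  # text alternative; expected for every audio asset


@dataclass
class ClinicalCase:
    title: str
    learning_objective: str
    assets: List[Asset] = field(default_factory=list)

    def add(self, asset: Asset) -> "ClinicalCase":
        """Append an asset and return the case so calls can be chained."""
        self.assets.append(asset)
        return self

    def accessibility_issues(self) -> List[str]:
        """Return simple gaps that could exclude some learners (illustrative rules)."""
        issues = []
        kinds = {a.kind for a in self.assets}
        if "model" not in kinds:
            issues.append("no 3D model")
        for a in self.assets:
            if a.kind == "audio" and not a.caption.strip():
                issues.append(f"audio '{a.path}' has no caption or transcript")
        if "audio" not in kinds and "text" not in kinds:
            issues.append("no audio or text description accompanies the visuals")
        return issues


if __name__ == "__main__":
    # Hypothetical case assembled from three separately authored files.
    case = ClinicalCase("Example case", "Example learning objective")
    case.add(Asset("model", "mouth.glb")).add(Asset("animation", "tongue.anim"))
    case.add(Asset("audio", "narration.wav"))
    print(case.accessibility_issues())
    # ["audio 'narration.wav' has no caption or transcript"]
```

Keeping the accessibility check next to the asset list reflects the workshop takeaway that accessibility should be part of the design process from the start rather than an afterthought.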

Current advances in generative AI, including text-to-image, image-to-image, text-to-3D, image-to-3D, and audio generation models, as well as other generative algorithms, present an opportunity to reduce the complexity of multimedia content creation that underlies the challenges dental educators and other practitioners face in XR content authoring (Gao et al., 2022; Kokomoto et al., 2021; Yi et al., 2023). Researchers can now use these emerging machine capabilities more naturally, through text and speech, much as they would talk to an experienced professional designer, in the participatory design and creation of instructional XR content (see Figures 4 and 5 for examples). In this partnership, the human, such as the dental educator who owns the pedagogy, retains agency and control over the design process, while the machine, with its extensive generative capabilities, assumes the role of a developer. This kind of partnership between humans and AI for authoring 3D XR content is an emerging field now referred to as AI-aided design.

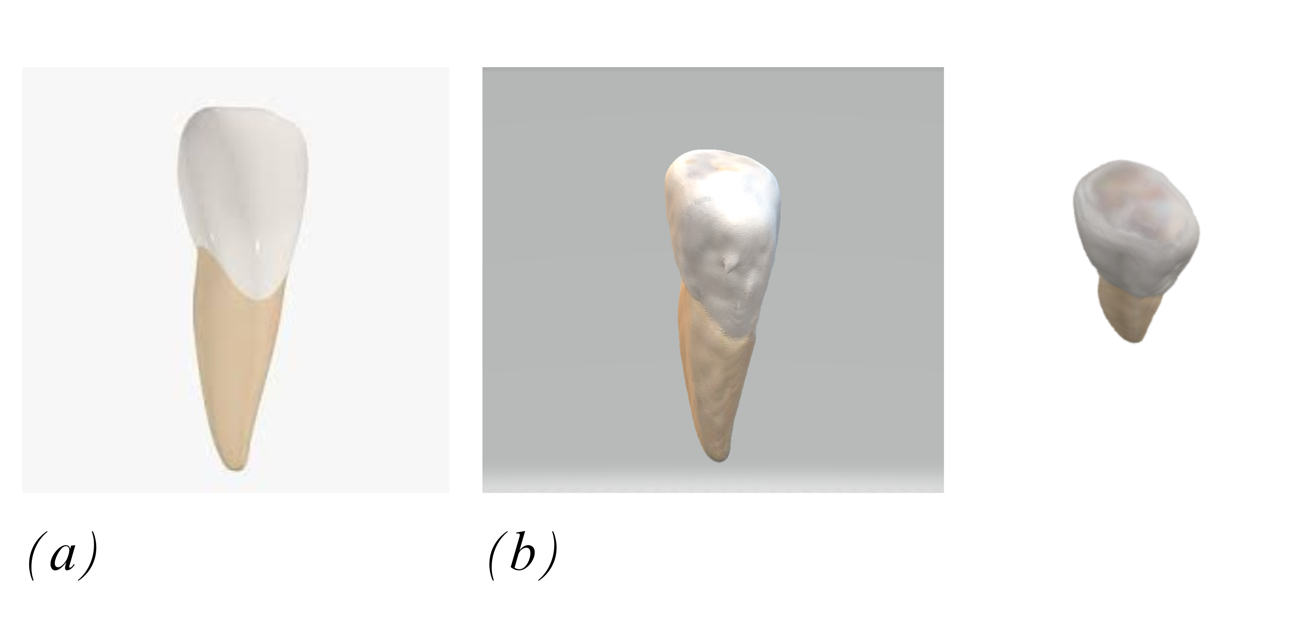

Figure 4

(A) A 2D image of a single tooth fed to a Generative Gaussian Splatting Model for Efficient 3D Content Creation. (B) The final 3D model generated from the 2D tooth image using the Generative Gaussian Splatting Model.

In our own work, we have begun exploring AI-aided design for dental education; the approach is already being studied in other industries, such as automotive design and even rocket design (Broo, 2023).

When used to generate 3D models from 2D images and text (see examples in Figures 4 and 5), AI can simplify the design process and automate parts of XR content development, reducing the need for advanced technical expertise (Broo, 2023). Moreover, when this process is coupled with instructional design guidelines for inclusivity and accessibility that embrace multi-modal approaches, it can further enhance the accessibility and inclusivity of immersive dental simulations.

By combining modalities such as 3D visuals, animations, text instructions, audio narration, and haptic feedback, educators, with the help of generative AI, can cater to a wide range of learner needs and abilities. For example, learners with visual impairments may benefit from audio descriptions or from haptic feedback that provides tactile cues, while learners with hearing impairments can rely on visual aids and captions. Neurodivergent learners may find multi-sensory experiences created through AI-aided design more engaging and better suited to their individual cognitive processing styles.

Furthermore, multi-modal approaches can reinforce learning and aid in knowledge retention by presenting information through multiple channels. For instance, a dental simulation could incorporate 3D visuals of a patient's mouth, animated step-by-step instructions, audio explanations, and haptic feedback simulating the sensation of using dental instruments.

Figure 5

User Interface (UI) for Inclusive XR Learning: 3D Model with Audio Narration Feature

AI can therefore help educators, through a participatory process, create XR experiences that cater to all students. Features such as automatic subtitles, adjustable contrast and brightness settings, adjustable haptic feedback, and alternative content formats can be readily incorporated. However, a balance must be struck: educators should remain at the forefront of content development, and AI should be seen as a supportive tool rather than a replacement for the educator's expertise. Educators' deep understanding of pedagogy and student needs should guide the AI-driven design process, ensuring that the resulting XR content is educationally sound and accessible.

Educators should retain the right to approve, modify, or decline any AI-generated content or suggestion, so that their teaching vision remains uncompromised. A related concern is how to preserve pedagogical ownership of the content. Pedagogical ownership of instructional material means that instructors understand the content, its delivery mechanism, and the pedagogy underpinning it; they can develop and deliver the content to learners, reflect on its effectiveness, and adapt it to better suit students' learning needs (Ben-Naim et al., 2010; Newlin, 2014). Relying too heavily on AI-aided content development could erode this ownership and pull the content away from the educator's original teaching intentions.

AI-aided design raises numerous ethical considerations for responsible use. Transparency is crucial: AI systems and their decision-making processes should be clear and understandable to all users, avoiding a black-box effect in which an instructor might unintentionally perpetuate biased outputs. Without such transparency, algorithmic bias can cascade through AI systems, leading to unjust outcomes that reflect and amplify societal prejudices. The deployment of AI should also be limited to what is necessary to achieve legitimate goals, thereby minimizing potential harms and vulnerabilities. All AI actors, including designers, instructors, and users, share responsibility for these ethical considerations.

In the dynamic landscape of immersive education, there is a clear need for collaborative partnerships between students, educators, AI, and other stakeholders. Such an alliance should be designed to harness each stakeholder's strengths, creating an environment where technology complements human expertise. AI serves as a catalyst, supplying technical tools and inclusivity options that enhance the educational experience; these tools could enable personalized learning and accommodate diverse groups of learners and their accessibility requirements. Meanwhile, students and educators would steer the pedagogical direction, ensuring that the content aligns with accessibility needs and intended learning outcomes. Their expertise is essential for cultivating critical thinking, creativity, and a deep understanding of subjects. This collaborative partnership would enable a more holistic approach to education, in which the integration of AI and human guidance fosters an inclusive, adaptive, and effective learning environment.

As XR continues to emerge as a vital tool in education, its design and inclusivity challenges must be addressed. This paper aimed to introduce key principles to guide the instructional design of XR learning experiences, with a focus on inclusivity and accessibility. It also proposed AI-aided content creation as a way to reduce the XR technical expertise required of instructors. Our work contributes to the literature on applied instructional design by addressing two challenges: (1) the adoption of effective, accessibility-focused instructional design principles for developing inclusive XR experiences; and (2) the development of AI-aided authoring tools for XR experiences that preserve instructors' sense of pedagogical ownership.

In summary, a participatory, AI-aided approach to design and authoring, aligned with the principles of inclusivity, can serve as a bridge that enables instructors to use the technology and ensures that all students benefit from this powerful educational platform. As we embrace this future, however, we must prioritize pedagogical ownership, accessibility, and inclusivity, ensuring that technology remains a tool rather than the driving force in the educational process.

Future research and practice in inclusive XR instructional design and authoring should focus on understanding the needs, preferences, and challenges of diverse user groups, including learners with disabilities, non-native language speakers, and learners from different cultural backgrounds. Incorporating user feedback into the design process is essential to ensure inclusivity and accessibility. Additionally, efforts should be directed towards developing AI-aided accessibility solutions and investigating how AI can be leveraged to generate inclusive XR content that adheres to accessibility standards, for example by providing alternative text for visual elements, audio descriptions, and navigational aids.

Progress in inclusive XR design practices can be furthered by creating collaborative XR authoring tools which facilitate real-time collaboration between educators, students, and AI systems. These tools should enable stakeholders to co-create XR content while adhering to pedagogical guidelines for inclusive instructional design. Empirical studies will be needed to assess the effectiveness of inclusive, participatory XR instructional design approaches supported by generative AI, measuring learning outcomes, engagement levels, and user satisfaction.

Additionally, it is essential to explore ethical considerations surrounding the use of AI in XR instructional design, including bias, privacy, and equity issues, and develop guidelines for responsible deployment. Further research could focus on developing training programs and resources to support educators in effectively using AI-aided XR authoring tools, alongside providing ongoing professional development opportunities to keep educators informed about emerging technologies and inclusive design practices.

Pursuing these recommendations can advance the development of inclusive participatory XR instructional design and authoring with generative AI, ultimately benefiting accessibility, engagement, and learning outcomes for all students.
