EdTech Archives

International Consortium for Innovation and Collaboration in Learning Engineering (ICICLE) 2024 Conference Proceedings: Solving for Complexity at Scale

The aviation industry has a longstanding history of influence in the field of instructional design, from World War II’s unprecedented demand for pilot training to the post-war effort, which expanded upon research and experience gained from the military’s systematic and technology-focused approach to learning (Dick, 1987). Reiser (2001) attributes these efforts to the mid-century educational and behavioral psychology movement, including the rise of influential figures such as Skinner, Mager, Bloom, and Gagné.

With each technological innovation, new possibilities for creating and sharing learning experiences arise. Beginning in the late 1980s and early 1990s, as personal computers became more accessible, industries began experimenting with computerized training formats to replace or supplement traditional methods. When organizations and industries adopt new technologies, however, they often develop their own proprietary courseware formats, which leads to a lack of uniformity in design and function.

Consider the challenge for the aviation industry when proprietary courseware formats inhibited critical updates from consistently flowing across different organizations, undermining uniform safety standards. To address this issue, the Aviation Industry Computer-Based Training Committee (AICC) and the Advanced Distributed Learning (ADL) Initiative collaborated to enhance research efforts focused on standardizing distributed and digital learning and its interoperability within learning systems and environments (McDonald, n.d.; ADL Initiative, 2023). Although initially aimed at addressing regulatory and compliance needs specific to the aviation industry, these organizations acknowledged that their advancements could benefit the global training community.

The Sharable Content Object Reference Model (SCORM), created by the ADL Initiative in 2000, became, and remains, one of the most widely adopted e-learning publishing standards. At a basic level, SCORM specifies how instructional content is packaged and how it communicates with the platform that delivers it, allowing that platform to track basic learner progress, such as completion status and quiz scores, but little more. Furthermore, this tracking must occur within an online learning environment, typically a learning management system (LMS). If you have ever suffered through a tedious online HR compliance course, you have probably experienced SCORM’s click-through mechanics in action: countless “Next” screens intended to monitor completion rather than genuine engagement or learning.
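To make “but little more” concrete, the sketch below models the two SCORM 1.2 run-time data-model elements that most LMS reports surface: the lesson status and the raw score. It assumes SCORM 1.2 specifically (SCORM 2004 splits status into cmi.completion_status and cmi.success_status). SCORM 1.2 defines a few more elements, such as session time and optional cmi.interactions for individual question responses, but all of them are reported inside the LMS session. The helper name and the sample record are hypothetical illustrations, not part of any LMS API.

```python
from typing import Dict

# Vocabulary for cmi.core.lesson_status in the SCORM 1.2 run-time data model.
LESSON_STATUS_VALUES = {
    "passed", "completed", "failed", "incomplete", "browsed", "not attempted",
}


def summarize_scorm12(record: Dict[str, str]) -> Dict[str, object]:
    """Reduce a SCORM 1.2-style record (hypothetical helper) to status and raw score."""
    status = record.get("cmi.core.lesson_status", "not attempted")
    if status not in LESSON_STATUS_VALUES:
        raise ValueError(f"invalid cmi.core.lesson_status: {status!r}")
    # SCORM values travel as strings; an empty raw score means no score was reported.
    raw = record.get("cmi.core.score.raw", "")
    return {"status": status, "score": float(raw) if raw else None}


# Hypothetical record for one learner in a compliance course.
print(summarize_scorm12({"cmi.core.lesson_status": "passed", "cmi.core.score.raw": "85"}))
# -> {'status': 'passed', 'score': 85.0}
```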

Although newer e-learning standards, like the Experience Application Programming Interface (xAPI), have emerged, the broader education field has been slow to adopt them. To understand xAPI, it may help to first understand what an application programming interface (API) is: a set of rules that allows different software systems to communicate with each other and share data. The “x” in xAPI stands for “experience”: xAPI statements (structured data records, formatted in JSON, that follow an actor-verb-object pattern such as “learner completed module”) are used to collect data on what a learner experiences or does while interacting with learning content. In short, xAPI is an API built specifically for tracking learning experiences, including those that occur well beyond an LMS. In the proposed research design, we highlight how data-rich standards like xAPI can support iterative instructional design improvements and advanced analytics in UAS training environments.
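The actor-verb-object structure is easiest to see in a concrete statement. The Python sketch below builds one as a plain dictionary and serializes it to JSON. The verb IRI http://adlnet.gov/expapi/verbs/completed comes from the ADL verb vocabulary; the learner, email address, activity IRI, and result values are hypothetical, and the helper make_statement is an illustration, not part of any xAPI library.

```python
import json
from typing import Optional


def make_statement(email: str, name: str, verb_id: str, verb_label: str,
                   activity_id: str, activity_name: str,
                   result: Optional[dict] = None) -> dict:
    """Build a minimal xAPI statement: actor (who), verb (did what), object (to what)."""
    statement = {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
    }
    if result is not None:
        # Optional result: score, success flag, and an ISO 8601 duration for this activity.
        statement["result"] = result
    return statement


statement = make_statement(
    "pilot@example.org", "Example Pilot",
    "http://adlnet.gov/expapi/verbs/completed", "completed",
    "https://example.org/uas/modules/preflight-checklist",  # hypothetical activity IRI
    "Preflight Checklist",
    result={"score": {"scaled": 0.85}, "success": True, "duration": "PT12M30S"},
)
print(json.dumps(statement, indent=2))
```

Because each statement is self-describing, the same shape can record activities that happen outside an LMS, such as a simulator session or a field exercise.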

Researchers at a regional uncrewed aviation systems (UAS) test site seek to explore successful models for developing e-learning for emerging aviation technologies. In this research exploration, we first examine current methodologies, practices, and standards. Despite being introduced over two decades ago, SCORM remains the dominant standard in online learning. However, the ADL Initiative (2023) and IEEE Standards Association (2024) have recently formalized xAPI, a more flexible standard that captures fine-grained learner data (e.g., specific interactions, time spent on content, performance on individual tasks) across various digital learning environments.

Bates (2022) criticizes the ADDIE (Analysis, Design, Development, Implementation, Evaluation) model for its inflexibility: although foundational to instructional design, ADDIE can be slow to adapt to new technologies and dynamic educational environments. Analogous arguments could be made against the continued use of the dated SCORM specification to measure and evaluate e-learning in modern learning environments. Just as ADDIE can lag behind dynamic shifts, SCORM limits deeper insight into learning processes and outcomes, underscoring the need to move beyond outdated models, specifications, and practices to meet modern instructional development demands.

In the context of this proposed study, we once again look toward the aviation industry and its potential influence on instructional design to address this challenge. According to an annual Federal Aviation Administration (FAA, 2023) report, the emergence of uncrewed aviation systems (UAS) has revolutionized military and civilian applications such as commerce, surveillance, and emergency management. The same report identifies UAS as the fastest-growing aviation sector in the U.S. and calls for more research and training to develop skilled drone pilots.

As with the AICC’s mission in the late 1980s, answering the FAA’s call requires careful adherence to evolving safety and regulatory requirements. As with earlier demands in aviation training, the urgent and dynamic nature of UAS operations appears to call for a scalable learning development approach that can meet these demands rapidly. As the demand for rapid e-learning development increases, so does the need for effective instructional design strategies (Tîrziu & Vrabie, 2015). This study proposes applying Learning Engineering (LE) principles combined with Design-Based Research (DBR) to develop UAS e-learning as an effective solution to these pressing needs.

The core challenge in this study lies in updating e-learning design and development methods within an evolving domain—UAS operations—that requires real-time, precise training data. Relying on older publishing standards, such as SCORM, restricts the depth of data and range of learning environments in which that data can be collected. As a result, instructional designers lack the detailed insights they need to iteratively improve UAS pilot training in fast-moving contexts where competencies must be both demonstrated and documented for regulatory and safety purposes.

Kessler and colleagues (2023, p. 32) explain that learning engineering always starts with a learner-centered challenge or problem. In this study, the challenge is: How can we systematically produce robust, data-driven e-learning for UAS pilot training in a way that captures the complexity of the learning environment, supports rapid iteration, and ensures that learner efficacy remains at the forefront? By applying learning engineering as a practice within a design-based research (DBR) framework, the research aims to address these gaps by leveraging flexible data-collection methods (e.g., xAPI) and human-centered design principles.

Accordingly, the overarching research question emerges: How does applying learning engineering (LE) principles through design-based research (DBR) enhance the development process while maintaining learner efficacy in UAS e-learning courses? Specifically, we seek to investigate how learning engineering practices can optimize the systematic development of UAS e-learning courses using iterative improvements, detailed learning data, and user feedback. Correspondingly, we intend to examine how design-based research as an LE process can be used to continuously improve UAS e-learning courses based on learner feedback and performance data.

Propelled by these research questions, the study’s design is grounded in empirical literature and aims to demonstrate that LE and DBR are viable strategies for answering the FAA’s call. While the study’s context is UAS e-learning development, the broader instructional design principles it applies are rooted in the learning sciences. The subsequent sections further detail this fusion of theory applied through practice.

Much like UAS, learning engineering (LE) is an emerging discipline. Learning engineering can be defined as both a structured process and an applied practice, employing human-centered design and data-driven strategies grounded in the learning sciences to create and refine effective learning experiences (Goodell, 2023, p. 9). Learning engineering, as described by Kessler, Craig, and colleagues (2023), is a systematic process designed to iteratively develop, test, refine, and enhance learning conditions. Similar to the research endeavor depicted in this manuscript, learning engineering centers on a challenge to innovate or optimize learning or the conditions of learning within a specific context.

In the “Learning Engineering Toolkit” (2023), editors Goodell and Kolodner aim to clarify the field, showing how it extends beyond traditional educational roles to solve complex learning problems through a structured, interdisciplinary approach. The continued evolution of LE can be attributed to industry working groups that include advocates such as Goodell, Kessler, Craig, and many other scholars, including this manuscript’s author (Johnson). The International Consortium for Innovation and Collaboration in Learning Engineering (ICICLE) is one such industry group helping to drive this momentum.

ICICLE is one of many working groups under the IEEE Standards Association (IEEE-SA). In addition to refining LE as a discipline, ICICLE’s efforts are also directed toward the broader adoption of newer e-learning specifications beyond the previously mentioned SCORM standard. Recently, IEEE celebrated a milestone by publishing the xAPI standard, IEEE 9274.1.1-2023 (IEEE Standards Association, 2024). This e-learning specification provides a framework for a more granular and flexible approach to capturing enhanced learner data (ADL Initiative, 2023). Such data collection methods are pivotal to the research proposed in this paper, which offers a macro view of how LE can be practiced alongside other methodologies, such as design-based research, to optimize instructional design and improve learner outcomes.
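IEEE 9274.1.1 describes xAPI as a JSON data model paired with a RESTful web service, so recording a statement amounts to an HTTP POST to a learning record store (LRS). The sketch below only builds that request with Python's standard urllib.request; it does not send it. The endpoint URL and credentials are hypothetical, HTTP Basic authentication is assumed (LRS products vary in what they accept), and the X-Experience-API-Version value of 1.0.3 assumes an LRS implementing that version of the ADL specification.

```python
import base64
import json
import urllib.request


def build_statement_request(endpoint: str, statement: dict, key: str, secret: str,
                            version: str = "1.0.3") -> urllib.request.Request:
    """Prepare (but do not send) a POST of one xAPI statement to an LRS."""
    url = endpoint.rstrip("/") + "/statements"
    token = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(statement).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Experience-API-Version": version,  # xAPI expects this header on every request
            "Authorization": f"Basic {token}",
        },
    )


# Hypothetical endpoint, credentials, and minimal statement.
request = build_statement_request(
    "https://lrs.example.org/xapi/",
    {
        "actor": {"mbox": "mailto:pilot@example.org"},
        "verb": {"id": "http://adlnet.gov/expapi/verbs/attempted"},
        "object": {"id": "https://example.org/uas/modules/airspace"},
    },
    key="demo-key",
    secret="demo-secret",
)
print(request.get_method(), request.full_url)
# -> POST https://lrs.example.org/xapi/statements
# urllib.request.urlopen(request) would send it; that step is omitted here.
```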

Design-based research (DBR) is defined as “a research approach that engages in iterative designs to develop knowledge that improves educational practice” (Armstrong et al., 2020). Scholars like Edelson (2002) contend that DBR is uniquely capable of producing actionable knowledge that can be readily implemented, because it actively engages researchers in improving educational practice. Anderson and Shattuck (2012) highlight the iterative nature of DBR and its role in developing practical theories that inform practice, thus bridging a gap in educational research by linking theory with practical application. Similarly, Barab and Squire (2004) emphasize DBR’s significance in the learning sciences, particularly its capability to align empirical inquiry with real-world instructional design.

In essence, DBR closes the distance between researcher and practitioner, making it well suited to specialized domains like UAS pilot training. By conducting design and research in tandem, DBR fosters real-world adaptation and continuous improvement.

As previously suggested, DBR is a methodology that integrates design and research through grounding, conjecturing, iterating, and reflecting—allowing for adaptive research based on real-world feedback (Hoadley & Campos, 2022). Such responsiveness is key in UAS pilot training, where both technology and regulatory demands shift rapidly.

Learning engineering similarly encompasses design as an integral part of the creation phase, incorporating ideation, refinement, critique, culling, and selection of ideas (Thai et al., 2023). This process is inherently human-centered and necessitates close collaboration with a diverse set of stakeholders, as exemplified through design-based research. According to Thai and colleagues (2023), designers track their design decisions through logic models depicting how the various components of the design are expected to interact and complement each other.

Likewise, Sandoval (2014) illuminates this approach by introducing “conjecture mapping” as a systematic method for educational design research. This logic-modeling approach enhances precision and clarity by making explicit why design decisions are made, which could be especially beneficial for instructional designers and researchers in UAS pilot training, where rigorous and well-defined educational interventions are crucial.

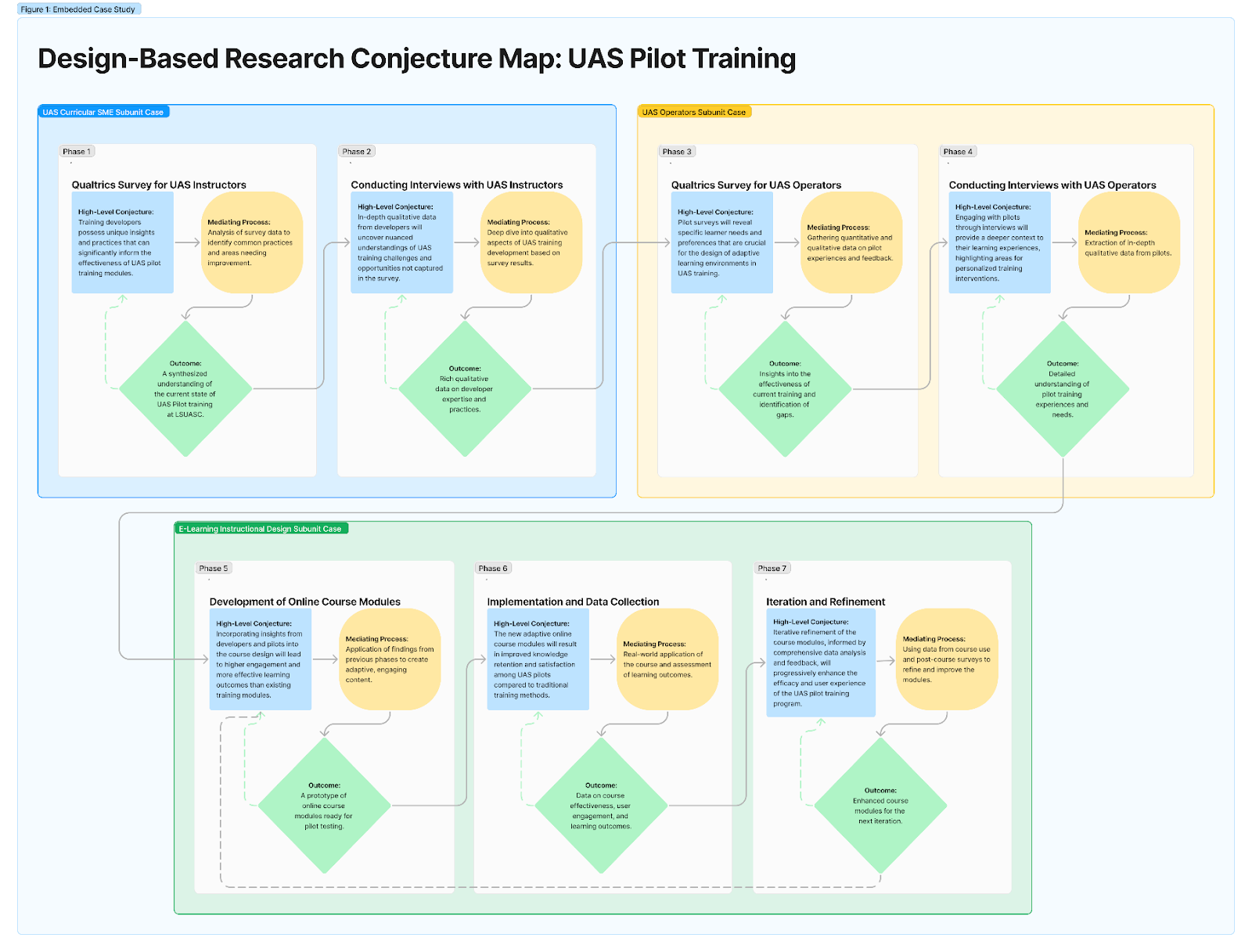
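Sandoval's conjecture maps link a high-level conjecture to design embodiments, the mediating processes those embodiments are expected to produce, and the intended outcomes; design conjectures connect embodiments to mediating processes, and theoretical conjectures connect mediating processes to outcomes. As a hedged sketch, that structure can be held as a small data type whose links are checked for consistency. The class, its method names, and every UAS-specific entry below are hypothetical illustrations, not the study's actual conjecture map.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ConjectureMap:
    high_level_conjecture: str
    embodiment: List[str]            # tools, task structures, participant structures
    mediating_processes: List[str]   # observable interactions and learner artifacts
    outcomes: List[str]
    design_conjectures: List[Tuple[str, str]] = field(default_factory=list)       # embodiment -> process
    theoretical_conjectures: List[Tuple[str, str]] = field(default_factory=list)  # process -> outcome

    def broken_links(self) -> List[str]:
        """List conjectures that point at elements missing from the map."""
        problems = []
        for source, target in self.design_conjectures:
            if source not in self.embodiment or target not in self.mediating_processes:
                problems.append(f"design: {source} -> {target}")
        for source, target in self.theoretical_conjectures:
            if source not in self.mediating_processes or target not in self.outcomes:
                problems.append(f"theoretical: {source} -> {target}")
        return problems

    def unlinked_outcomes(self) -> List[str]:
        """Outcomes that no theoretical conjecture yet explains."""
        linked = {target for _, target in self.theoretical_conjectures}
        return [o for o in self.outcomes if o not in linked]


cmap = ConjectureMap(  # hypothetical UAS example
    high_level_conjecture="Scenario-based practice with immediate feedback improves preflight decisions",
    embodiment=["scenario-based checklist activity", "immediate feedback on missed items"],
    mediating_processes=["learners revisit checklist items they missed"],
    outcomes=["higher preflight quiz scores", "higher self-reported confidence"],
    design_conjectures=[("immediate feedback on missed items",
                         "learners revisit checklist items they missed")],
    theoretical_conjectures=[("learners revisit checklist items they missed",
                              "higher preflight quiz scores")],
)
print(cmap.broken_links())       # -> []
print(cmap.unlinked_outcomes())  # -> ['higher self-reported confidence']
```

Holding the map as data makes explicit which intended outcomes still lack a theorized pathway, which is the kind of explicitness about design rationale that conjecture mapping is meant to provide.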

Grounded in the empirical literature presented, this study proposes a design-based research (DBR) framework within an embedded case study approach to investigate instructional design practices in UAS e-learning. This strategy explores systematic e-learning development using data-driven processes specified through learning engineering (LE) principles. The learning goals are firmly anchored in DBR principles, which here involve a comprehensive, iterative seven-phase research process, as illustrated by the conjecture map in the Appendix. The ultimate goal is to optimize the efficacy of UAS e-learning modules based on learners' performance and feedback. While the study engages a niche population, as described below, its findings could offer insights into the systematic crafting of effective e-learning beyond UAS operations.

Participants are selected through purposive sampling, based on their roles and UAS expertise, from the regional FAA UAS test site where they are employed. Possible participants include subject matter experts (SMEs) who have been involved in UAS pilot training or in developing its curricula, including pilot trainers. Additional participants include UAS pilots and others required to complete pilot training modules, such as visual observers and mission operations support staff. Voluntary recruitment will focus on those with direct experience in UAS operations and instruction. After informed consent is obtained, multiple forms of qualitative and quantitative data will be collected, as detailed below.

The study on UAS e-learning will employ a comprehensive data collection strategy. For qualitative data collection, a combination of survey instruments and in-depth interviews will be used. Quantitative data will comprise survey responses and learner course data collected through the e-learning publishing and distribution platforms.

The phased data collection approach begins with deploying Qualtrics survey instruments to gather initial insights to inform and refine in-depth interview questions in the subsequent phase. In Phase 1, a mixed-methods survey targets UAS curriculum developers and trainers to capture their unique insights into training needs and development approaches. The survey results are analyzed to identify key thematic areas, which are then used to organize and draft semi-structured interview questions in Phase 2. These interviews, conducted via secure video calls and recorded with participant approval, delve deeper into the themes relevant to the participants’ roles, skills, and experiences, allowing for a comprehensive understanding of the UAS training landscape.

Similarly, in Phase 3, the study pivots to gathering learners' perspectives through surveys designed to discern their specific learning experiences with UAS e-learning modules. These survey results inform the subsequent in-depth interviews conducted in Phase 4, where the aim is to gain a richer, more detailed context regarding the learners' experiences and challenges. This structured approach ensures that the interviews are directly informed by the most relevant data emerging from the surveys to enhance the depth and focus of the qualitative data collected.

In addition to the survey and interview data, extensive quantitative data will be collected throughout the phases, including user interaction data from the learning management system (LMS) and learning record store (LRS), such as SCORM- and xAPI-generated metrics. This data encompasses a range of learner behaviors, such as course completions, quiz scores, and detailed interaction patterns, including which modules and content blocks were revisited, skipped, or lingered on. These data sources provide a robust foundation for analyzing instructional design processes and learner outcomes.
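Revisited, skipped, and lingered-on content blocks can be derived from a chronological interaction log. The sketch below assumes xAPI statements have already been reduced, for one learner, to ordered (block_id, seconds_on_block) pairs; that reduced format, the block names, and the 120-second lingering threshold are hypothetical choices for illustration, not values from the study.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def interaction_patterns(events: List[Tuple[str, float]], course_blocks: List[str],
                         linger_seconds: float = 120.0) -> Dict[str, List[str]]:
    """Summarize one learner's chronological (block_id, seconds) events."""
    views = Counter(block for block, _ in events)   # times each block was opened
    dwell: Dict[str, float] = defaultdict(float)    # total seconds spent per block
    for block, seconds in events:
        dwell[block] += seconds
    return {
        "revisited": sorted(b for b, n in views.items() if n > 1),
        "skipped": [b for b in course_blocks if b not in views],  # kept in course order
        "lingered": sorted(b for b, t in dwell.items() if t >= linger_seconds),
    }


course = ["intro", "airspace", "weather", "preflight", "quiz"]
log = [("intro", 30), ("airspace", 95), ("preflight", 60), ("airspace", 50), ("quiz", 240)]
print(interaction_patterns(log, course))
# -> {'revisited': ['airspace'], 'skipped': ['weather'], 'lingered': ['airspace', 'quiz']}
```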

Phases 5 through 7 focus on synthesizing these insights to inform the design and iterative refinement of UAS e-learning modules. Phase 5 translates the findings from earlier phases into the design of engaging and effective course modules using Articulate Rise. In Phase 6, the course modules are deployed, with the expectation that they will enhance learning engagement and satisfaction among course participants. Finally, Phase 7 involves iterative refinement based on learner data analysis and feedback, which is expected to progressively improve the course's efficacy and user experience. This cyclical and iterative design process ensures that the e-learning modules evolve in response to real-world feedback, contributing to the long-term advancement of UAS e-learning practices.

Throughout each DBR phase, qualitative data is collected via survey instruments and interview transcripts for analysis. The researchers employ a multi-cycle approach to qualitative data coding, as detailed by Saldaña (2021). Initial cycles use in vivo (participants’ exact words) and open (exploratory) coding to capture emergent themes. Subsequent axial and focused coding link categories and subcategories across participant feedback, culminating in theoretical coding that informs final design refinements. Member-checking, in which participants review summarized findings to help validate or clarify the data or interpretations, helps ensure accuracy and trustworthiness of qualitative analysis. This multi-cycle coding strategy facilitates comprehensive and iterative analysis, aligning closely with the dynamic nature of DBR.

Quantitative data gathered from surveys, quiz scores, completion rates, and fine-grained xAPI logs will be statistically analyzed to identify trends and correlations in user engagement frequency, performance, and patterns of behavior within e-learning course activities. Together, these quantitative insights can support iterative design adjustments.

Since the research strategy comprises an in-depth analysis across multiple units within a case, we adopt an embedded case study methodology as classified by Baxter and Jack (2015). The multifaceted analyses described above are validated through member-checking and feedback loops, which help inform and refine subsequent DBR phases and, ultimately, the e-learning design. The researchers apply this comprehensive and systematic approach to rigorously triangulate each subunit analysis back to the overarching case phenomenon (Yin, 2009). Through an embedded case analysis approach, each subunit (e.g., pilot trainers vs. operations support staff) is examined and then integrated into an overarching case conclusion to address our main research question: How can LE principles and DBR processes enhance e-learning development for UAS?
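One small piece of the cross-subunit integration step can be sketched as set operations over the themes coded within each subunit: themes shared by every subunit point toward case-level conclusions, while themes unique to one subunit flag role-specific needs. This is a simplified, hypothetical illustration; the subunit labels and theme names are invented, and actual triangulation also weighs quantitative evidence and researcher interpretation.

```python
from typing import Dict, List, Set


def integrate_subunits(themes_by_subunit: Dict[str, Set[str]]) -> Dict[str, object]:
    """Split coded themes into those shared by all subunits and those unique to one."""
    theme_sets = list(themes_by_subunit.values())
    shared = set.intersection(*theme_sets) if theme_sets else set()
    unique: Dict[str, List[str]] = {}
    for unit, themes in themes_by_subunit.items():
        # Union of every other subunit's themes (empty if there is only one subunit).
        others = set().union(*(t for u, t in themes_by_subunit.items() if u != unit))
        unique[unit] = sorted(themes - others)
    return {"shared": sorted(shared), "unique": unique}


coded = {  # hypothetical themes from each subunit's interviews
    "pilot_trainers": {"checklist clarity", "scenario realism", "regulatory updates"},
    "ops_support": {"checklist clarity", "navigation confusion"},
    "visual_observers": {"checklist clarity", "scenario realism"},
}
print(integrate_subunits(coded))
# -> {'shared': ['checklist clarity'], 'unique': {'pilot_trainers': ['regulatory updates'], 'ops_support': ['navigation confusion'], 'visual_observers': []}}
```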

This study, while comprehensive in its approach to integrating learning engineering (LE) and design-based research (DBR) in developing e-learning for uncrewed aviation systems (UAS), is bound by certain conditions. Because the study relies on advanced learning technologies and standards like xAPI rather than traditional SCORM, it depends on their availability at the regional FAA UAS test site. Technical issues or constraints in the existing systems infrastructure could impact the deployment and evaluation of the e-learning modules. Likewise, findings from the niche domain of UAS operations may not generalize to other industries; further replication in different domains is encouraged.

This study proposes a research strategy to enhance the quality and scalability of UAS training by applying LE principles and DBR methodologies. By focusing on iterative design and data-driven decision-making, the researchers aim to create e-learning modules that are effective and adaptable to the rapidly evolving needs of the UAS industry. Despite its population and contextual limitations, the study’s findings could suggest a favorable framework for the broader application of LE and DBR across other learning domains. Additionally, this research could establish empirical groundwork for future research and innovation in the field of instructional design, helping to propel it to new heights.

The authors express their sincere gratitude to the key members of the Texas A&M University-Corpus Christi Autonomy Research Institute for their invaluable support of this research project. Special thanks go to Ronald Sepulveda for his exemplary leadership and unwavering advocacy, to Joe Henry for his resolute stewardship, and to Michael Sanders for facilitating the access and resources needed to pursue this research endeavor.

I extend my deepest appreciation to Dr. David Squires, my doctoral mentor and co-author, for his exceptional leadership as Principal Investigator and for his continuous support and mentorship throughout my doctoral journey. His ingenuity and guidance have been pivotal in shaping both this research and my development as a scholar.