EdTech Archives

International Consortium for Innovation and Collaboration in Learning Engineering (ICICLE) 2024 Conference Proceedings: Solving for Complexity at Scale

Generative AI, a rapidly emerging technology, has transformed education by enabling innovative learning solutions and enhancing the accessibility of learning resources. Its ability to comprehend natural language, process various prompts or instructions, and generate learning materials has significantly reshaped the educational landscape (Javaid et al., 2023; Elbanna & Armstrong, 2024). Yet its effects on student development remain a subject of ongoing debate and scrutiny. While students may be eager to adopt this technology for coursework to enhance their understanding of concepts, educators express concerns that students may come to rely on readily available answers generated by Large Language Models (LLMs), which could hinder the development of their critical thinking skills (Gammoh, 2024). Additionally, the unregulated nature of open-source materials raises questions about their trustworthiness and accuracy (Sun et al., 2024). Another challenge is that adapting Generative AI for pedagogical purposes requires teachers to learn about Generative AI and add prompt engineering to their skills (Lee et al., 2024; Walter, 2024). Collectively, these challenges make it difficult to define the appropriate uses of Generative AI in education, particularly with respect to fostering critical thinking skills.

Learning engineering provides a systematic approach to tackle these learning challenges by identifying issues, designing solutions, implementing changes, and refining them through ongoing evaluation (Kessler et al., 2022). In the context of an emerging technology like Generative AI, this process starts by examining stakeholders’ needs based on their perspective of the obstacles that hinder critical thinking, then customizing Generative AI to address these needs, using the tools in real learning scenarios, and iteratively modifying the solution or product. Throughout the process, teachers must have agency in shaping learning outcomes, and students must be motivated to adopt the tool despite the differences in their perspectives regarding AI.

The methodology we applied in developing a platform during the AI for Education Hackathon at Arizona State University (ASU) was inspired by this iterative, inquiry-driven problem-solving approach of learning engineering. The hackathon provided an opportunity to explore how AI could be integrated into education to enhance student learning while addressing educators’ concerns. The event challenged participants to reimagine education by leveraging AI models to foster collaboration, enhance accessibility, and support critical thinking. Working in interdisciplinary teams, we engaged in structured problem identification and designed a solution followed by rapid prototyping. Our design process was shaped by mentorship from industry leaders, including Amazon and OpenAI, who provided access to their AI systems to support the development process.

Our solution, “ThinkPal.AI,” is a web-based platform that aims to bridge the gap between how educators and students use Generative AI in learning environments. Reflecting on this structured approach, we explore a key question:

In what ways do learning engineering principles emerge in the design of a Generative AI-based platform for education, and how can they inform future iterations of Generative AI integration for educators and students?

By examining our development process through the lens of learning engineering, we aim to identify key challenges in integrating Generative AI into education, assess the role of structured teacher-guided AI interactions, and explore how these insights can shape future AI-enhanced learning environments. In doing so, we offer a structured approach to help educators, learning engineers, and ed-tech developers design AI-driven learning solutions that effectively balance automation with meaningful human oversight. The following sections outline the objectives of our project, the learning engineering principles that shaped our design, our methodology, the resulting platform features, future implementation plans, and the limitations and challenges we encountered.

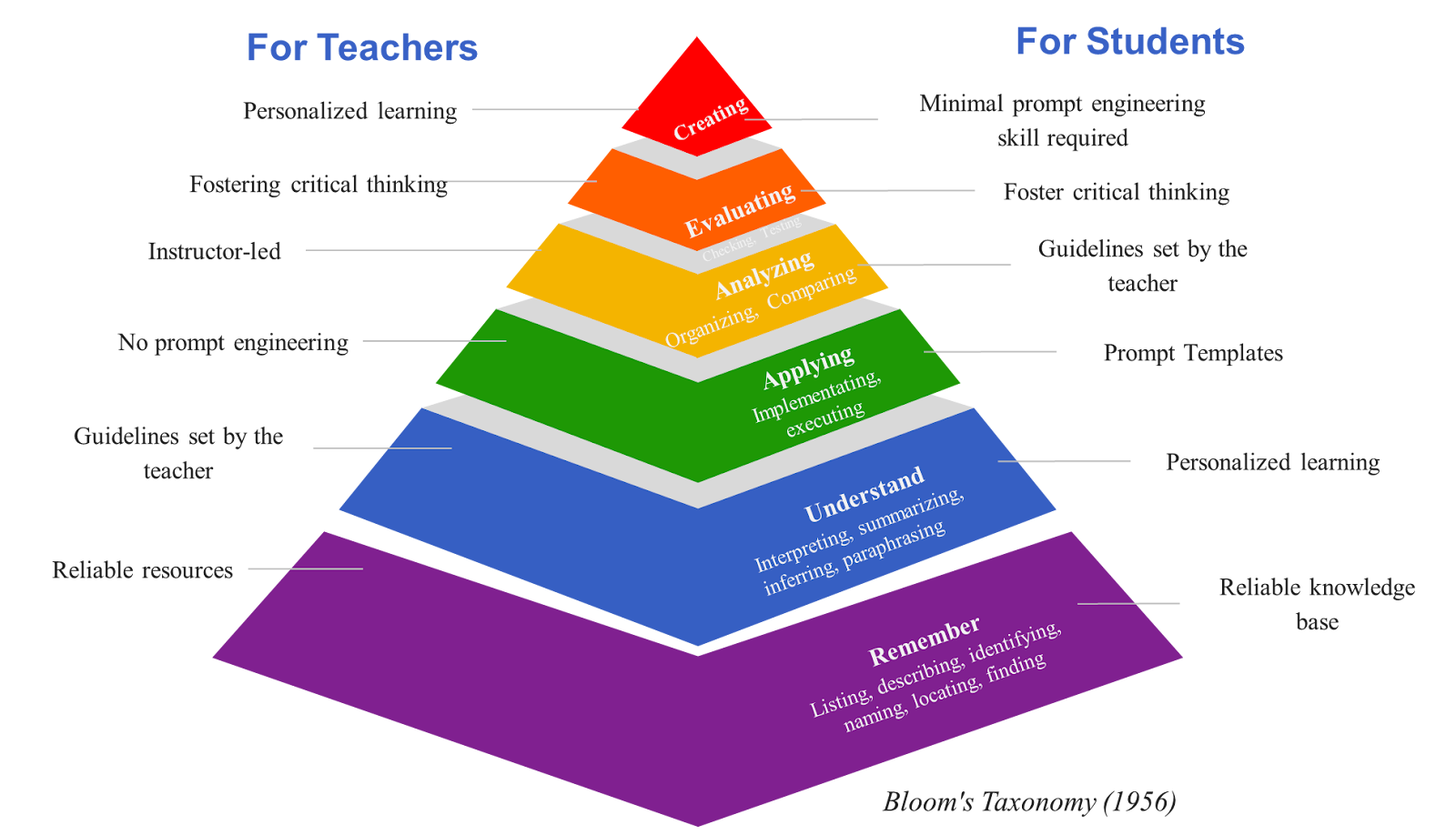

The design of ThinkPal.AI is grounded in Bloom’s Taxonomy (Bloom, 1956) of critical thinking, ensuring that its development aligns with pedagogical practices. Given this foundation, critical thinking can be defined as a process of analyzing information deeply, evaluating evidence, and creating well-founded conclusions or solutions – essentially progressing toward higher-order understanding (Dwyer et al., 2014).

Current adaptations of Bloom’s Taxonomy for Generative AI integration in learning primarily focus on categorizing tasks (Oregon State University Ecampus, 2024). However, they often lack differentiation between the distinct roles of teachers and students within the same framework, missing an opportunity to explore how AI supports their unique contributions to the learning process. To structure our approach to critical thinking, we have drawn on Bloom’s Taxonomy (Bloom, 1956) and extended it to illustrate the distinct but interconnected roles of teachers, students, and Generative AI. This framework categorizes cognitive learning objectives into six levels: remembering, understanding, applying, analyzing, evaluating, and creating. Within this structure, students engage with AI in ways that enhance their cognitive development, while teachers utilize AI as a tool for guiding and shaping learning experiences.

Figure 1

Application of Bloom’s taxonomy (1956) for Generative AI-based learning platform

Note: This figure illustrates the adaptation of Bloom’s Taxonomy for AI integration, highlighting the interconnected roles of teachers, students, and AI in ThinkPal. (Created by ThinkPal.AI team, 2024)

We used this taxonomy as a guide for designing our AI prompts and interactions, ensuring that ThinkPal.AI actively supports both student learning and teacher facilitation. Rather than merely providing answers, our platform is designed to encourage students to analyze concepts, evaluate their understanding, and create arguments or analogies, matching AI interactions with higher-order thinking skills. For instance, at the ‘Understanding’ level, students receive reliable sources curated by AI, enabling them to interpret and summarize information effectively. At the same time, teachers guide AI by selecting resources that directly support learning objectives. This interplay between teacher guidance, student engagement, and AI interaction is present across all cognitive levels. Thus, by clearly defining AI’s role within Bloom’s Taxonomy, we establish a structured approach that enhances learning interactions without replacing human decision-making.

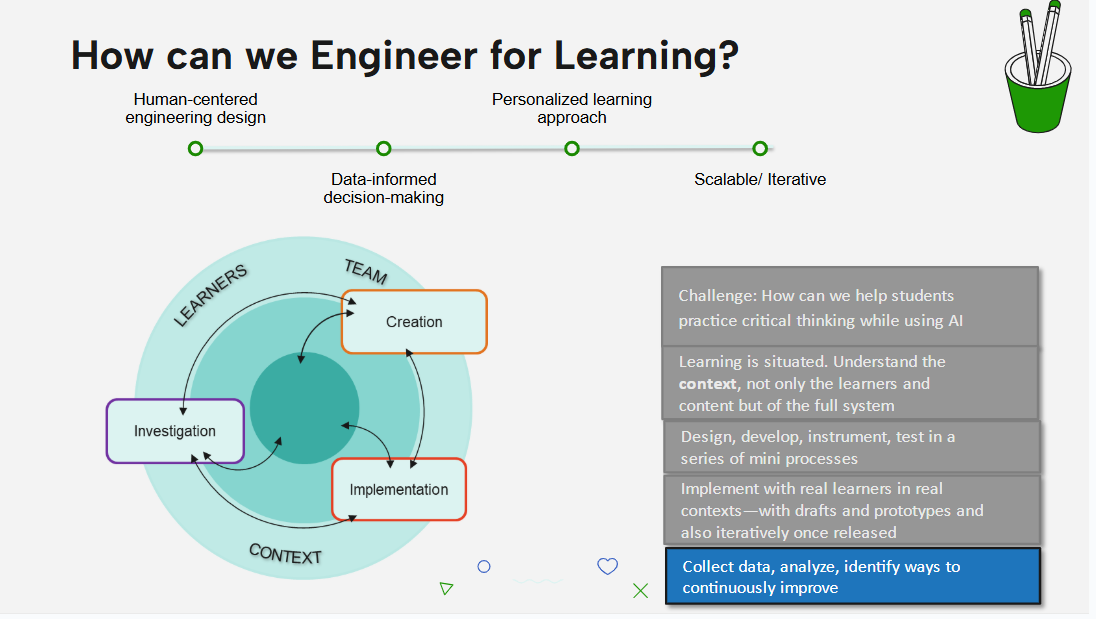

Our development approach is based on the investigation, creation, and implementation process outlined in the learning engineering framework. Two of the main principles we applied in this process are human-centered design and personalized learning, as elaborated below. The diagram (see Figure 2) illustrates the interconnected phases that guided our design. Beyond the initial development, this framework will inform future research, data collection, and iterative improvements.

Figure 2

Adaptation of the learning engineering process framework

Note: Adapted from “The Learning Engineering is a Process,” by Kessler et al. (2023) in J. Goodell & J. Kolodner (Eds.), Learning Engineering Toolkit (p.31). The adapted version reflects our integration of human-centered engineering design and personalized learning approaches for AI-supported learning. (Created by ThinkPal.AI team, 2024.)

One of our most critical decisions was adopting a human-in-the-loop design that gives teachers an active role as guides in student-AI interaction, rather than letting AI function as an autonomous learning system. This guidance is essential for fostering critical thinking skills because teachers can provide context, set appropriate boundaries, and steer students towards constructive use of AI. Without guidance or a reliable structure, students might use Generative AI in ways that hamper their critical thinking. For instance, they might accept AI outputs uncritically or use AI as a shortcut to answers. Prior research emphasizes that to promote critical thinking with AI tools, educators should be enablers who bring in reliable resources, set guidelines, and lead the process (Guo & Lee, 2023). In ThinkPal.AI, we embed this human-in-the-loop philosophy: teachers control how the AI is used (such as configuring what AI features students can access) and oversee the AI-student interactions. This ensures that the integration of AI complements the curriculum and pedagogical goals rather than working against them.

Personalized learning is another core concept in our platform, focusing on tailoring the educational experience to each student’s context, skills, and interests. It ensures that content and support align with learners’ unique abilities, prior knowledge, and preferences, meeting them where they are in their learning journey (Shemshack & Spector, 2020). In ThinkPal.AI, this principle guided features that allow content and AI responses to adjust to information about the student. For example, the platform encourages students to input their interests and background in their user profiles or within prompts. By leveraging this information, the AI can provide explanations and examples that relate new concepts to a student’s interests. This approach also promotes critical thinking, as students must integrate new knowledge with their existing experiences, evaluating and analyzing information in a way that makes sense to them personally.

With these guiding principles, our development unfolded in three stages: preliminary research and problem identification, problem framing and solution ideation, and prototype development and presentation.

In stage one, the main challenge was to reframe a problem. Initially, our team undertook preliminary research asynchronously, each member working independently to gather insights on pressing problems in education. This process was facilitated by a prep workbook provided by the organizers. The main focus was the following:

Frame a problem

Reframe the problem: Research, collaborate and reflect on the problem

The workbook included the following thinking tools:

Reframe using concepts in decision-making (McCombs School of Business, 2019)

Use of systems thinking in principled innovation to understand the whole rather than separate pieces (Synergos, 2019)

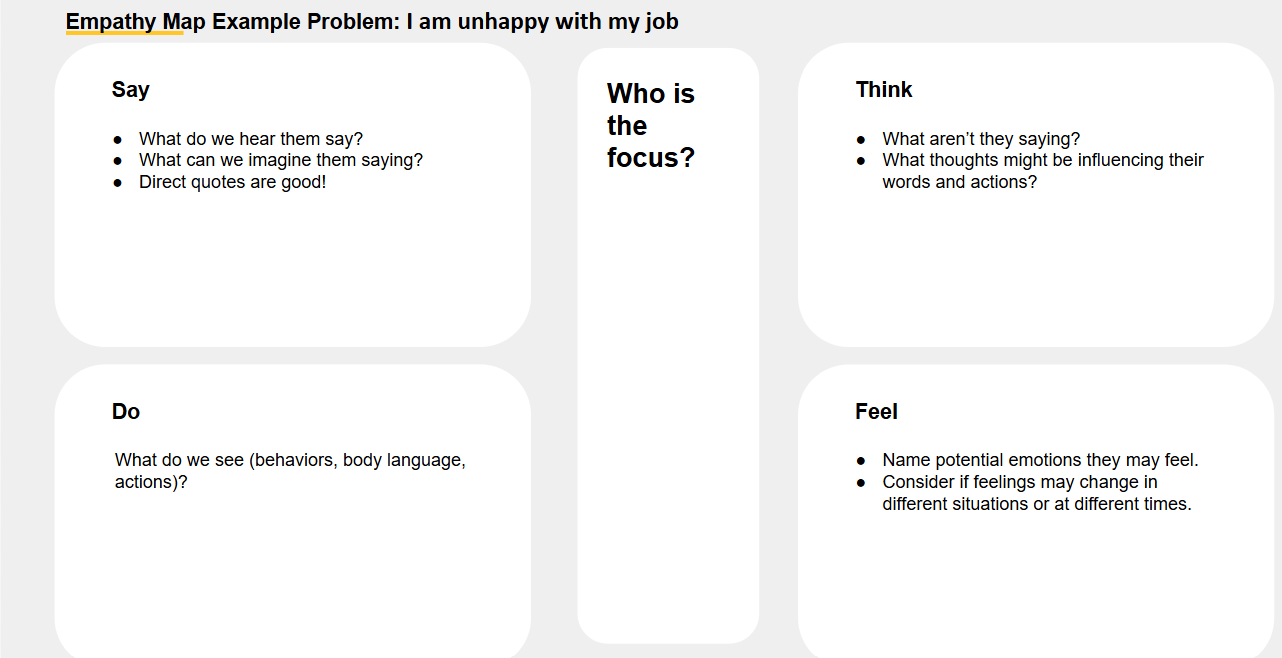

Empathy map (See Figure 3)

The main prompt used for problem identification:

Ask yourself why this problem exists. What are the issues related to this topic that truly matter to you? Begin researching the topic and identifying the top reasons contributing to the problem's existence or three different ways to look at the problem. Research can take different forms, ranging from reviewing articles and papers to conducting surveys and interviews. We understand that this initial research may not be deep, but it can provide you with additional insights into the challenge. After identifying and recording three top challenges, drag this checkmark to select which challenge you would like to move forward with.

After this preparatory research based on exploring literature and classroom experiences, we organized the list of ideas into broad categories as common themes emerged. The challenge identified in our workbook is as follows: a disconnect between educators and students in using generative AI for learning. This challenge provided a foundation for the next stage, where we analyzed specific issues to construct a precise problem statement.

Stage two involved a full-day, in-person workshop focused on problem definition and solution ideation within the hackathon. The primary objective was to systematically define the problem from the perspectives of stakeholders and generate solutions. Our team consisted of graduate and undergraduate students with various backgrounds and experiences: Rezwana, a PhD student in educational technology and a former teacher; Nicole, a PhD student in Computational History and Philosophy of Science; Ritika, a former Product Manager and, at the time, a master's student in Information Systems Management; and Namita Shah and Rufat Babyev, bachelor's students in Computer Science. This diversity was a strength, as it brought multiple viewpoints to understanding the problem and supported a multidisciplinary approach to solving it.

The first step was to identify stakeholders to frame the problem in education. We separated the roles into two user personas, the primary user being students and the secondary user being educators.

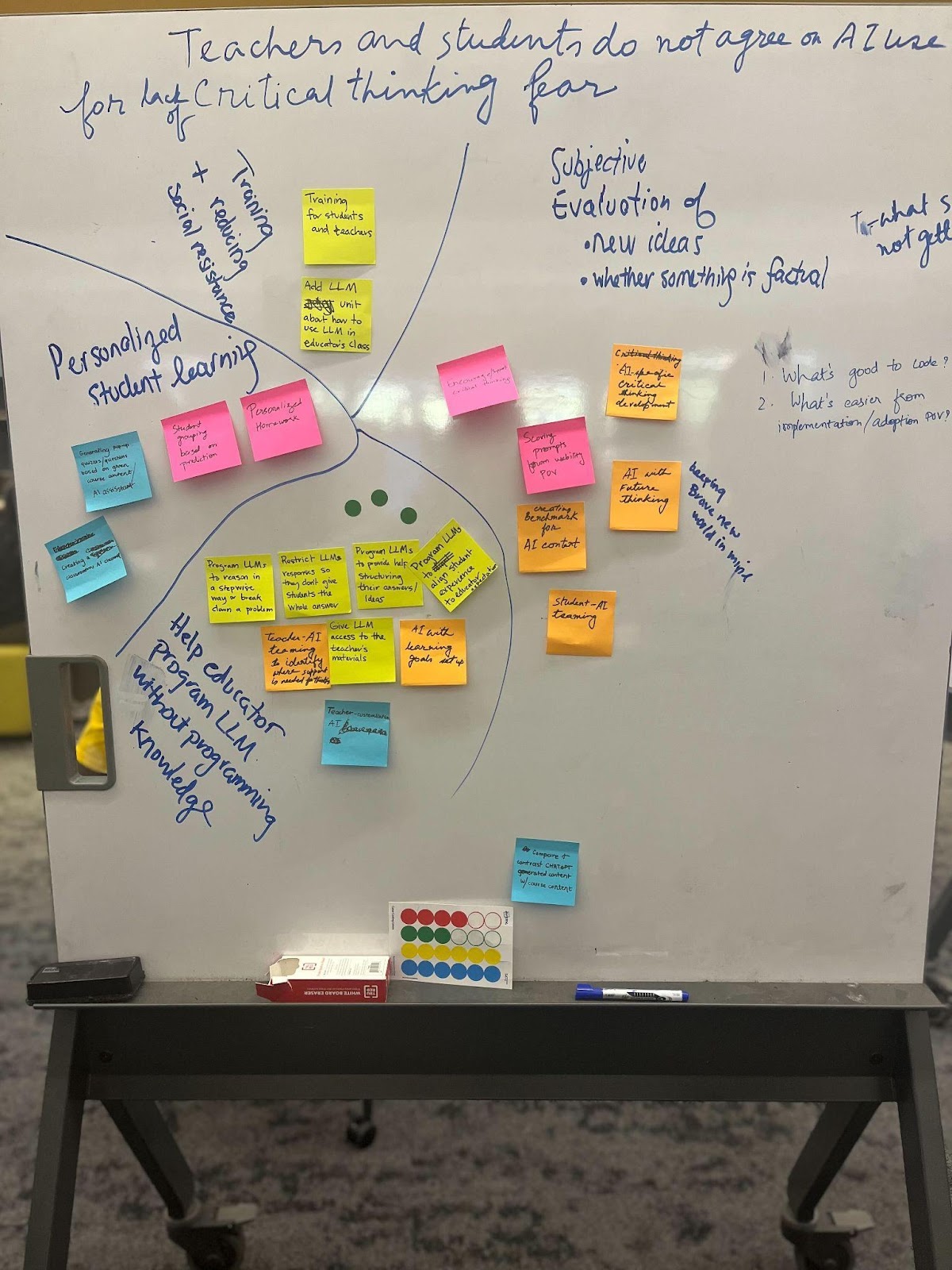

Figure 3

Empathy Mapping and Solution Ideation

Note. The left image represents an empathy mapping tool provided for brainstorming, used to capture perspectives on AI integration in education. The right image illustrates our team’s synthesized insights and proposed solutions, highlighting key challenges such as critical thinking, personalized learning, and subjective evaluation. (Empathy map from the AI for education hackathon workbook, solution brainstorming by ThinkPal.AI team, 2024.)

Empathy mapping (see Figure 3). In this step, we asked questions such as: What would teachers say, do, think, and feel about students using AI in class? What do students think and feel about using AI with or without teacher help? For example, we considered that students might feel excited about getting help from AI but uncertain about how to use it well or whether it is even permissible. In contrast, teachers might feel concerned about, or not in control of, students’ unchecked use of AI.

Principled innovation. We also applied principled innovation to identify root causes rather than just symptoms. Two prompts guided our discussion:

Why is it important to solve for root causes rather than symptoms? How does stepping back provide a broader perspective?

How did discussing top challenges relate to truth-seeking, courage, collaboration, inclusivity, and honesty?

We documented challenges on sticky notes, mapped key issues, and selected one, ensuring a collaborative and reflective problem-framing process.

After gaining these insights, we reframed the problem statement and agreed on the following:

Current Generative AI practices do not aid students’ critical thinking development, which is exacerbated by a lack of intervention by teachers in the process.

This reframed statement highlighted both sides of the gap (student usage and teacher guidance) and set the stage for imagining solutions.

Defining the solution. The final step involved listing all potential solutions to the problem statement and selecting one or two for the minimum viable product (MVP) development (see Figure 3). Again, we categorized solutions under broad themes: personalization of learning, guided AI usage, teacher training in AI, improving AI prompt quality, and curating AI knowledge sources. We selected two themes to build the MVP: personalized student learning and helping educators program LLMs without programming knowledge.

Stage 3 involved rapid prototyping and initial validation of our solution. Following the workshop, our team had three weeks to build a working prototype of ThinkPal.AI and prepare a presentation for the hackathon’s virtual pitch competition. This stage was equivalent to the “implementation” step of the learning engineering cycle, which turned our planned solution into a tangible product that could be tested and iterated.

The prototype was implemented as a web-based application using a Jupyter Notebook environment, which provided a simple way to combine a backend (Python code and AI model integration) with a minimal user interface. We integrated OpenAI’s ChatGPT API (OpenAI, 2023) to serve as the generative AI engine behind the platform’s chatbot.

As one of the top five selected teams, we presented ThinkPal.AI MVP in the hackathon’s virtual pitch session. The successful completion of the prototype and positive feedback from the pitch validated our approach and provided direction for further development. While this was an initial implementation, it set the stage for extending the implementation from the design prototype to piloting the product.

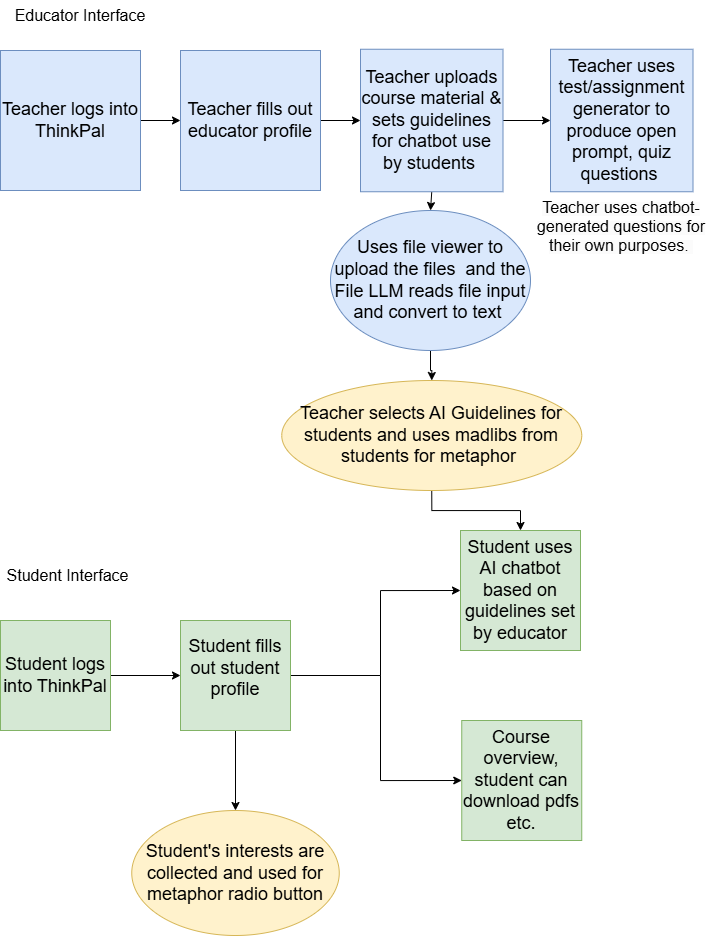

Figure 4

Workflow of ThinkPal.AI

Note. This flowchart illustrates the workflow of ThinkPal.AI, detailing the interactions between educators, students, and the AI system. The Educator Interface section shows how teachers upload course materials, set guidelines, and generate prompts. The Student Interface section outlines how students engage with the AI chatbot based on predefined guidelines. (Created by ThinkPal.AI team, 2024.)

This platform has two interfaces connected to facilitate student-teacher interaction:

The student interface contains a student profile and a chat interface. The profile lets students enter their grade level and personal interests, which the system uses to tailor responses. The chat interface comes with built-in prompt templates, structured as personalized question forms, along with an open prompt textbox.

The educator interface is a dashboard where educators can set guidelines and restrictions: teachers can select or deselect which prompt templates, as well as the open-prompt chatbot, are available to students. Educators can also upload documents to build a curated knowledge base, so that the AI chatbot’s responses draw on teacher-approved materials, which improves the chatbot’s reliability.

Our chat interface provides built-in prompt forms to ease the challenge users face when composing prompts. Much like the Mad Libs word game, our prompt interface has multiple prompt forms, each composed of a sentence interspersed with one or more textboxes where users can “fill in the blank.” When the user hits submit, the full sentence appears in the chat history with the chatbot. Behind the scenes, the information input by the user is used to generate a well-formed prompt. Along with the option for open prompting, we provide the following built-in prompts to students:

Table 1

ThinkPal Inputs

| Type of prompt | Built-in Prompt details |
|---|---|
| ChatBot Identity | You are playing the role of an expert and educator in {course subject}. |
| User Info | I am a {grade level} student. My interests are {interests}. |
| Prompt | I’m having trouble understanding {concept}. Please explain it as a metaphor. |
| Metaphor | I’m having trouble understanding {concept}. Please explain it as a metaphor. |
| Step-by-Step | I’m having trouble understanding {concept}. Please help me break it down into steps. |
| Debate Partner | Please focus on challenging my assumptions and encouraging me to think deeply about the reasons behind my beliefs. |

Note. This table outlines the predefined chatbot input prompts available in ThinkPal.AI to facilitate structured student-AI interactions. (Created by ThinkPal.AI team, 2024.)
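The fill-in-the-blank behaviour of these prompt forms can be sketched in a few lines of Python. This is a minimal illustration, not the platform's actual code: the template wording is taken from Table 1, while the dictionary keys, the underscore-style blank names, the `fill_prompt` function, and the use of `str.format` are all assumptions made for the example.

```python
# Hypothetical sketch: template text comes from Table 1; every name here
# (PROMPT_TEMPLATES, fill_prompt, the dictionary keys) is illustrative.
PROMPT_TEMPLATES = {
    "chatbot_identity": "You are playing the role of an expert and educator in {course_subject}.",
    "user_info": "I am a {grade_level} student. My interests are {interests}.",
    "metaphor": "I’m having trouble understanding {concept}. Please explain it as a metaphor.",
    "step_by_step": "I’m having trouble understanding {concept}. Please help me break it down into steps.",
    "debate_partner": (
        "Please focus on challenging my assumptions and encouraging me "
        "to think deeply about the reasons behind my beliefs."
    ),
}


def fill_prompt(template_name: str, **blanks: str) -> str:
    """Fill the blanks of a built-in prompt form and return the full sentence."""
    template = PROMPT_TEMPLATES[template_name]
    try:
        return template.format(**blanks)
    except KeyError as missing:
        # A blank in the form was left empty by the caller.
        raise ValueError(f"Missing value for blank {missing}") from None


# Example: the student only types the concept; the form supplies the rest.
print(fill_prompt("step_by_step", concept="photosynthesis"))
```

Keeping the templates in one mapping means a new prompt form could be added without touching the filling logic, which is one plausible way to keep the forms easy for non-programmers to extend.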

The guidelines feature allows the educator to select the features they want to be available to their students at any given time. Educators may turn off student access to the chatbot entirely or use checkboxes to select or deselect various built-in prompts they want to be available to their students. Making a certain set of built-in prompts available to the students at certain periods during the course can help the educator encourage students to get familiar with a particular kind of interaction with the chatbot.

Figure 5

ThinkPal.AI teacher interface for guidelines

Note. This image displays the guideline-setting section of the ThinkPal.AI teacher interface, highlighting AI guideline selection options. Educators can enable or restrict specific prompts, allowing for customization of student interactions with the chatbot. (Created by ThinkPal.AI team, 2024.)
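One way to picture the guidelines feature is as a small settings object that the student interface consults before showing any options. The sketch below is an assumption-laden illustration rather than the team's implementation: the `Guidelines` class, its field names, and `available_options` are hypothetical, and only the behaviour (turning the chatbot off entirely, or selecting individual built-in prompts and the open prompt) follows the description above.

```python
from dataclasses import dataclass, field

# Hypothetical identifiers for the built-in prompts listed in Table 1.
BUILT_IN_PROMPTS = ("metaphor", "step_by_step", "debate_partner")


@dataclass
class Guidelines:
    """Illustrative stand-in for the checkboxes on the educator dashboard."""
    chatbot_enabled: bool = True
    open_prompt_enabled: bool = True
    enabled_prompts: set = field(default_factory=lambda: set(BUILT_IN_PROMPTS))


def available_options(guidelines: Guidelines) -> list:
    """Return the prompt options a student would see under these guidelines."""
    if not guidelines.chatbot_enabled:
        return []  # the educator has turned off chatbot access entirely
    options = [p for p in BUILT_IN_PROMPTS if p in guidelines.enabled_prompts]
    if guidelines.open_prompt_enabled:
        options.append("open_prompt")
    return options


# Example: early in a course, only the metaphor form is offered.
week_one = Guidelines(open_prompt_enabled=False, enabled_prompts={"metaphor"})
print(available_options(week_one))
```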

We integrated a knowledge base built from an LLM that pulls content exclusively from teacher-approved materials such as PDFs, textbooks, lesson plans, and other documents that the instructor has uploaded. This process filters out unreliable information that may otherwise be pulled from open internet sources.
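The paper does not describe how the knowledge base selects passages, so the following Python sketch substitutes a deliberately simple keyword-overlap lookup to illustrate the idea of answering only from uploaded material. The function names, the scoring rule, the sample documents, and the instruction wording are all assumptions; a production system would more likely use embeddings or a dedicated retrieval library.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, approved_docs: dict, top_k: int = 2) -> list:
    """Return names of up to top_k approved documents sharing words with the question."""
    question_words = tokenize(question)
    scored = []
    for name, text in approved_docs.items():
        overlap = len(question_words & tokenize(text))
        if overlap > 0:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


def build_grounded_prompt(question: str, approved_docs: dict):
    """Wrap the question with approved excerpts, or return None if nothing matches."""
    names = retrieve(question, approved_docs)
    if not names:
        return None  # nothing teacher-approved applies; the caller can decline to answer
    context = "\n\n".join(f"[{name}]\n{approved_docs[name]}" for name in names)
    return (
        "Answer using only the teacher-approved material below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


# Made-up documents standing in for instructor uploads.
docs = {
    "bio.pdf": "Coevolution occurs when two species influence each other's evolution.",
    "history.pdf": "The French Revolution began in 1789.",
}
print(build_grounded_prompt("What is coevolution?", docs))
```

Returning `None` when no approved document matches makes the "teacher-approved only" constraint explicit instead of silently falling back to the model's general knowledge.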

The chatbot tailors its responses to each user based on their interests so that the interaction feels personally relevant. For example, suppose a student has indicated an interest in ballet and is having trouble understanding the concept of coevolution. Using the ‘Metaphor’ built-in prompt, they only have to type a single word, ‘coevolution’, to receive a guided response that draws on their interest in ballet by explaining the phenomenon as a dance between two people.

Figure 6

ThinkPal.AI’s Response to the “Metaphor” Prompt on the Student Interface

Note. This image shows ThinkPal.AI’s response when a student selects the "Metaphor" prompt and indicates an interest in ballet. (Created by ThinkPal.AI team, 2024.)
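To make the ballet example concrete, the sketch below shows how the ChatBot Identity, User Info, and Metaphor texts from Table 1 might be combined into the role-tagged message list used by chat-style LLM APIs. Mapping the identity text to a `system` message and the rest to a `user` message is an assumption, as are the function name and the sample subject and grade level; no API call is made here.

```python
def build_messages(course_subject: str, grade_level: str,
                   interests: str, concept: str) -> list:
    """Combine profile data and a one-word concept into chat messages."""
    system_text = (
        f"You are playing the role of an expert and educator in {course_subject}."
    )
    user_text = (
        f"I am a {grade_level} student. My interests are {interests}. "
        f"I’m having trouble understanding {concept}. "
        "Please explain it as a metaphor."
    )
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_text},
    ]


# The student types only "coevolution"; the subject and grade level shown
# here are hypothetical profile values chosen for illustration.
messages = build_messages("biology", "10th grade", "ballet", "coevolution")
for message in messages:
    print(message["role"], "->", message["content"])
```

Because the interests come from the stored profile, the student's single word is enough to produce a prompt that asks for a ballet-flavoured explanation.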

The learning engineering framework cycle (investigation, creation, and implementation) has not only guided our product’s idea generation and development (referred to as solution implementation), but it will also shape how we refine and apply the prototype in real-world settings through data collection and evaluation in classroom environments. The goal is to (1) follow an iterative approach to product development and (2) ensure data-informed decision-making.

The pilot study will focus on ThinkPal.AI’s core functionality, covering technical validation, response accuracy, and user experience, before scaling it for long-term studies. It is estimated to be completed within two to four weeks across five classes. Each class will involve 15-20 students and a teacher to simulate a typical classroom setting and provide sufficient usability data. With the pilot, we aim to address the following question:

Does ThinkPal.AI run without technical issues, generate accurate responses, and allow teachers and students to complete the assigned task?

A teacher-controlled setting will be chosen to maintain the same structured AI support for all students. The AI response mode will be pre-selected to maintain consistency and focus on student engagement with AI-generated responses without teacher-introduced variability. For AI response mode, the step-by-step explanation response will be selected to ensure a structured evaluation of ThinkPal.AI’s usability and verify that AI-generated responses follow a logical sequence. This mode aligns with lower-order cognitive skills in Bloom’s Taxonomy (Bloom, 1956). For task type, a general academic skill applicable across disciplines, such as summary writing, will be chosen as it focuses on usability rather than subject knowledge alone. The pilot will evaluate how AI-generated structured breakdowns help students complete the task. For example, students will use ThinkPal.AI to generate a step-by-step guide for summarizing an article and then follow the AI instructions to create a summary of the assigned reading.

The measures will include:

Technical Performance: Assessing bugs/crashes and ease of navigation

Accuracy & Quality: Evaluating AI response accuracy, e.g., whether it misses a step in step-by-step explanations, hallucinates, or gives helpful responses

Usability & Interaction: Measuring task completion using AI and engagement with responses

The instruments will include:

System logs: These will track student progress in task completion, time spent, and step-by-step answer delivery by the bot.

Survey questionnaire: Teachers will be asked about the accuracy of AI responses and alignment with content. Students will share experiences on ease of use, quality of explanations, and task completion. Student ratings will be cross-checked with teacher evaluations of AI responses.
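As a rough illustration of how system logs could feed the usability measure, the Python sketch below computes a task completion rate and the mean time spent by students who finished. The record fields, the function name, and the sample values are invented for the example and are not pilot data; the actual log schema has not been specified.

```python
from statistics import mean


def summarize_logs(records: list) -> dict:
    """Summarize task completion and time spent from per-student log records."""
    total = len(records)
    if total == 0:
        return {"completion_rate": 0.0, "mean_minutes": None}
    completed = [r for r in records if r["completed"]]
    return {
        "completion_rate": len(completed) / total,
        # Mean time is reported only over students who finished the task.
        "mean_minutes": mean(r["minutes"] for r in completed) if completed else None,
    }


# Made-up records for illustration only; these are not study results.
sample_records = [
    {"student": "s1", "completed": True, "minutes": 12.0},
    {"student": "s2", "completed": False, "minutes": 20.0},
    {"student": "s3", "completed": True, "minutes": 8.0},
    {"student": "s4", "completed": True, "minutes": 10.0},
]
print(summarize_logs(sample_records))
```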

Data from the pilot study will help refine ThinkPal.AI based on user experience and inform its scalability through iterative design. Next, we will explore its long-term impact, analyzing shifts in student behavior (e.g., using ChatGPT beyond direct answers) and teacher AI use (e.g., instructional strategies and usage rules). We will also assess how AI customization supports critical thinking, for example whether metaphors are the most effective approach or whether alternative methods yield better results. Additionally, we will evaluate whether ThinkPal.AI functions best as a standalone tool or within a learning management system.

Our product's design and application face challenges with respect to technical implementation, commercial deployment, and pedagogical adoption. From a technical standpoint, implementation remains a challenge, as the system can be hosted locally but has yet to be deployed on a cloud platform, which is essential for scalability and broader accessibility. Developers must be mindful of how the platform will be hosted and how the API keys will be integrated within the chatbot, while also ensuring that the AI tool continues to be maintained. Our team has explored compatible open-source platforms like Binder to host the app as a standalone application on the web. However, if Binder is not maintained, it could pose deployment issues. Another challenge is the financial aspect of maintaining the project. While we used open-source tools to build and deploy the platform, the chatbot relies on the OpenAI API, which requires purchasing API tokens. Though this cost is currently marginal, funding would be required to scale and add new functionality. This is important to ensure that the financial onus does not lie with the students and educators.

The integration of Generative AI in education presents both opportunities and challenges. While students are eager to adopt AI tools for learning, educators remain cautious about their potential to hinder critical thinking. ThinkPal.AI was developed to navigate this tension by emphasizing meaningful teacher-student-AI interactions, ensuring that AI enhances engagement and reasoning rather than replacing human judgment. The development process we employed at the ASU AI for Education Hackathon underscored the importance of human-in-the-loop and personalized learning principles, where educators guide AI interactions for critical thinking. By incorporating learning engineering principles, our platform integrates AI capabilities with pedagogical goals for structured student-teacher interactions.

Looking ahead, work on ThinkPal.AI will focus on refining its capabilities through further pilot studies, adding features, and scaling to different educational contexts. Beyond ThinkPal.AI, the broader landscape of AI-driven educational tools must explore how these technologies can be designed to support critical thinking development. The structured methodology we have demonstrated in the development of ThinkPal.AI can be leveraged by ed-tech development and learning engineering teams to create other such AI-based solutions.

This research contributes to the evolving discourse on Generative AI integration in education, providing a foundation for further product development by integrating learning engineering principles that support critical thinking and scalable implementation.

We would like to thank the Principled Innovation Academy Team at Arizona State University for organizing the AI for Education Hackathon, which served as the catalyst for the development of our learning platform, and for providing us with a temporary OpenAI (2023) license.