EdTech Archives

International Consortium for Innovation and Collaboration in Learning Engineering (ICICLE) 2024 Conference Proceedings: Solving for Complexity at Scale

Arizona State University (ASU) collaborated with the U.S. Army Soldier Center Simulation and Training Technology Center (STTC) to address the pervasive challenge of monitoring and training team dynamics. From the U.S. Army’s view, a key goal of addressing this challenge is to create training that serves as an example of their Synthetic Training Environment (STE) initiative within a broadly used application domain. While live simulation training can be effective, it is often expensive, time-consuming, and dangerous (Levy et al., 2013). Simulations and virtual systems have a long history of providing effective training (Swezey & Andrews, 2001) with decreased danger of injury during training (Andrews & Craig, 2015). STE training has been shown to be effective for team training (Siegle et al., 2021); however, it is recommended that this type of environment be used in domains with stable content that does not require redevelopment, which could rapidly increase expense (Siegle et al., 2020). The project’s goal was to develop an STE training experience (i.e., immersive virtual reality) for Tactical Combat Casualty Care training (Craig et al., 2024). The system monitors team dynamics and helps improve team members’ ability to recover from, and reorganize after, unexpected yet significant disturbances or system failures involved in combat lifesaving missions (Avancha et al., 2024). The Tactical Combat Casualty Care (TC3; Butler, n.d.) area was selected based on conversations with U.S. Army stakeholders and follow-up interviews with subject matter experts in the TC3 area. Effective teamwork, as noted by Marlow and colleagues, is a key factor in improving patient outcomes and minimizing medical errors, particularly in high-stress, mass-casualty situations (Marlow et al., 2018). Furthermore, a comprehensive curriculum, which incorporates decision-making under high-pressure conditions, is essential for providing tactical medical support in fast-paced combat scenarios (Milham et al., 2017).

The current paper describes the development of a team dynamics measurement framework, one smaller aspect of our larger system, to provide an example of the application of the nested learning engineering process. Our team consisted of learning scientists, human factors specialists, a project manager, software engineers, virtual environment developers, and military researchers with subject matter expertise on TC3, combat lifesaving, and military training.

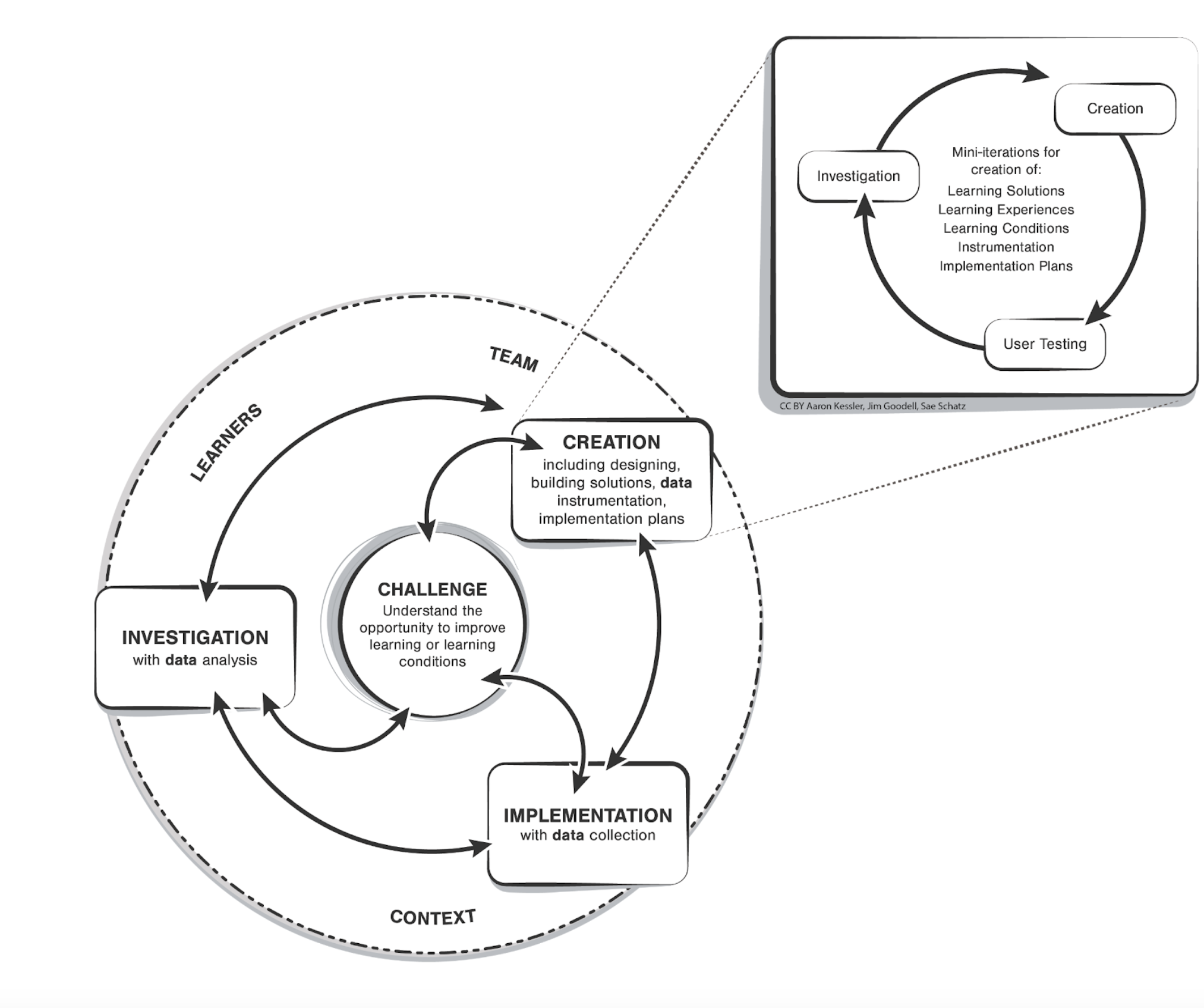

The development of our system adopted a broader Learning Engineering Process (Goodell & Kolodner, 2023; Kessler et al., 2023; Craig et al., 2023), which consists of four phases (see Figure 1): Challenge (understanding the problem in context), Creation (constructing an inclusive solution that meets the needs of end users and addresses the problem), Implementation (observing the solution in progress with data collected on impact), and Investigation (analyzing data to understand the impact of the solution on the challenge and the various stakeholders). However, any large-scale project will require smaller cycles of the process. We refer to these inner cycles as nested cycles for ease of description (Totino & Kessler, 2024). These mainly occur during the challenge phase, where multiple cycles are needed to understand the challenge before moving forward, and in the creation phase, where multiple pieces of the solution need to be created. Nested cycles could also occur during the investigation phase to refine the analysis and better inform the next iteration. It would be less common for nested cycles to occur during implementation, because the enactment of the solution needs to be consistent so that the data collected can be applied to the interaction between the implemented solution and the challenge (Czerwinski et al., 2022).

As highlighted in the opening of this paper, our initial challenge phase of the project consisted of multiple nested cycles. We had a series of conversations (informal unstructured interviews) with our military partners' representatives to understand their training needs, their needs around how the training would be built, and the targeted end users. Further conversations were held with subject matter experts to improve understanding of the content domain and the end users for the training. From these conversations, our educational scientists reviewed the initial needs and provided best practices for virtual training.

Figure 1

Learning Engineering process and nested iteration for creation phase
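The nesting described above can be pictured as a small tree in which each cycle holds the four phases and any phase may contain further cycles. The following is a minimal illustrative sketch, not part of the project's software: the `Phase` and `Cycle` names, the `depth` helper, and the cycle labels are all hypothetical and chosen only to mirror the text.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    """The four phases of the Learning Engineering Process named in the text."""
    CHALLENGE = "Challenge"
    CREATION = "Creation"
    IMPLEMENTATION = "Implementation"
    INVESTIGATION = "Investigation"


@dataclass
class Cycle:
    """One pass through the four phases; any phase may hold nested cycles."""
    name: str
    # Hypothetical representation: one list of nested cycles per phase.
    nested: dict = field(default_factory=lambda: {p: [] for p in Phase})

    def add_nested(self, phase: Phase, cycle: "Cycle") -> "Cycle":
        """Attach a smaller cycle inside one phase of this cycle."""
        self.nested[phase].append(cycle)
        return cycle

    def depth(self) -> int:
        """Nesting depth: 1 for a cycle with no nested cycles."""
        children = [c for cycles in self.nested.values() for c in cycles]
        return 1 + max((c.depth() for c in children), default=0)


# Illustrative labels only, loosely following the cycles described in the paper.
project = Cycle("TC3 team training system")
project.add_nested(Phase.CHALLENGE, Cycle("Conversations with military partners"))
project.add_nested(Phase.CHALLENGE, Cycle("Conversations with subject matter experts"))
project.add_nested(Phase.CREATION, Cycle("Identification of TC3 learning content"))
```

In this sketch the implementation phase is left without nested cycles, which matches the observation that nesting is less common there.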

The remainder of this paper provides a detailed case study of a nested cycle within the creation phase. This nested cycle is an example of the nested Learning Engineering Process Model, which has micro loops of the learning engineering process within one of the four phases of the larger process (Kessler & Totino, 2023). These micro loops allow for development and testing of individual parts of the solution (Totino & Kessler, 2024). This cycle focuses on the identification of the learning content on TC3. The primary method used in this cycle was a Hybrid Cognitive Task Analysis (hCTA; Nehme et al., 2006). The hCTA is a specialized form of Cognitive Task Analysis (Clark et al., 2008) used to generate information and functional requirements from a scenario description and an enumeration of high-level mission goals. It has four steps: 1) generating a scenario task overview, 2) generating an event flow diagram, 3) creating decision ladders for decision points, and 4) generating situational awareness requirements (Nehme et al., 2006; Macbeth et al., 2012). This process requires reviews of written materials, interviews with subject matter experts (SMEs), creation of training material scenarios, and validation of final materials with SMEs.
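The four hCTA steps listed above can be tracked as an ordered checklist. The sketch below is a hypothetical bookkeeping aid, not a tool used in the project; it assumes the steps are completed strictly in the listed order, which is a simplification, and the `HctaTracker` class and the artifact label are invented for illustration.

```python
from dataclasses import dataclass, field

# The four hCTA steps, in the order given in the text.
HCTA_STEPS = (
    "Generate scenario task overview",
    "Generate event flow diagram",
    "Create decision ladders for decision points",
    "Generate situational awareness requirements",
)


@dataclass
class HctaTracker:
    """Records which hCTA steps are done and the artifact each one produced."""
    completed: list = field(default_factory=list)

    def next_step(self):
        """Return the next pending step, or None once all four are done."""
        if len(self.completed) < len(HCTA_STEPS):
            return HCTA_STEPS[len(self.completed)]
        return None

    def complete(self, step: str, artifact: str) -> None:
        """Mark a step done; assumes (as a simplification) strict ordering."""
        if step != self.next_step():
            raise ValueError(f"Expected step {self.next_step()!r}, got {step!r}")
        self.completed.append((step, artifact))


tracker = HctaTracker()
# The artifact label here is a placeholder, not a real project document.
tracker.complete("Generate scenario task overview", "task overview draft")
```

After this call, `tracker.next_step()` points to the event flow diagram step, and attempting to record a later step out of order raises `ValueError`.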

Our initial challenge during the creation phase was to design a scenario that reflects Tactical Combat Casualty Care team training within an STE (Goldberg et al., 2021). The goal was to emphasize team dynamics, collaboration, and performance under stress, all while replicating the technical aspects of Tactical Combat Casualty Care (Avancha et al., 2024). We began with a deep dive into the available team training literature for TC3 (Townsend et al., 2016; Madrid et al., 2020; Milham et al., 2017; Ross et al., 2016; Johnston, 2019; Gerhardt, 2012). The literature review highlighted the role of immersive, simulation-based training in strengthening TC3 training through collaborative team interactions and enhanced overall performance.

Following our literature review, we engaged with the existing computer-based TC3 training to gain an understanding of the training resources currently available. We then applied the Hybrid Cognitive Task Analysis methodology, a structured approach that helped us break down the complex tasks into the actionable steps required for team-based performance. This process involved close examination of each role within a TC3 team, identifying the specific skills, competencies, and communication patterns necessary for the team to function effectively. To ensure the scenario we developed captured the realities of TC3 operations, we conducted a series of semi-structured interviews with SMEs, including experienced military trainers, squad leaders, STTC training designers, and learning engineers. Interviews were conducted virtually through Microsoft Teams, where the SMEs guided us through the different TC3 roles, operations, areas within the Casualty Collection Point (CCP), and the processes involved in casualty triage and support within the CCP. These interviews provided valuable insights into CCP operations, team structures, and the current training available to Combat Life Savers. This step was crucial to ensure that the training being built reflects realistic interactions and task requirements. Through this, we identified gaps in existing training, which informed the design of our scenario and the necessary team competencies.

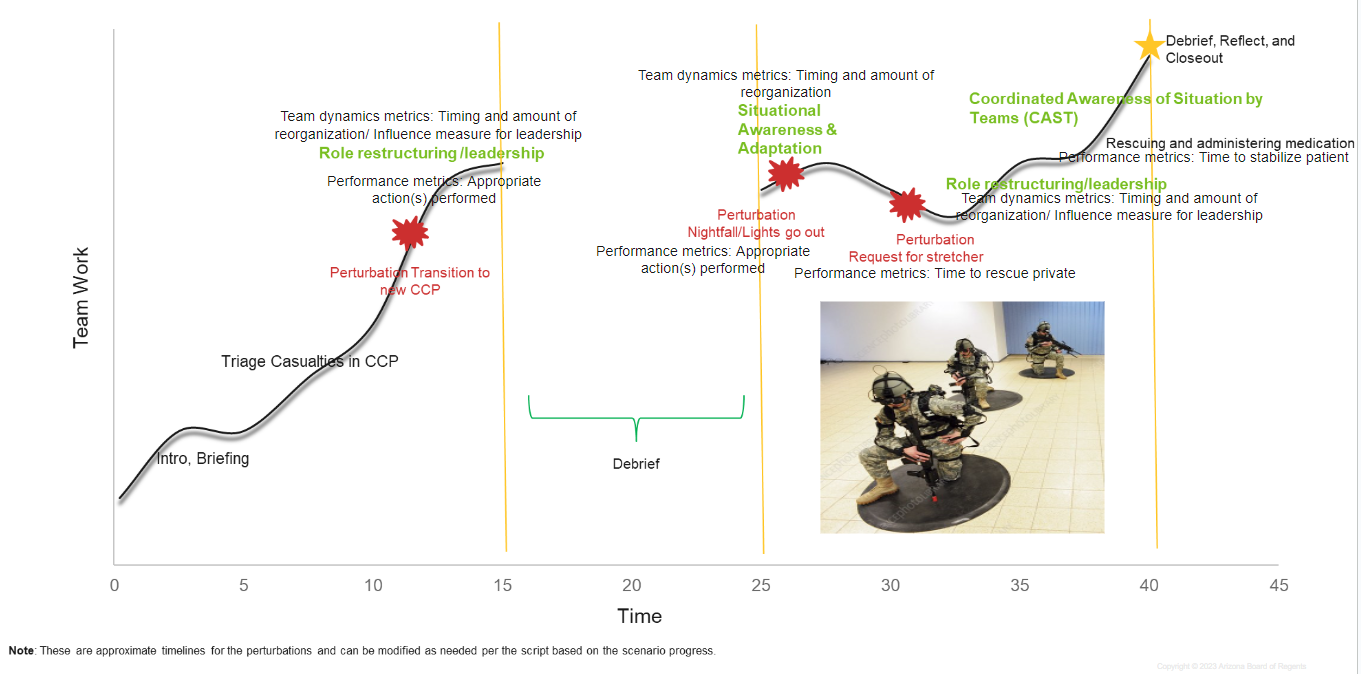

Using this information from the SME interviews and hCTA, we developed an event flow diagram that detailed task sequences and situational awareness across team members. Additionally, we introduced critical perturbations, which are unexpected but significant disruptions (such as disorganization, unplanned changes in team leadership, or environmental challenges), to assess and improve team adaptability.

Following the initial design, we gathered multiple rounds of feedback from SMEs. In the first review, SMEs focused on validating the accuracy of the CCP design, team roles, and operational flow within the scenario. Based on their input, we refined the training scenario and reintroduced it for a second round of review. In this phase, we focused on fine-tuning the non-player character (NPC) scripts, ensuring that the interactions and dialogue reflected real-life battlefield conditions. The final round of review provided detailed feedback on the remaining discrepancies and allowed us to make minor adjustments to enhance the trainee experience.

Based on the SME feedback and our iterative designs during investigation, we finalized a comprehensive pilot scenario for a CCP in an urban setting, which included various perturbations to challenge trainees. The scenario was then passed to the Software Engineering and Virtual Environment teams for technical development.

The final scenario involved three Combat Life Saver (CLS) trainees operating in a high-stress CCP setting, supported by NPCs representing various roles. The scenario, divided into two 15-minute sessions with debriefing breaks, aimed to test the trainees’ ability to manage casualties, communicate effectively, and adapt under pressure, ultimately refining their ability to operate as a cohesive, adaptive team (see Figure 2).

Figure 2

Final Scenario that was developed
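The scenario parameters stated in the text (an urban CCP, three CLS trainees, two 15-minute sessions, and the example perturbation types) could be captured in a small configuration record before handoff to development. This is a hedged sketch only: the `Scenario` class, its field names, and its validation rules are hypothetical and do not describe the project's actual data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Hypothetical configuration record for a team training scenario."""
    setting: str
    trainee_count: int
    session_minutes: tuple   # duration of each session, in minutes
    perturbations: tuple     # disruption types injected during the scenario

    def total_session_minutes(self) -> int:
        """Total time in sessions, excluding debriefing breaks."""
        return sum(self.session_minutes)

    def validate(self) -> None:
        """Basic sanity checks; the rules here are illustrative assumptions."""
        if self.trainee_count < 1:
            raise ValueError("A scenario needs at least one trainee")
        if not self.session_minutes or any(m <= 0 for m in self.session_minutes):
            raise ValueError("Each session needs a positive duration")
        if not self.perturbations:
            raise ValueError("At least one perturbation is expected")


# Values taken from the scenario description in the text.
pilot = Scenario(
    setting="Urban Casualty Collection Point",
    trainee_count=3,
    session_minutes=(15, 15),
    perturbations=(
        "disorganization",
        "unplanned change in team leadership",
        "environmental challenge",
    ),
)
pilot.validate()
```

Keeping the record immutable (`frozen=True`) is one way to ensure the reviewed scenario is not altered unintentionally after SME validation.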

In conclusion, the current nested cycle provided a foundation for developing this solution as a multiuser VR system that uses wireless tethered headsets connected to client computers, with a central server that tracks and manages game states. After development, we plan to carry out a nested cycle in the implementation phase and conduct an empirical heuristic evaluation. Our heuristic evaluation will examine usability and effectiveness through established evaluation principles. Finally, we will enter the Investigation phase to validate metrics and determine the impact of the training system developed. Additionally, we will assess whether these Learning Engineering cycles should be repeated to enhance our VR training in the future.

By following the Learning Engineering process of “Challenge, Create, Implement, and Investigate”, we were able to develop highly realistic TC3 training within the Synthetic Training Environment. We began by addressing the challenge of accurately simulating team dynamics and performance under the stress of Tactical Combat Casualty Care. This helped us create a robust initial pilot scenario informed by Hybrid Cognitive Task Analysis, SME interviews, and iterative design feedback. Once the design was validated through multiple rounds of SME reviews, we proceeded to develop it on the Virtual Reality platform. Aspects such as perturbations were integrated to enhance the immersive training experience. Finally, our goal is to investigate the training's effectiveness in terms of team dynamics and adaptability and to optimize the learning environment. This process allowed us to design a training experience intended to enhance trainees’ ability to adapt and perform as a cohesive team in high-pressure situations.

The research described herein has been sponsored by the U.S. Army Combat Capabilities Development Command under cooperative agreement W912CG-23-2-0002. The statements and opinions expressed in this article do not necessarily reflect the position or the policy of the United States Government, and no official endorsement should be inferred.